Integrating Docker Into Everyday Workflow: Practical Guide

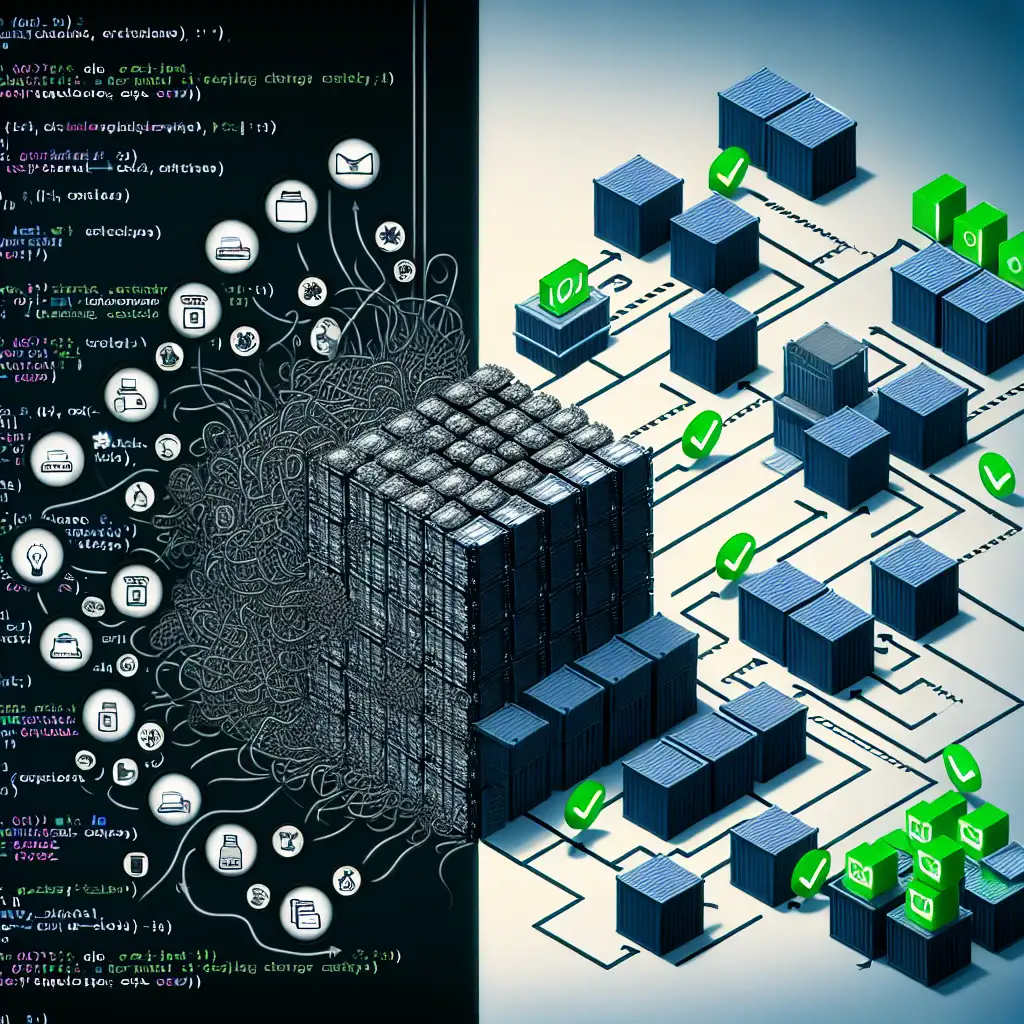

Consider the following: code review finishes and the feature is merged, but QA hits issues that never appeared locally. Configuration drift and inconsistent dependencies undermine confidence and waste cycles: the classic “works on my machine” syndrome. Docker, properly integrated, removes most of these variables. It goes well beyond single-image packaging; the target is identical, reproducible environments at every phase: coding, testing, deployment.

Beyond Basic Containerization

Most projects “Dockerize” only partially: they build images for production but do not use containers earlier in the cycle. The difference comes from adopting Docker for daily development, for CI test runners, for supporting databases, and for packaging artifacts. Critics worry about performance or developer friction, but with the right patterns (bind mounts for live reload, cached dependency layers, scripted commands) the overhead is usually small.

Why Bother?

- Host Parity: Application + DB + cache, all containerized, eliminates surprise discrepancies.

- Onboarding Time: Full environment spins up from zero with one command.

- Isolation: Local machine pollution (leftover binaries, orphaned processes) disappears.

- Pipeline Uniformity: CI, staging, and production operate with identical runtime builds.

Step 1: Construct a Reliable Dockerfile

Here’s a streamlined Dockerfile for a Node.js (18.x) service:

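FROM node:18.18-alpine

WORKDIR /usr/src/app

# Copy manifests first so the dependency layer stays cached until they change
COPY package.json package-lock.json ./
RUN npm ci --omit=dev

# Copy the application source (filtered by .dockerignore)
COPY . .

ENV NODE_ENV=production
EXPOSE 3000
CMD ["node", "index.js"]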

Notes:

- npm ci enforces lockfile fidelity and is considerably more deterministic than npm install. --omit=dev keeps images minimal, but it also means devDependencies (nodemon, test runners) are absent from this image; a dev or test image needs a plain npm ci.

- Alpine images reduce footprint but may cause issues for packages with native dependencies (e.g., bcrypt). If you hit compile errors, try node:18-slim.

- Build context (COPY . .) can sometimes leak unwanted files. Use .dockerignore:

node_modules

*.log

.git

Step 2: Compose for the Real World

Development isn’t just the app. Consider cache layers, databases, search. A practical docker-compose.yml:

version: '3.8'

services:
  app:
    build:
      context: .
      dockerfile: Dockerfile
    ports:
      - "3000:3000"
    volumes:
      - .:/usr/src/app
      - /usr/src/app/node_modules  # Prevents host node_modules from masking container's
    environment:
      - NODE_ENV=development
    depends_on:
      - mongo
    command: npm run dev  # nodemon or ts-node-dev typical

  mongo:
    image: mongo:6.0.13
    ports:
      - "27017:27017"
    volumes:
      - mongo-data:/data/db

volumes:
  mongo-data:

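Trade-off: Bind mounts give live code reload, which works well for Node.js, but file-change events can be unreliable on WSL2/macOS (a polling watcher such as nodemon --legacy-watch is a common workaround). You may occasionally need to clear the data volume when the schema changes. If modules seem stale, a plain restart is not enough, because the anonymous node_modules volume is reused; recreate it with docker-compose up --build --renew-anon-volumes.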
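One related startup detail: depends_on in the short form used here only controls start order, so the app container can come up before MongoDB is ready to accept connections. A minimal sketch of a retry wrapper the app could call at startup is shown below. The function name retry, its options, and the connect callback are hypothetical and not part of any driver's API.

```javascript
// Minimal retry helper (a sketch, not tied to any specific database driver).
// `connect` is a hypothetical async function supplied by the caller, for
// example a wrapper around your MongoDB client's connect call.
async function retry(connect, { attempts = 10, delayMs = 1000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt += 1) {
    try {
      // Success: hand back whatever the connect function resolved to.
      return await connect();
    } catch (err) {
      lastError = err;
      // Wait before the next attempt, but not after the final one.
      if (attempt < attempts) {
        await new Promise((resolve) => setTimeout(resolve, delayMs));
      }
    }
  }
  // Every attempt failed: surface the most recent error to the caller.
  throw lastError;
}
```

At startup the app would wrap its own connection call, for example retry(() => connectToMongo(url)), where connectToMongo stands in for whatever your driver provides. A fixed delay keeps the sketch short; exponential backoff is a common refinement.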

Step 3: Hide Complexity Behind Scripts

No one should type multi-line Docker commands repeatedly. Hide the details in a Makefile or in package.json scripts:

"scripts": {
  "dc:up": "docker-compose up --build",
  "dc:down": "docker-compose down -v",
  "dc:test": "docker-compose run --rm app npm test",
  "dc:logs": "docker-compose logs -f app"
}

Gotcha: docker-compose run creates a new container every time—resetting state. For stateful testing, use exec into the running container or rely on persistent volumes.

Or, Makefile for UNIXy teams:

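.PHONY: up down test logs

up:
	docker-compose up -d --build

# -v also removes named volumes (including mongo-data), so local DB data is wiped
down:
	docker-compose down -v

# Runs in the already-running app container; a failing test run fails the target
test:
	docker-compose exec app npm test

logs:
	docker-compose logs -f app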

Step 4: Multi-Stage Docker Builds: Minimalism for Production

Keep deploys svelte and secure. Stop shipping gigabytes of build tools. A multi-stage Dockerfile (same example, Node.js):

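# Stage 1: install all dependencies (including dev) and build
FROM node:18.18-alpine AS builder
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build

# Stage 2: production dependencies plus the compiled output only
FROM node:18.18-alpine AS runtime
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm ci --omit=dev
COPY --from=builder /usr/src/app/dist ./dist
# Drop root privileges; the node user ships with the official image
USER node
EXPOSE 3000
CMD ["node", "dist/index.js"]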

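Known Issue: Some native modules (built via node-gyp) lack prebuilt binaries for Alpine's musl libc and can fail to install or load at runtime. A Debian-based image such as node:18-slim usually avoids this. Test the final image before deploying to production.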

Step 5: Pipeline Integration

Integrate Docker builds and tests directly in CI/CD for reproducible deployments. Example: GitHub Actions configuration fragment:

jobs:
  build_and_push:
    runs-on: ubuntu-22.04
    steps:
      - uses: actions/checkout@v4

      - name: Build Docker image
        run: docker build -t myapp:${{ github.sha }} .

      # Requires an image that still contains devDependencies; with the
      # multi-stage Dockerfile, build the test image with --target builder.
      - name: Run tests inside container
        run: docker run --rm myapp:${{ github.sha }} npm test

      - name: Push to registry
        env:
          DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}
          DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}
        run: |
          # Log in to the same registry that the push targets
          echo "$DOCKER_PASSWORD" | docker login -u "$DOCKER_USERNAME" --password-stdin myrepo.com
          docker tag myapp:${{ github.sha }} myrepo.com/myapp:${{ github.sha }}
          docker push myrepo.com/myapp:${{ github.sha }}

      # Deployment steps intentionally omitted.
      # Error handling: "Error response from daemon: pull access denied" typically means wrong registry or missing login step.

Step 6: Externalize Configuration (Override and .env Patterns)

Never bake secrets or environment-specific data into images.

.env:

MONGO_URL=mongodb://mongo:27017/main

NODE_ENV=development

PORT=3000

docker-compose.override.yml example:

services:
  app:
    env_file:
      - .env

To override for staging/production:

docker-compose -f docker-compose.yml -f docker-compose.prod.yml up
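On the application side, externalized configuration is only useful if the service reads it in one place and fails fast when something is missing. The following is a rough sketch of how the Node.js app might consume the variables from the .env example. The function name loadConfig, the defaults, and the validation rules are assumptions, not something the setup above prescribes.

```javascript
// Hypothetical config loader. The variable names match the .env example;
// the function name, defaults, and validation rules are assumptions.
function loadConfig(env = process.env) {
  const mongoUrl = env.MONGO_URL;
  if (!mongoUrl) {
    // Fail fast: a missing connection string should stop startup.
    throw new Error('MONGO_URL is required');
  }

  // Number() rejects partial input such as "3000abc" by returning NaN.
  const port = Number(env.PORT ?? '3000');
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`PORT must be an integer between 1 and 65535, got "${env.PORT}"`);
  }

  return {
    mongoUrl,
    nodeEnv: env.NODE_ENV ?? 'development',
    port,
  };
}
```

Calling loadConfig() once at startup and passing the result around keeps process.env lookups out of the rest of the code, and passing a plain object instead of process.env makes the loader easy to unit test.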

Side Notes

- Alternative: Some teams adopt Tilt or Skaffold for hot-reloading local clusters. Worth considering for multi-service setups.

- Volume Cleanup: Old data, especially from stateful services (Postgres, Mongo), can persist across runs. Use docker volume ls and docker volume prune routinely.

- Image bloat: To inspect image size, use docker images --format "table {{.Repository}}\t{{.Tag}}\t{{.Size}}".
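The image-size check can be turned into a simple guard. The sketch below parses tab-separated repository, tag, and size lines and reports images above a limit. It assumes the same format string without the table prefix (so there is no header row), and it assumes sizes are printed with decimal units such as kB, MB, and GB. The function names parseSize and findLargeImages are hypothetical.

```javascript
// Decimal multipliers. Assumption: sizes look like "5.57kB", "142MB", "1.09GB".
const UNIT_BYTES = { B: 1, kB: 1e3, MB: 1e6, GB: 1e9, TB: 1e12 };

// Convert a human-readable size string into a byte count.
function parseSize(text) {
  const match = /^([\d.]+)\s*(B|kB|MB|GB|TB)$/.exec(text.trim());
  if (!match) {
    throw new Error(`Unrecognized size: ${text}`);
  }
  // Round to avoid floating-point noise from fractional values.
  return Math.round(Number(match[1]) * UNIT_BYTES[match[2]]);
}

// Each line is expected as "<repository>\t<tag>\t<size>".
// Returns the images whose size exceeds limitBytes.
function findLargeImages(lines, limitBytes) {
  return lines
    .filter((line) => line.trim() !== '')
    .map((line) => {
      const [repository, tag, size] = line.split('\t');
      return { image: `${repository}:${tag}`, bytes: parseSize(size) };
    })
    .filter((entry) => entry.bytes > limitBytes);
}
```

A CI step could feed the command output into findLargeImages, split on newlines, and fail the job when the returned list is not empty. Where to set the limit is a per-project judgment call.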

Why This Works

By unifying dev, test, and deploy environments, Docker builds trust in every stage. Teams observe fewer pipeline reversals due to “invisible” infrastructure changes. Developers cut onboarding from hours to minutes. Debugging inside containers resembles production far more closely.

It is not perfect: network file systems and OS-level differences can still cause surprises. Stay alert. Automated checks and regular pruning keep the system healthy.

Actual mono-repo adoption has its own quirks; Docker helps, but orchestration (k8s, ECS) is still needed for production-scale multi-service deployments.

Questions on non-Node stacks (Python, Go, .NET), multi-container orchestration, or handling persistent database snapshots? There’s no single “best pattern”, but these techniques provide a robust, portable baseline.