Integrating Docker Seamlessly into Your Existing Project Workflow

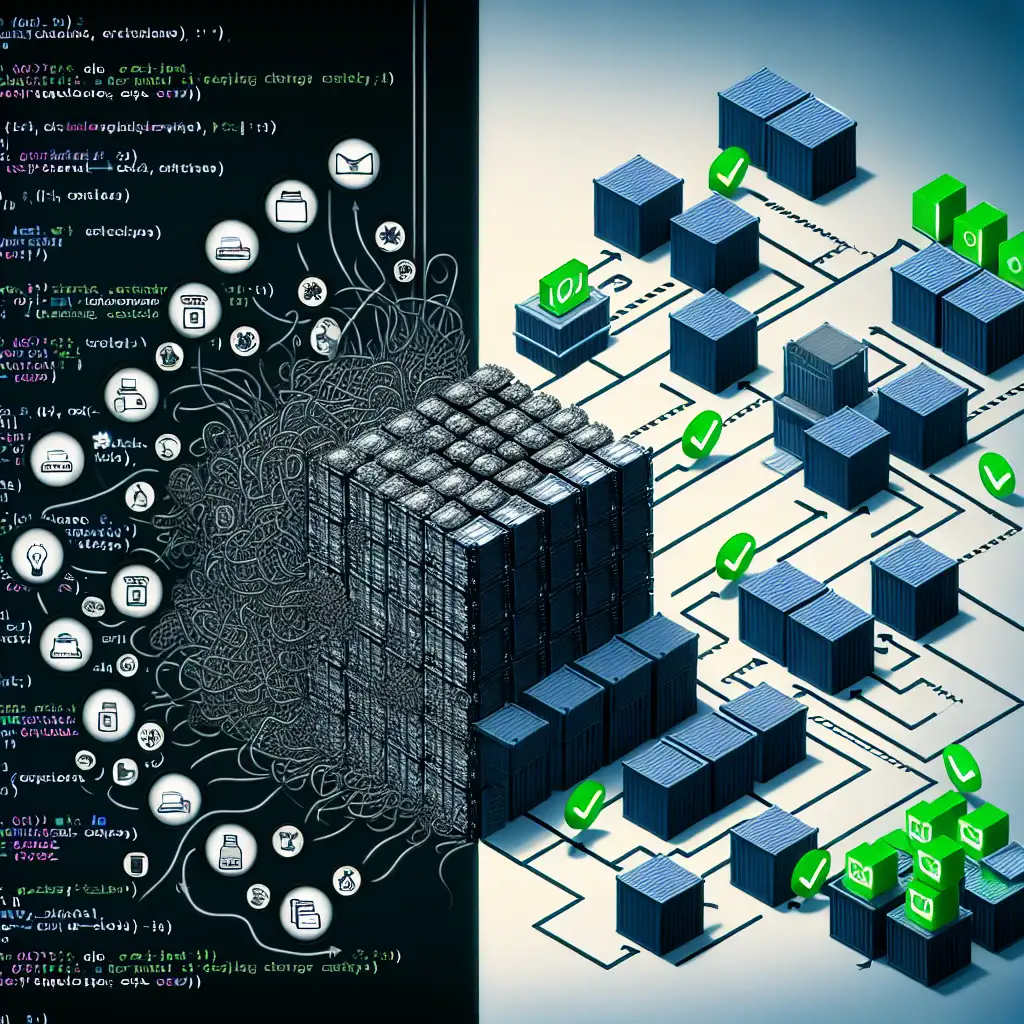

Most developers think adding Docker is about containerizing apps alone. The real power lies in embedding Docker deeply into your workflow to transform collaboration and deployment, not just packaging. Adding Docker to your project streamlines development, testing, and deployment by ensuring consistent environments across all stages, drastically reducing the dreaded "it works on my machine" problems.

In this post, I’ll walk you through a practical approach to integrating Docker smoothly into your existing project workflow so you can enjoy faster development cycles, reliable testing environments, and effortless deployments.

Why Integrate Docker Beyond Containerizing?

Before diving into the how-to, it’s helpful to clarify why just "dockerizing" your app isn’t enough.

- Consistency Across Machines: Developers and CI/CD run the app inside identical containers.

- Simplified Setup: New team members spin up the whole environment with a single command.

- Reliable Testing: The test environment matches production closely.

- Easier Deployment: The same image runs locally, in staging, and in production with minimal differences.

Embedding Docker deeply means making it part of how you develop, test, and deploy — not just building an image once in a while.

Step 1: Start With a Solid Dockerfile

A clean and efficient Dockerfile is your foundation. Here’s a sample Node.js app Dockerfile that you can adapt:

    # Use an official Node.js image as a base
    FROM node:18-alpine

    # Create app directory in the container
    WORKDIR /usr/src/app

    # Copy package files first (for caching)
    COPY package*.json ./

    # Install all dependencies, including the dev dependencies that the
    # development setup in Step 2 relies on (Step 4 trims them for production)
    RUN npm install

    # Copy app source code
    COPY . .

    # Expose the port your app runs on
    EXPOSE 3000

    # Run the application
    CMD ["node", "index.js"]

Tips:

- Separate dependency installation from copying source files for better layer caching.

- Use lightweight base images like alpine-based ones for compact containers.

- Keep environment-specific configurations outside the image via env variables or mounted files.

Step 2: Define Your Development Environment with docker-compose

Developers often need more than just the main app container — they might depend on databases, caches, or other services. Enter docker-compose.

Create a docker-compose.yml at your project root:

    version: '3.8'

    services:
      app:
        build: .
        volumes:
          - .:/usr/src/app             # Mount current dir for live code reloads
          - /usr/src/app/node_modules  # Keep the image's node_modules instead of the host's
        ports:
          - "3000:3000"
        environment:
          NODE_ENV: development
        command: npm run dev  # Run with nodemon or similar live-reload tool

      mongo:
        image: mongo:6.0  # The official mongo image has no alpine variant
        ports:
          - "27017:27017"
        volumes:
          - mongo-data:/data/db

    volumes:
      mongo-data:

Why this helps:

- You run both your app and dependent services in containers.

- Code changes show up immediately without rebuilding images (via volume mounts).

- Everyone on your team uses the same environment configuration file.
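One thing the Compose file above does not guarantee is startup order: the app and mongo containers start together, so the app may try to connect before MongoDB accepts connections. Here is a minimal sketch of a wait-and-retry helper the app could call at startup, assuming plain TCP reachability is a good enough readiness signal. The function names (backoffDelay, canConnect, waitForService) and the retry defaults are illustrative choices, not part of any library.

```javascript
'use strict';
const net = require('node:net');

// Delay before retry number `attempt` (0-based): doubles each time, capped at maxMs.
function backoffDelay(attempt, baseMs = 250, maxMs = 5000) {
  return Math.min(baseMs * 2 ** attempt, maxMs);
}

// Resolves to true if a TCP connection to host:port succeeds within timeoutMs,
// and to false on a timeout or connection error.
function canConnect(host, port, timeoutMs = 1000) {
  return new Promise((resolve) => {
    const socket = net.connect({ host, port });
    const finish = (ok) => {
      socket.destroy();
      resolve(ok);
    };
    socket.setTimeout(timeoutMs);
    socket.once('connect', () => finish(true));
    socket.once('timeout', () => finish(false));
    socket.once('error', () => finish(false));
  });
}

// Polls host:port until it accepts connections; throws after maxAttempts failures.
async function waitForService(host, port, maxAttempts = 10) {
  for (let attempt = 0; attempt < maxAttempts; attempt += 1) {
    if (await canConnect(host, port)) return;
    await new Promise((resolve) => setTimeout(resolve, backoffDelay(attempt)));
  }
  throw new Error(`${host}:${port} not reachable after ${maxAttempts} attempts`);
}

module.exports = { backoffDelay, canConnect, waitForService };
```

Inside the Compose network the database is reachable by its service name rather than localhost, so the app would call await waitForService('mongo', 27017) before opening its database connection.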

Step 3: Simplify Development Commands With npm Scripts (or Makefile)

Make using Docker natural by wrapping common commands into scripts.

In package.json:

    "scripts": {
      "docker:start": "docker-compose up",
      "docker:stop": "docker-compose down",
      "docker:test": "docker-compose exec app npm test"
    }

docker-compose exec takes the service name (app) and finds the running container itself, so there is no container name to look up or hard-code.

Or use a simple shell script or Makefile (recipe lines must start with a tab):

    .PHONY: up down test logs

    up:
    	docker-compose up -d

    down:
    	docker-compose down

    test:
    	docker-compose exec app npm test

    logs:
    	docker-compose logs -f

This lowers friction so running containers feels as natural as running local servers.
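If your team would rather not depend on make, the same wrapping can be done in Node itself. The sketch below covers the same four tasks as the Makefile; the names TASKS, argsFor, and runTask are hypothetical, and it assumes docker-compose is on the PATH and that the service is called app as in Step 2.

```javascript
'use strict';
const { spawnSync } = require('node:child_process');

// Task name -> argument list passed to docker-compose.
const TASKS = {
  up: ['up', '-d'],
  down: ['down'],
  test: ['exec', 'app', 'npm', 'test'],
  logs: ['logs', '-f'],
};

// Returns the docker-compose arguments for a task, or throws for unknown names.
function argsFor(task) {
  if (!Object.prototype.hasOwnProperty.call(TASKS, task)) {
    const available = Object.keys(TASKS).join(', ');
    throw new Error(`Unknown task "${task}". Available: ${available}`);
  }
  return TASKS[task];
}

// Runs the task with the terminal attached and returns docker-compose's exit code.
function runTask(task) {
  const result = spawnSync('docker-compose', argsFor(task), { stdio: 'inherit' });
  if (result.error) throw result.error;
  return result.status ?? 1;
}

module.exports = { argsFor, runTask };
```

Saved as, say, scripts/docker.js with a final line process.exitCode = runTask(process.argv[2]); it could be invoked as node scripts/docker.js up, or hooked into the npm scripts shown earlier.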

Step 4: Use Multi-stage Builds for Production Images

To keep your production images lean and secure, leverage multi-stage builds:

    # Builder stage: install all dependencies (including dev) and build assets
    FROM node:18-alpine AS builder
    WORKDIR /usr/src/app
    COPY package*.json ./
    RUN npm install
    COPY . .
    # e.g., compile TypeScript or bundle frontend assets
    RUN npm run build

    # Final stage for production runtime
    FROM node:18-alpine AS production
    WORKDIR /usr/src/app
    COPY package*.json ./
    # Install runtime dependencies only
    RUN npm install --omit=dev
    # Copy built assets from the builder stage (Dockerfile comments must be on
    # their own line; a trailing "# ..." would be parsed as COPY arguments)
    COPY --from=builder /usr/src/app/dist ./dist
    EXPOSE 3000
    CMD ["node", "dist/index.js"]

This helps maintain quick deploy times without shipping unnecessary dev tools or files.

Step 5: Integrate With Your CI/CD Pipeline

Once local development works flawlessly inside Docker containers, update your pipeline config (GitHub Actions, Jenkins, GitLab CI) to:

- Build images using the same Dockerfile.

- Run tests inside containers matching development & production.

- Push tested images to a registry.

- Deploy these consistent images to staging/production servers or orchestration platforms.

For example, in GitHub Actions:

    jobs:
      build-test-deploy:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3

          - name: Build Docker Image
            run: docker build -t myapp:${{ github.sha }} .

          # Assumes the image contains the test runner and test files. With the
          # multi-stage Dockerfile from Step 4, build the builder stage for this
          # step instead (docker build --target builder ...).
          - name: Run Tests Inside Container
            run: |
              docker run --rm myapp:${{ github.sha }} npm test

          - name: Push Image to Registry
            run: |
              echo "${{ secrets.DOCKER_PASSWORD }}" | docker login -u "${{ secrets.DOCKER_USERNAME }}" --password-stdin
              docker tag myapp:${{ github.sha }} myrepo/myapp:${{ github.sha }}
              docker push myrepo/myapp:${{ github.sha }}

          # Add deployment steps here...

Step 6 (Bonus): Use .env Files and Compose Overrides for Environment Configs

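Avoid baking config values directly into images. Use .env files along with docker-compose overrides:

.env

    DATABASE_URL=mongodb://mongo:27017/mydb
    NODE_ENV=development
    PORT=3000

docker-compose.override.yml

    services:
      app:
        env_file:
          - .env

Compose merges docker-compose.override.yml into docker-compose.yml automatically. For production, put the differing settings in a separate file (docker-compose.prod.yml) and name both files explicitly, for example docker-compose -f docker-compose.yml -f docker-compose.prod.yml up -d.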
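On the application side, these variables arrive through process.env. The following is a minimal sketch of reading and validating them in one place, so that a missing or malformed value fails fast at container start. The loadConfig name and the chosen defaults are assumptions for illustration; only the variable names come from the .env file above.

```javascript
'use strict';

// Builds the app's configuration from an environment object.
// Defaults to process.env; passing a plain object makes it easy to test.
function loadConfig(env = process.env) {
  const nodeEnv = env.NODE_ENV || 'development';

  // PORT falls back to 3000, the port exposed by the Dockerfile.
  const port = Number(env.PORT || 3000);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`PORT must be an integer between 1 and 65535, got "${env.PORT}"`);
  }

  // No default here: a silent fallback could point the app at the wrong database.
  const databaseUrl = env.DATABASE_URL;
  if (!databaseUrl) {
    throw new Error('DATABASE_URL is not set; check .env or the container environment');
  }

  return Object.freeze({ nodeEnv, port, databaseUrl });
}

module.exports = { loadConfig };
```

The app's entry point would call loadConfig() once at startup and pass the result around, instead of reading process.env in many places.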

Wrapping It Up

Integrating Docker seamlessly means making it a natural part of every developer’s day—from cloning your repo through testing changes and deploying updates. Your workflow becomes more predictable, manageable, and collaborative.

Key takeaways to integrate smoothly now:

- Write carefully optimized Dockerfiles focused on both dev flexibility and prod efficiency.

- Use docker-compose to manage linked services with volume mounts for live-reload during development.

- Wrap commands so developers don’t have to memorize complex Docker invocations.

- Leverage multi-stage builds for clean production images.

- Embed Docker in CI/CD pipelines to guarantee consistency end-to-end.

- Keep configuration in env vars and override files rather than baking it into images.

Give these steps a shot on your next project or even an existing one—you’ll be amazed how quickly everyone feels more confident shipping software that truly “just works”.

If you want me to share example repos or detailed configs tailored to specific stacks like Python/Django or React/Node, just drop a comment! Happy Dockering! 🚢🐳