Efficiently Exporting AWS RDS Data to S3 Using Native Tools and Automation

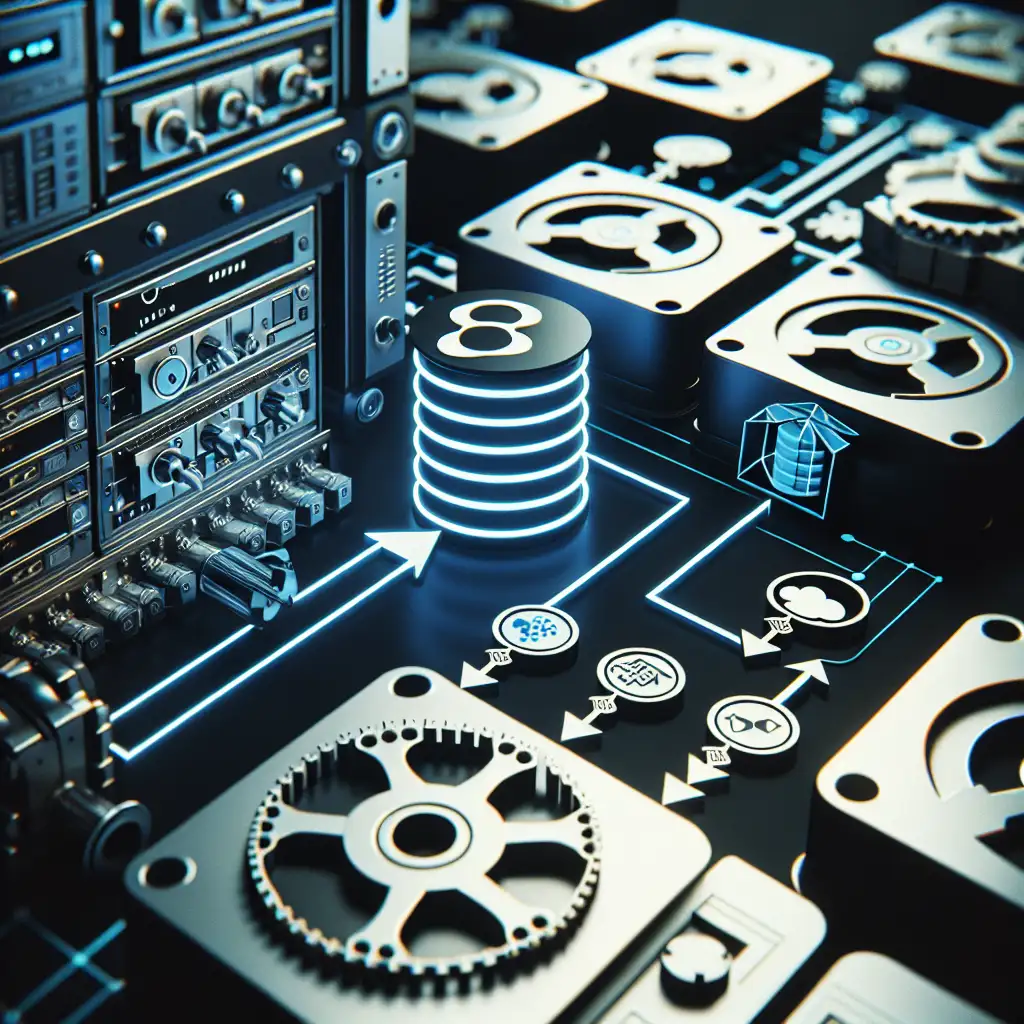

Forget complicated ETL pipelines—here’s how leveraging AWS's built-in capabilities with simple automation scripts can transform your RDS to S3 data transfers, saving time and money while maintaining data integrity.

Why Export Data from RDS to S3?

If you’re managing relational databases in AWS RDS, chances are you need to move data out regularly—whether for backups, analytics, or integration with other systems. Exporting RDS data to Amazon S3 is a cost-effective way to:

- Offload storage to cheaper object storage.

- Create snapshots for point-in-time recovery.

- Feed downstream analytics or machine learning workflows.

- Share data across accounts or applications.

Manually exporting and moving files is repetitive and error-prone. Automating this not only saves time but also helps scale your workloads reliably.

Native Tools for Moving Data from RDS to S3

AWS offers several native tools and services that simplify exporting your RDS database objects into S3:

1. RDS Snapshot Export to S3 (For Aurora & MySQL/PostgreSQL)

AWS provides a feature called Exporting RDS snapshots to Amazon S3. This exports snapshot data as Parquet files directly into an S3 bucket.

Pros:

- No custom coding needed.

- Parquet format is compressed and optimized for analytics.

- Supports AWS Glue integration out of the box.

Prerequisites:

- Supported engines: Aurora (PostgreSQL/MySQL), MySQL 5.7+.

- IAM roles with required permissions.

Example CLI command:

aws rds start-export-task \

--export-task-identifier my-export-task \

--source-arn arn:aws:rds:region:account-id:snapshot:snapshot-id \

--s3-bucket-name my-rds-export-bucket \

--iam-role-arn arn:aws:iam::account-id:role/MyRDSSnapshotExportRole \

--kms-key-id arn-of-kms-key

2. Data Dump via mysqldump or pg_dump to S3

If you run standard MySQL or PostgreSQL databases on RDS (not Aurora), you can export the database using native DB tools — mysqldump or pg_dump — then upload the dump file directly to an S3 bucket.

This method works well for smaller datasets or where snapshot exports aren’t supported.

Steps in brief:

- Connect to the RDS instance using an EC2 instance or your local machine.

- Run a dump command:

mysqldump -h your-rds-endpoint -u username -p dbname > dump.sql - Upload dump to S3:

aws s3 cp dump.sql s3://my-bucket-name/backups/dump-$(date +%F).sql

Automating the Export Process with AWS Lambda and CloudWatch

To make all this seamless, automate exports on a schedule:

Example Automation Flow:

- Create a Lambda function that triggers

start-export-taskCLI commands or runs dump-and-upload scripts (for non-Aurora). - Schedule exports using CloudWatch Events / EventBridge rules (e.g., nightly).

- Set up IAM roles so Lambda has permissions for both RDS snapshot export & S3 write access.

- Enable CloudWatch Logs within Lambda for monitoring success/failures.

Sample Python Lambda Snippet for Snapshot Exporting Aurora Snapshot

import boto3

import os

from datetime import datetime

rds_client = boto3.client('rds')

def lambda_handler(event, context):

snapshot_arn = os.environ['SNAPSHOT_ARN']

s3_bucket = os.environ['S3_BUCKET']

iam_role_arn = os.environ['EXPORT_ROLE_ARN']

kms_key_id = os.environ.get('KMS_KEY_ID') # optional

export_task_id = f"export-task-{datetime.utcnow().strftime('%Y%m%d%H%M%S')}"

params = {

'ExportTaskIdentifier': export_task_id,

'SourceArn': snapshot_arn,

'S3BucketName': s3_bucket,

'IamRoleArn': iam_role_arn,

}

if kms_key_id:

params['KmsKeyId'] = kms_key_id

response = rds_client.start_export_task(**params)

print(f"Started export task {export_task_id}")

return response

You’d configure environment variables with the relevant ARNs, bucket names, etc., then deploy this Lambda and set a CloudWatch Event rule triggering it nightly.

Bonus: Using AWS Data Pipeline or Step Functions for More Complex Workflows

For more complicated ETL workflows involving transformation, validation, or multiple exports across databases, you might consider:

- AWS Data Pipeline: Schedule jobs and chain together export tasks.

- AWS Step Functions: Orchestrate Lambda functions and manual approvals combined with retry logic.

But if your goal is straightforward export-to-S3 automation, native snapshot exports + simple Lambda automation should suffice.

Final Tips for Successful Automation

- Use IAM least privilege: Give roles only required permissions (

rds:StartExportTask,s3:PutObject, etc.). - Leverage versioned buckets: So older exports are retained safely without overwrites.

- Monitor job status: Use CloudWatch Logs/metrics plus SNS alerts for failures.

- Test incremental exports: Start with small datasets before scaling up large DB snapshots.

Summary

Moving your AWS RDS data over to Amazon S3 doesn’t have to mean building complicated ETL pipelines or relying on third-party tools. By harnessing AWS’s native snapshot export features coupled with automation powered by Lambda and CloudWatch Events, you can:

- Cut down manual efforts and errors.

- Ensure timely backups/export deliveries.

- Provide ready-to-analyze datasets in optimized formats like Parquet.

Start simple — configure export permissions, build a tiny automation script, then schedule it consistently—to get scalable cloud-native data management running today!

Happy exporting!

If you found this walkthrough useful, feel free to share or comment below about your experiences automating AWS RDS exports!