Maximizing AWS Athena Query Results Efficiency by Automating Exports to S3

Why settle for manual downloads? Automate Athena-to-S3 exports to supercharge your data pipeline and reclaim precious engineering time.

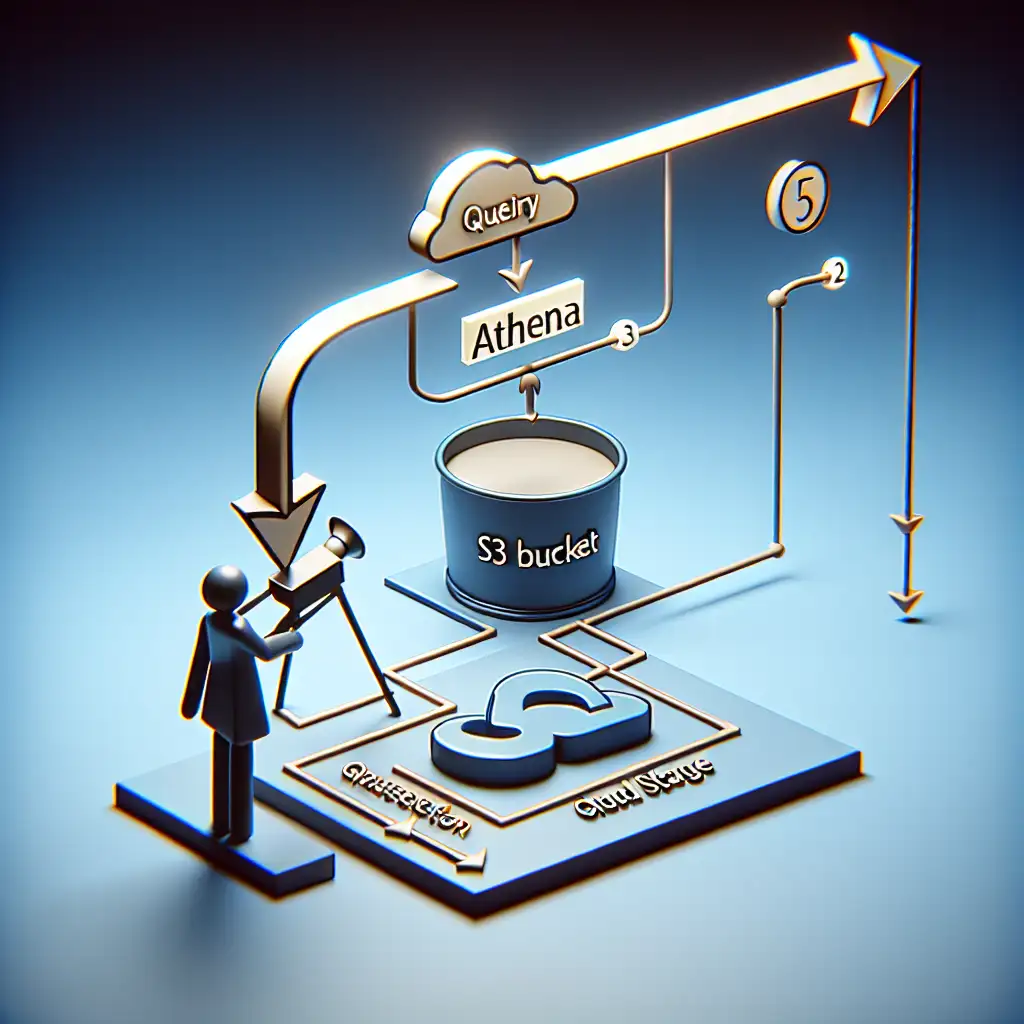

If you regularly run queries in AWS Athena, you know how valuable those results can be—whether you're analyzing trends, generating reports, or feeding data into downstream processes. But manually downloading those query results each time can quickly become a tedious bottleneck. Imagine a workflow where your query outputs are automatically exported to Amazon S3, making your data instantly accessible for further processing, archiving, or analytics.

In this practical guide, I’ll walk you through why automating exports from Athena to S3 is a game-changer for efficiency—and exactly how you can set it up in your own environment.

Why Automate Athena Query Result Exports?

Before diving into the how, let’s be clear on the why:

-

Streamlined Data Workflows: When Athena query results land directly in an S3 bucket, there is no need to manually download and then re-upload or move files, eliminating friction between querying and downstream tasks.

-

Cost Efficiency: Storing results on S3 leverages its scalable, low-cost storage. You’re not paying for needless intermediate transfers or depending on Athena's temporary query result storage.

-

Seamless Integration: Many AWS analytics and data tools (e.g., Glue, Redshift Spectrum, EMR) can easily access data stored in S3. Automating exports builds a solid foundation for these integrations.

-

Reclaim Engineering Time: Automation frees your team from repetitive manual tasks so they can focus on insights and development rather than file wrangling.

How to Automate Athena Query Exports to S3

1. Set Up an Appropriate Output Location in S3

Athena outputs query results by default to a specified S3 bucket location configured in its settings. You can define this location when running queries programmatically or via the console.

To keep things organized:

-

Create a dedicated bucket or prefix (folder) like

s3://my-athena-query-results/exports/. -

Set permissions granting Athena access to write objects into this bucket.

2. Use the AWS SDK or CLI to Run Queries Programmatically

You can use SDKs (Python’s boto3 is popular) or AWS CLI commands to execute Athena queries with the output location set explicitly.

Example Using Python boto3:

import boto3

import time

athena_client = boto3.client('athena')

def run_athena_query(query, database, output_location):

response = athena_client.start_query_execution(

QueryString=query,

QueryExecutionContext={'Database': database},

ResultConfiguration={'OutputLocation': output_location}

)

return response['QueryExecutionId']

def wait_for_query(athena_client, execution_id):

while True:

result = athena_client.get_query_execution(QueryExecutionId=execution_id)

status = result['QueryExecution']['Status']['State']

if status in ['SUCCEEDED', 'FAILED', 'CANCELLED']:

return status

time.sleep(2)

query = "SELECT * FROM sales_data LIMIT 10;"

database = "my_database"

output_location = "s3://my-athena-query-results/exports/"

query_execution_id = run_athena_query(query, database, output_location)

final_status = wait_for_query(athena_client, query_execution_id)

if final_status == 'SUCCEEDED':

print(f"Query completed successfully! Results saved at: {output_location}{query_execution_id}.csv")

else:

print(f"Query failed with status: {final_status}")

With this snippet:

- The query runs asynchronously.

- Its output is saved directly under the specified folder with a file named after the execution ID.

- You get programmatic control and notification around query completion.

3. Automate with Lambda and Event Triggers (Optional Advanced Step)

If you want an event-driven workflow:

-

Trigger AWS Lambda function when a new object appears in the export bucket (via S3 event notification).

-

Lambda can launch additional processing steps (e.g., loading results into Redshift or triggering alerts).

This setup fully automates your pipeline from query submission through post-processing—no human intervention required!

Best Practices and Tips

-

Use Partitioned Tables & Compressed Formats: If exporting large datasets regularly, partitioned tables plus compressed file formats like Parquet reduce storage and speed up downstream reads.

-

Clean Up Old Results: Implement lifecycle policies on your results bucket or automate cleanup scripts so storage doesn’t balloon endlessly.

-

Secure Your Buckets: Limit write/read access only as needed using IAM policies and bucket policies.

Summary

Automating exports of AWS Athena query results into Amazon S3 dramatically improves efficiency by removing manual steps from your data workflows. Whether through simple SDK-driven scripts or full event-based pipelines leveraging Lambda, you unlock faster iteration cycles, easier integration with analytic tools, and lower operational overhead.

Try incorporating this automation in your next project—you’ll wonder how you ever managed without it!

If you want me to share a full example repo script or help with a custom automation pattern tailored for your workload just drop me a comment below!