Optimizing Data Migration from RDS to Snowflake for Real-Time Analytics

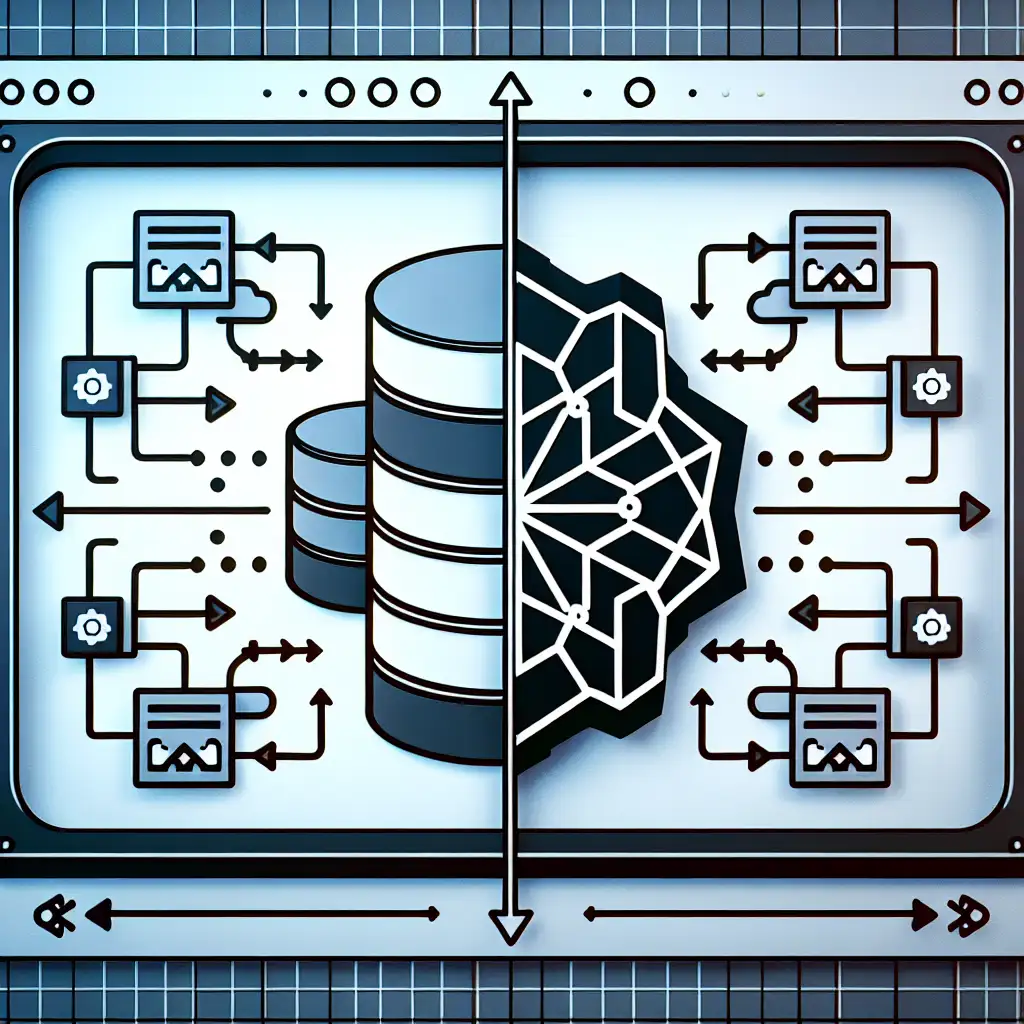

Most believe moving from RDS to Snowflake is a straightforward lift-and-shift process. The contrarian view: Without deliberate architectural and pipeline optimizations, you risk creating data silos and losing insights—making the migration an opportunity, not just a task. Migrating data from traditional RDS systems (like Amazon RDS for MySQL, PostgreSQL, or SQL Server) to Snowflake opens the door to scalable cloud data warehousing and accelerated analytics, but only if done thoughtfully.

In this post, I’ll walk you through practical, hands-on steps to optimize your data migration from RDS to Snowflake—ensuring minimal downtime and maximum value extraction.

Why Migrate from RDS to Snowflake?

Before diving into optimization techniques, let’s briefly clarify why this migration matters:

- Scalability: Snowflake scales compute and storage independently.

- Performance: Snowflake’s MPP engine accelerates complex queries.

- Real-Time Analytics: Combining streaming and batch data becomes far easier.

- Seamless Integration: Works well with modern BI tools and pipelines.

All these benefits come at a cost: the migration can be complex. So optimizing it is key.

Step 1: Assess Your Current RDS Schema & Workloads

Start by analyzing:

- Schema complexity: Are your tables normalized or denormalized? Snowflake works best with well-designed schemas but can handle semi-structured data easily.

- Data volumes: Size impacts migration time and tool selection.

- Query patterns: Identify which reports or dashboards require near-real-time data.

Example: If your RDS schema has many normalized tables joined frequently in reports, consider using Snowflake’s micro-partitioning with materialized views for fast performance post-migration.

Step 2: Choose the Right Migration Tools & Methods

Options include:

- Snowpipe + CDC (Change Data Capture): Ideal for near-real-time sync. For example, use AWS DMS (Database Migration Service) with CDC enabled to stream changes into Snowflake via S3 buckets and Snowpipe ingestion.

- Bulk Export/Import: Useful for initial full load. Export RDS tables as CSV/parquet files, stage them in S3, then load them into Snowflake.

- ETL/ELT Pipelines (Airflow, dbt): Transform data before loading to suit analytics needs.

Tip: Combining a bulk initial load with incremental CDC updates reduces downtime while keeping data fresh.

Step 3: Optimize Data Loading Strategies

To avoid bottlenecks during load:

- Use compressed file formats like Parquet or Avro when exporting from RDS — smaller file sizes speed up transfer.

- Partition exported files logically (e.g., by date or region) so loaders can parallelize ingestion.

- Adjust batch size in your ETL pipeline to balance latency vs stability.

Step 4: Align Data Models for Performance & Cost Efficiency

Snowflake differs from an OLTP system like RDS:

- Flatten complex joins where possible; exploit semi-structured storage using VARIANT data type for JSON-like objects.

- Use clustering keys on frequently queried columns — unlike traditional indexing, this optimizes pruning micro-partitions during query execution.

Example: Ingest application event logs as JSON directly into VARIANT columns rather than relationally normalized rows. This reduces complexity and leverages semi-structured query power in Snowflake.

Step 5: Implement Real-Time Analytics Pipelines

True “real-time” means near-zero latency between source changes in RDS and availability in Snowflake analytics layers.

A sample architecture:

- Enable CDC on RDS using AWS DMS or Debezium.

- Stream change logs into an S3 bucket partitioned by timestamp.

- Set up Snowpipe to automatically ingest these files continuously into a staging table in Snowflake.

- Transform and merge staged changes into production tables via tasks/scheduled procedures inside Snowflake for near real-time freshness.

Example Migration Scenario

Suppose you have an e-commerce app running on Amazon RDS MySQL storing orders and customers:

- Export orders/customers tables as daily CSVs during off-hours → upload to S3 → load into base tables on Snowflake as a historical snapshot.

- Configure AWS DMS with CDC enabled for these tables → streaming new inserts/updates/deletes → output parquet files onto S3 every few minutes.

- Trigger Snowpipe on new parquet files → incremental ingestion into staging tables.

- Use SQL MERGE statements in Snowflake tasks running every minute to merge staging changes with main analytics tables.

- Query these optimized datasets with BI tools like Tableau or Looker for near real-time order analytics dashboards.

Final Tips

- Always run tests on sample datasets before committing large production migrations.

- Build monitoring around your pipeline (Glue jobs + CloudWatch logs / Task history in Snowflake) to catch failures instantly.

- Budget time for iterative performance tuning — both network throughput during loads and query tuning inside Snowflake matter deeply.

Migrating from RDS to Snowflake isn’t “lift-and-shift.” Instead, it’s a strategic opportunity to rethink your data architecture for speed and scale—maximizing business value while minimizing disruption. With careful planning and optimization of pipelines, schema design, and loading techniques, you can unlock truly real-time analytics that fuel smarter decisions every day.

If you’re facing a migration project right now or want advice tailored to your stack — drop me a comment below! Happy migrating!