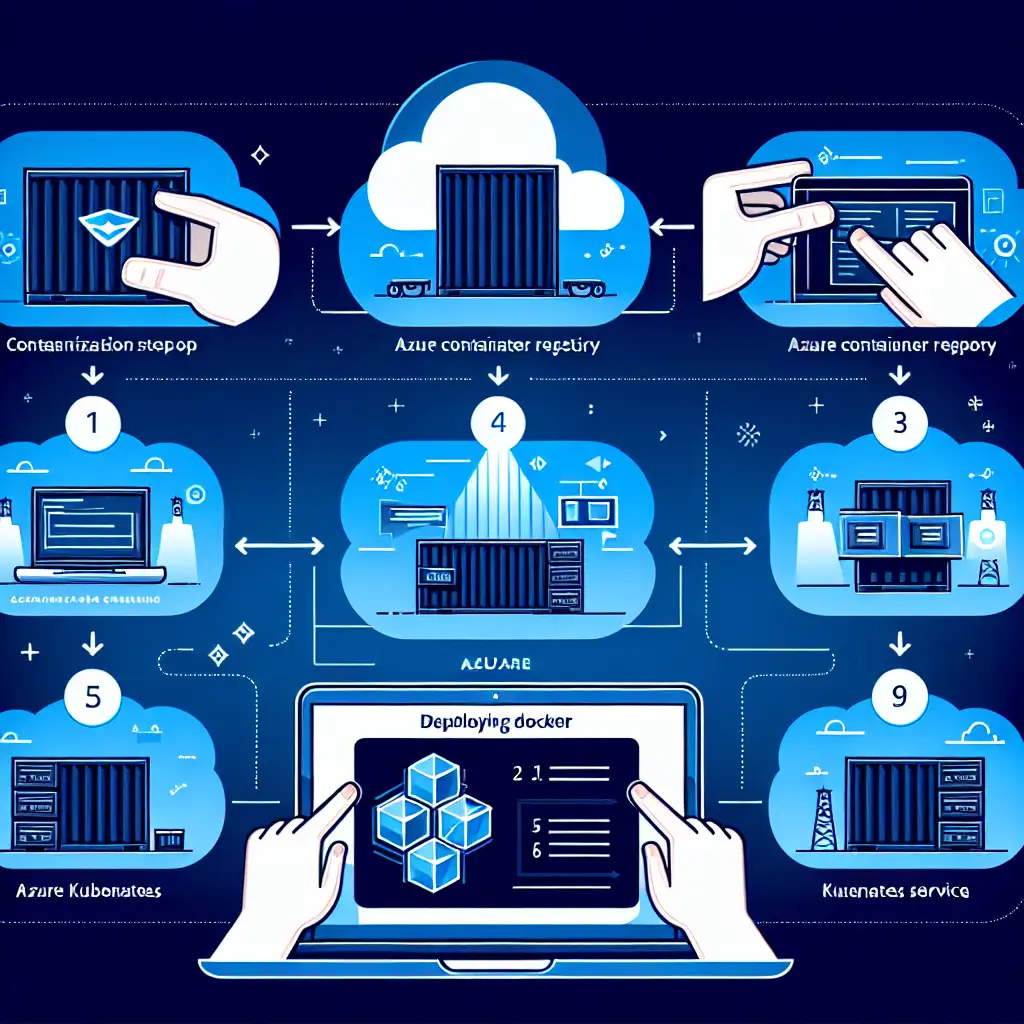

Mastering Seamless Docker Container Deployment to Azure: Beyond the Basics

Enterprises rarely run a single container in the cloud. Real-world workloads demand orchestration, automation, and observability, sometimes from day one. Not surprisingly, a bare `docker run` approach on Azure rarely survives the first real incident.

Where do problems start?

- Scaling microservices on demand (Monday traffic spike, anyone?)

- Secure, private image distribution

- In-cluster log correlation after a 2 a.m. outage

The workflows below are built for production, with code samples, pitfalls, and the settings that matter.

Containerization: Multi-Stage Builds for Production

Smaller container images improve both security (fewer packages to patch and exploit) and deployment speed (faster pulls onto new nodes). Multi-stage Dockerfiles are non-negotiable.

Example: Production-ready Node.js multi-stage build.

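```dockerfile
# Build stage: install all dependencies, including the devDependencies the build needs
FROM node:18.16-alpine3.18 AS build
WORKDIR /app
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build
# Drop devDependencies so only runtime packages are carried forward
RUN npm prune --omit=dev

# Final image: compiled output plus production dependencies only
FROM node:18.16-alpine3.18
ENV NODE_ENV=production
WORKDIR /app
COPY --from=build --chown=node:node /app/package*.json ./
COPY --from=build --chown=node:node /app/node_modules ./node_modules
COPY --from=build --chown=node:node /app/dist ./dist
USER node
EXPOSE 3000
CMD ["node", "dist/index.js"]
```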

Note: The separation of build and runtime contexts drastically reduces the attack surface and image size.

Gotcha: Alpine uses musl rather than glibc, so some npm dependencies with native extensions may require a glibc-based image (for example, a Debian slim Node.js variant) instead of Alpine.

Registry: Private Azure Container Registry (ACR) Setup

Move away from public registries (compliance, latency, security).

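Provision ACR (the Premium SKU is needed only for features such as private endpoints and geo-replication; Basic or Standard is enough otherwise):

```bash
az acr create \
  --resource-group my-rg-prod \
  --name myacrprod \
  --sku Premium
```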

Authenticate Docker to ACR:

```bash
az acr login --name myacrprod
```

Tag and push:

```bash
docker tag myapp:latest myacrprod.azurecr.io/myapp:1.0.0
docker push myacrprod.azurecr.io/myapp:1.0.0
```

Known Issue: ACR permissions can be tricky. A CI/CD service principal typically needs an explicit AcrPush role assignment on the registry; AcrPull is enough for identities that only pull images.

Orchestration: Deploying to Azure Kubernetes Service (AKS)

Bare containers aren't enough. Start with a minimal AKS cluster, but set the node size explicitly: the default (Standard_DS2_v2 in many CLI versions) is modest for production workloads.

```bash
az aks create \
  --resource-group my-rg-prod \
  --name myakscluster \
  --node-count 3 \
  --enable-addons monitoring \
  --generate-ssh-keys \
  --node-vm-size Standard_DS3_v2
```

Fetch the cluster credentials into your kubeconfig:

```bash
az aks get-credentials --resource-group my-rg-prod --name myakscluster
```

Multi-Container Kubernetes Deployment Example

deployment.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-logger
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-logger
  template:
    metadata:
      labels:
        app: web-logger
    spec:
      containers:
        - name: web
          image: myacrprod.azurecr.io/myapp:1.0.0
          ports:
            - containerPort: 3000
          env:
            - name: NODE_ENV
              value: "production"
          # Illustrative values; HPA CPU utilization is measured against these requests
          resources:
            requests:
              cpu: 250m
              memory: 256Mi
        - name: logger
          image: myacrprod.azurecr.io/logger:1.0.0
          ports:
            - containerPort: 514
          resources:
            requests:
              cpu: 50m
              memory: 64Mi
      imagePullSecrets:
        - name: acr-auth
```

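Manual step: Create the acr-auth Kubernetes secret on new clusters. The command below reads the registry's admin credentials, so the ACR admin user must be enabled (`az acr update -n myacrprod --admin-enabled true`). A cleaner alternative on AKS is `az aks update --resource-group my-rg-prod --name myakscluster --attach-acr myacrprod`, which grants the cluster's kubelet identity pull access and makes `imagePullSecrets` unnecessary.

```bash
kubectl create secret docker-registry acr-auth \
  --docker-server=myacrprod.azurecr.io \
  --docker-username="$(az acr credential show -n myacrprod --query username -o tsv)" \
  --docker-password="$(az acr credential show -n myacrprod --query 'passwords[0].value' -o tsv)"
```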

Deploy:

```bash
kubectl apply -f deployment.yaml
```

Expose with a public load balancer:

```bash
kubectl expose deployment web-logger --type=LoadBalancer --port=80 --target-port=3000
```

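Trade-off: Azure can take 1–2 minutes to assign the load balancer's public IP (watch `kubectl get service web-logger` until EXTERNAL-IP is populated). Services that must stay inside the virtual network need the `service.beta.kubernetes.io/azure-load-balancer-internal: "true"` annotation.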
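For reference, the same kind of Service can be declared as a manifest, which makes the internal variant explicit and reviewable. This is a minimal sketch: the name `web-logger-internal` is hypothetical, while the selector and ports mirror the `kubectl expose` command above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-logger-internal   # hypothetical name
  annotations:
    # Ask the Azure cloud provider for a private (VNet-internal) load balancer IP
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    app: web-logger
  ports:
    - protocol: TCP
      port: 80          # port exposed by the load balancer
      targetPort: 3000  # containerPort of the "web" container
```

Apply it with `kubectl apply -f` like any other manifest; the assigned address then comes from the cluster's subnet rather than a public IP.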

Autoscaling: Efficient Use of Compute

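Automatic scaling underpins both cost control and SLOs. Let Azure scale the nodes by enabling the cluster autoscaler (for example, `az aks update --resource-group my-rg-prod --name myakscluster --enable-cluster-autoscaler --min-count 3 --max-count 6`), and let the Horizontal Pod Autoscaler (HPA) drive pod replicas.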

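Metrics Server is required for HPA. AKS deploys it in the kube-system namespace by default, so on AKS you only need to confirm it is running:

```bash
# AKS ships Metrics Server by default; verify it is running
kubectl get deployment metrics-server -n kube-system

# Only on clusters without it: install the upstream release
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml
```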

Configure HPA:

```bash
kubectl autoscale deployment web-logger \
  --cpu-percent=65 --min=3 --max=12
```

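Non-obvious tip: HPA computes CPU utilization as a percentage of each container's CPU request, so if any container in the pod lacks `resources.requests.cpu`, the target shows `<unknown>` and nothing scales. Out of the box, HPA tracks only CPU and memory resource metrics (and `kubectl autoscale` sets only the CPU target). For custom metrics such as queue length, extend it with Prometheus Adapter.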
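Because `kubectl autoscale` only sets a CPU target, a declarative `autoscaling/v2` manifest is the usual way to add memory as a second resource metric. The sketch below reuses the 65% CPU target and the 3 to 12 replica range from above; the 75% memory target is a hypothetical value. Apply either this manifest or the `kubectl autoscale` command, not both, since both create an HPA named `web-logger`.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-logger
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-logger
  minReplicas: 3
  maxReplicas: 12
  metrics:
    # Same CPU target as the kubectl autoscale command
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 65
    # Hypothetical memory target, measured against memory requests
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 75
```

When several metrics are listed, the HPA computes a desired replica count for each and uses the largest, so memory pressure can scale the Deployment even while CPU is idle.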

CI/CD Integration: Building, Pushing, and Deploying Automatically

Real production: Every commit triggers automated container build, registry push, and AKS deploy.

Sample Azure Pipelines YAML fragment:

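```yaml
trigger:
  - main

pool:
  vmImage: 'ubuntu-22.04'

steps:
  - task: Docker@2
    displayName: Build and Push app
    inputs:
      command: buildAndPush
      containerRegistry: $(ACR_SERVICE_CONNECTION)
      # The service connection supplies the login server, so this is the bare repository name
      repository: myapp
      dockerfile: 'Dockerfile'
      # Unique tag per run; a constant tag never changes the pod spec
      tags: $(Build.BuildId)

  - task: AzureCLI@2
    displayName: Deploy to AKS
    inputs:
      # Hypothetical variable holding an Azure Resource Manager service connection name
      azureSubscription: $(AZURE_SERVICE_CONNECTION)
      scriptType: bash
      scriptLocation: inlineScript
      inlineScript: |
        az aks get-credentials --resource-group my-rg-prod --name myakscluster
        # Point the manifest at the image built in this run, then apply it
        sed -i "s|myacrprod.azurecr.io/myapp:.*|myacrprod.azurecr.io/myapp:$(Build.BuildId)|" deployment.yaml
        kubectl apply -f deployment.yaml
```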

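Note: Tag each build with a unique value (such as the build ID) and update the manifest to match. Re-applying an unchanged `latest` or `1.0.0` reference leaves the pod template unchanged, so no rollout happens and the cluster keeps running the stale image.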
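One way to inject the tag without hand-editing deployment.yaml is a Kustomize `images` override. This sketch assumes a `kustomization.yaml` sitting next to `deployment.yaml`; the `newTag` value is a placeholder that the pipeline would overwrite on each run.

```yaml
# kustomization.yaml (assumed to live next to deployment.yaml)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - deployment.yaml
images:
  # Matched by image name, whatever tag deployment.yaml currently carries
  - name: myacrprod.azurecr.io/myapp
    newTag: "1.0.0"   # placeholder, replaced per build
```

A pipeline step could then run `kustomize edit set image myacrprod.azurecr.io/myapp:$(Build.BuildId)` (standalone kustomize CLI) followed by `kubectl apply -k .`, keeping the base manifest free of per-build edits.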

Observability: Monitoring and Logging

Skip this, and unresolved failures at 3 a.m. are inevitable.

- Enable Azure Monitor for containers: the agent installs automatically with `--enable-addons monitoring`, but customization (log retention, workspace selection) is done through the Azure Portal or CLI.

- Query logs: `KubePodInventory` holds pod state rather than log lines. To pull recent output from the `web` container in Log Analytics (assuming the ContainerLogV2 schema is enabled), a typical query is `ContainerLogV2 | where ContainerName == "web" | order by TimeGenerated desc | take 50`.

- Set up alerts: unready pod thresholds, excessive restarts, or memory pressure.

Side effect: Enabling extra diagnostic logs may marginally impact cluster performance; always test in staging first.

Final Considerations

Deploying Docker containers to Azure at production scale starts with deliberate image hygiene and finishes with observability and automation. Kubernetes isn't always simple, but with proper ACR integration, HPA tuning, and end-to-end deployment pipelines, operational issues become manageable rather than surprising.

ASCII diagram for context:

```text
[Source Repo] --push--> [Azure DevOps] --build/push--> [ACR] --pull--> [AKS Nodes] --serve--> [Users]
```

For persistent workloads, think beyond stateless models—Azure Files with CSI drivers or managed database services can be integrated, but those require their own tuning.
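As a starting point for Azure Files, a claim against AKS's built-in `azurefile-csi` storage class might look like the sketch below; the claim name `shared-data` and the 10Gi size are hypothetical. `ReadWriteMany` is what Azure Files offers over Azure Disks: several pods can mount the same share at once.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-data   # hypothetical claim name
spec:
  accessModes:
    - ReadWriteMany   # shared across pods and nodes
  storageClassName: azurefile-csi
  resources:
    requests:
      storage: 10Gi   # hypothetical size
```

Pods would reference it under `spec.volumes` with `persistentVolumeClaim.claimName: shared-data` and mount it through `volumeMounts`; throughput and latency tuning remain a separate exercise.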

Practical tip: Node pool upgrades aren’t completely zero-downtime unless you use PodDisruptionBudgets and readiness/liveness probes correctly.
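A minimal PodDisruptionBudget for the `web-logger` Deployment could look like this. `minAvailable: 2` is an assumed value that keeps at least two of the three baseline replicas serving while nodes are drained during an upgrade; the name `web-logger-pdb` is hypothetical.

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: web-logger-pdb   # hypothetical name
spec:
  # Evictions that would drop below two ready pods are refused during drains
  minAvailable: 2
  selector:
    matchLabels:
      app: web-logger
```

A PDB protects availability only if readiness is accurate, so pair it with a `readinessProbe` on the `web` container (for example, an `httpGet` check on port 3000 against a health endpoint your app exposes). Avoid setting `minAvailable` equal to the replica count, or node drains will stall.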

Need a working Helm chart or persistent storage example? That’s a larger discussion. For straightforward stateless microservice deployments, the process above remains robust—and mostly future-proof.