Mastering Seamless Docker Container Deployment to Azure: Beyond the Basics

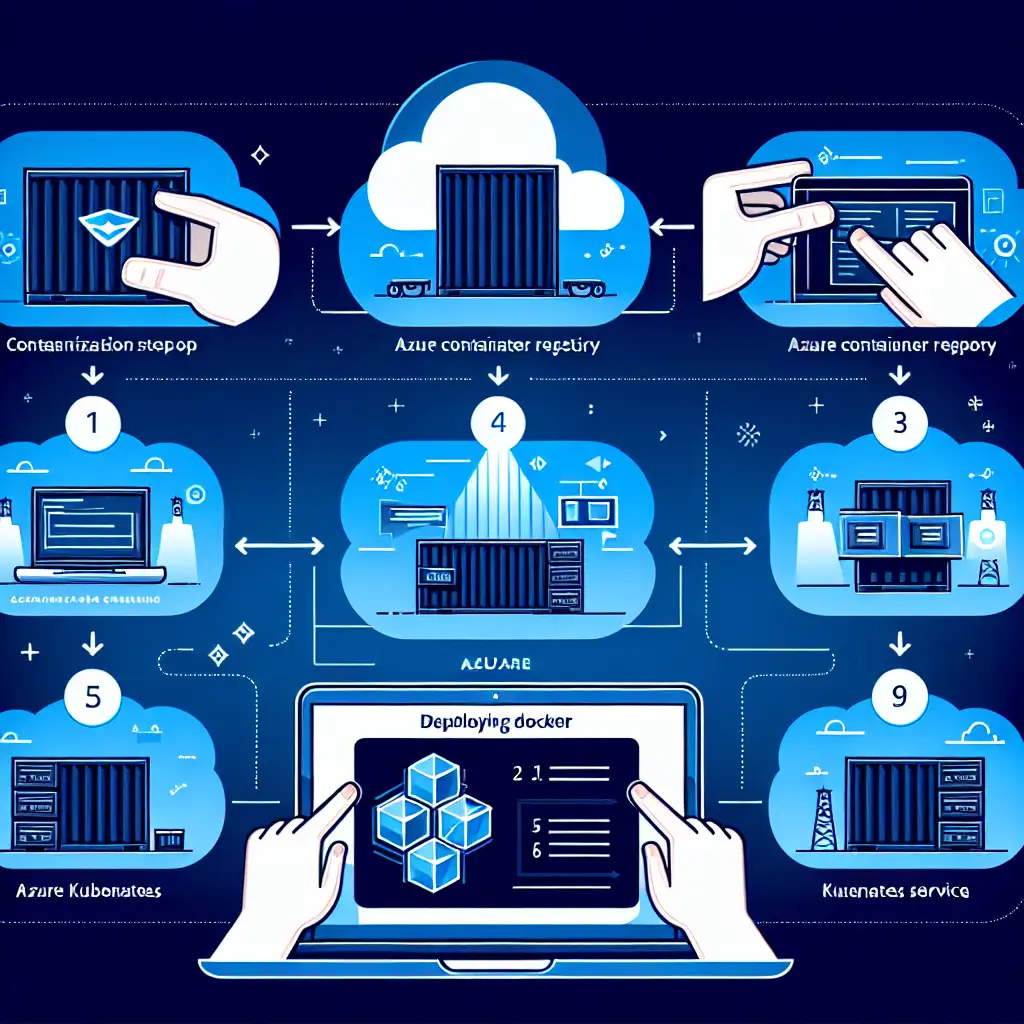

Deploying Docker containers to Azure efficiently is what lets an application scale and stay reliable under real business load. Many guides cover basic container deployment, but real-world scenarios demand more: orchestrating multi-container apps, scaling dynamically, and keeping costs under control.

This post covers advanced deployment strategies for Docker containers on Azure. Whether you’re managing complex microservices or streamlining your DevOps workflow, this practical guide walks through the steps that take you past the basics.

Why Go Beyond Basic Docker-to-Azure Deployment?

Most tutorials get you started by deploying a single container to Azure App Service or Azure Container Instances (ACI). That’s fine for a simple proof of concept, but it quickly falls short for production workloads that:

- Require multi-container apps working in unison

- Demand automated scaling based on load

- Need integration with Azure DevOps pipelines for CI/CD

- Must keep cloud costs down without sacrificing performance

To meet these demands, you’ll want Azure Kubernetes Service (AKS), Azure Container Registry (ACR), and other native Azure services, which together let you build robust, scalable, and maintainable containerized applications.

Step 1: Containerize Your Application the Right Way

Before deploying to Azure, ensure your application is properly containerized using multi-stage Dockerfiles. This results in smaller images and faster deployment.

Example: Multi-stage Dockerfile for Node.js app

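    # Build stage: install all dependencies (including devDependencies) and compile
    FROM node:18-alpine AS build
    WORKDIR /app
    COPY package*.json ./
    RUN npm install
    COPY . .
    RUN npm run build

    # Production stage: runtime dependencies and compiled output only
    FROM node:18-alpine
    WORKDIR /app
    COPY package*.json ./
    RUN npm install --omit=dev
    COPY --from=build /app/dist ./dist
    EXPOSE 3000
    CMD ["node", "dist/index.js"]

The build stage installs every dependency, including the devDependencies that the build step needs, and compiles the app. The production stage starts from a clean base image, installs only the runtime dependencies, and copies in the compiled dist directory, so build tooling and source files never reach the final image.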
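
Because the build stage copies the whole build context into the image, anything lying around in the project directory can end up in the build. A .dockerignore file next to the Dockerfile keeps such files out. The sketch below writes one from the shell; the entries are assumptions about a typical Node.js project layout, not something this setup prescribes.

```shell
#!/bin/sh
# Sketch: create a .dockerignore next to the Dockerfile so that `COPY . .`
# does not pull host-side artifacts into the build stage.
# The entries are assumed defaults for a Node.js project; adjust as needed.
cat > .dockerignore <<'EOF'
node_modules
dist
.git
npm-debug.log
.env
EOF

echo "wrote .dockerignore"
```

Excluding node_modules matters most here: it keeps a host-installed dependency tree from overwriting the one that npm installed inside the container.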

Step 2: Push Images to Azure Container Registry (ACR)

Azure Container Registry is a private Docker registry that integrates tightly with Azure services.

- Create an ACR:

      az acr create --resource-group myResourceGroup --name myContainerRegistry --sku Basic

- Log in to ACR from your local machine:

      az acr login --name myContainerRegistry

- Tag and push your local Docker image:

      docker tag myapp mycontainerregistry.azurecr.io/myapp:v1
      docker push mycontainerregistry.azurecr.io/myapp:v1

Storing images in ACR keeps them private and in the same Azure environment as your workloads, so services like AKS can pull them directly once they have been granted pull access to the registry.
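
Note that the registry was created as myContainerRegistry but the image is tagged with a lowercase host name. Docker repository references must be lowercase, and the registry’s login server is the lowercased registry name followed by azurecr.io. The sketch below composes the full image reference from its parts. The helper acr_image_ref is hypothetical (it is not part of the Azure CLI), and the azurecr.io suffix assumes the public Azure cloud.

```shell
#!/bin/sh
# Sketch: build the fully qualified ACR image reference from its parts.
# acr_image_ref is a hypothetical helper, not part of the Azure CLI.
acr_image_ref() {
  registry=$1   # registry name as passed to `az acr create`
  repo=$2       # repository (image) name
  tag=$3        # image tag
  # Docker repository references must be lowercase, so normalize the
  # registry name before appending the azurecr.io login-server suffix.
  login_server="$(printf '%s' "$registry" | tr '[:upper:]' '[:lower:]').azurecr.io"
  printf '%s/%s:%s\n' "$login_server" "$repo" "$tag"
}

IMAGE=$(acr_image_ref myContainerRegistry myapp v1)
echo "$IMAGE"   # prints mycontainerregistry.azurecr.io/myapp:v1

# With Docker available and after `az acr login`, the push would then be:
#   docker tag myapp "$IMAGE" && docker push "$IMAGE"
```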

Step 3: Deploy Multi-Container Apps Using Azure Kubernetes Service (AKS)

For advanced scenarios, AKS is your go-to managed Kubernetes offering on Azure. It handles container orchestration, ensuring high availability, load balancing, and scaling.

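Quick AKS cluster setup:

    az aks create --resource-group myResourceGroup --name myAKSCluster --node-count 3 --enable-addons monitoring --attach-acr myContainerRegistry --generate-ssh-keys

    az aks get-credentials --resource-group myResourceGroup --name myAKSCluster

The --attach-acr flag grants the cluster pull access to the registry from Step 2, so pods can pull images without separate image pull secrets. The second command merges the cluster’s credentials into your local kubeconfig so that kubectl targets the new cluster.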

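Create a Kubernetes Deployment manifest (deployment.yaml) for your multi-container app. Both containers run in the same pod, so they share a network namespace and scale together:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: multi-container-app
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: multi-container-app
      template:
        metadata:
          labels:
            app: multi-container-app
        spec:
          containers:
            - name: web
              image: mycontainerregistry.azurecr.io/myapp:v1
              ports:
                - containerPort: 3000
              resources:
                requests:
                  cpu: 250m   # placeholder value; needed for CPU-based autoscaling
            - name: sidecar-logger
              image: mycontainerregistry.azurecr.io/logger:v1
              ports:
                - containerPort: 5000
              resources:
                requests:
                  cpu: 100m   # placeholder value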

Deploy it with:

    kubectl apply -f deployment.yaml

Then expose it via a LoadBalancer service:

    kubectl expose deployment multi-container-app --type=LoadBalancer --port=80 --target-port=3000

With three replicas behind an Azure load balancer, the app keeps serving if a single pod fails, and external traffic on port 80 is forwarded to the web container on port 3000.
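
The kubectl expose command above creates the Service imperatively. If you prefer to keep everything in version control, a declarative manifest can sit next to deployment.yaml. The sketch below writes a roughly equivalent Service; the file name service.yaml is an assumption, and the name and selector mirror what kubectl expose derives from the Deployment.

```shell
#!/bin/sh
# Sketch: write a Service manifest roughly equivalent to the
# `kubectl expose` command above. The file name is an assumption.
cat > service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: multi-container-app
spec:
  type: LoadBalancer
  selector:
    app: multi-container-app   # must match the pod labels in the Deployment
  ports:
    - port: 80                 # port exposed by the Azure load balancer
      targetPort: 3000         # containerPort of the web container
EOF

echo "wrote service.yaml"

# Then apply it alongside the Deployment:
#   kubectl apply -f service.yaml
```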

Step 4: Enable Autoscaling to Optimize Costs

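To handle varying load without paying for idle capacity, configure a Horizontal Pod Autoscaler (HPA) in AKS.

    kubectl autoscale deployment multi-container-app --cpu-percent=50 --min=3 --max=10

This tells Kubernetes to target an average CPU utilization of 50% across the pods, scaling between 3 and 10 replicas as demand changes. Utilization is measured against each container’s CPU request, so every container in the pod must declare one or the autoscaler has nothing to compute. The HPA only adds and removes pods; to reduce the Azure bill as well, pair it with the AKS cluster autoscaler so the node count follows the pod count.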
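
To make the scaling behavior concrete, the rule documented for the HPA is: desired replicas = ceil(current replicas × current utilization / target utilization), clamped to the configured minimum and maximum. The sketch below reproduces that arithmetic for the settings in the autoscale command above. The helper hpa_desired_replicas is hypothetical, and it deliberately ignores the controller’s tolerance band, stabilization windows, and handling of unready pods.

```shell
#!/bin/sh
# Sketch of the HPA scaling rule:
#   desired = ceil(current_replicas * current_cpu / target_cpu)
# clamped to [min, max]. hpa_desired_replicas is a hypothetical helper;
# the real controller also applies a tolerance band and stabilization.
hpa_desired_replicas() {
  current=$1; cpu=$2; target=$3; min=$4; max=$5
  # Integer ceiling division: (a + b - 1) / b
  desired=$(( (current * cpu + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}

hpa_desired_replicas 3 90 50 3 10     # 3 pods at 90% CPU: ceil(5.4) -> 6
hpa_desired_replicas 4 10 50 3 10     # 4 pods at 10% CPU: ceil(0.8) = 1, raised to min -> 3
hpa_desired_replicas 10 120 50 3 10   # 10 pods at 120% CPU: 24, capped at max -> 10
```

The last case shows why the maximum matters for cost: once the cap is reached, sustained load above the target stays above the target until you raise the limit.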

Step 5: Integrate CI/CD With Azure DevOps Pipelines

Manual deployment can be tedious and error-prone. Automate your workflows by linking your Git repository, building Docker images, pushing to ACR, and updating AKS using Azure DevOps pipelines.

Sample Azure Pipelines YAML snippet to build and push Docker images:

    trigger:
      - main

    pool:
      vmImage: 'ubuntu-latest'

    steps:
      - task: Docker@2
        inputs:
          containerRegistry: 'myACRServiceConnection'
          repository: 'myapp'   # registry host comes from the service connection
          command: 'buildAndPush'
          Dockerfile: '**/Dockerfile'
          tags: |
            $(Build.BuildId)

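To complete the automation loop, add steps that apply your Kubernetes manifests to AKS with the newly pushed image tag, for example with the KubernetesManifest task or a script step that runs kubectl.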
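
One way to close that loop from a script step is to rewrite the image tag in deployment.yaml before applying it. The following is a minimal sketch under stated assumptions: set_image_tag is a hypothetical helper, the manifest is assumed to reference the image on a single "image:" line, and the kubectl call is left as a comment because it needs cluster credentials.

```shell
#!/bin/sh
# Sketch of a deploy step: point the web container at the tag that the
# build step just pushed. set_image_tag is a hypothetical helper.
set_image_tag() {
  manifest=$1; image=$2; tag=$3
  # Replace whatever tag follows "<image>:" with the new one and print
  # the result to stdout, leaving the original file untouched.
  sed "s|\(image: ${image}\):.*|\1:${tag}|" "$manifest"
}

# Azure Pipelines exposes $(Build.BuildId) to scripts as BUILD_BUILDID;
# fall back to "local" when running outside a pipeline.
TAG=${BUILD_BUILDID:-local}

# Demo on a one-line stand-in for deployment.yaml.
printf '        image: mycontainerregistry.azurecr.io/myapp:v1\n' > demo-deployment.yaml
set_image_tag demo-deployment.yaml mycontainerregistry.azurecr.io/myapp "$TAG"

# In the pipeline, the rendered manifest would be piped to the cluster:
#   set_image_tag deployment.yaml mycontainerregistry.azurecr.io/myapp "$TAG" | kubectl apply -f -
```

Tagging each image with the build ID, rather than reusing a fixed tag, means every rollout changes the pod spec, so Kubernetes performs a rolling update and you can trace a running pod back to the build that produced it.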

Step 6: Monitor and Troubleshoot Using Azure Monitor & Log Analytics

Finally, stay proactive by monitoring container health, logs, and resource consumption:

- Enable Container insights (Azure Monitor for containers) on your AKS cluster; the monitoring add-on enabled in Step 3 does this.

- Use the Log Analytics workspace to query container logs.

- Set up alerts for CPU spikes or pod failures.

This real-time insight keeps your deployments reliable and performant.
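
As a starting point for log queries, the sketch below saves a Log Analytics (KQL) query that looks for recent error lines from the web container. It assumes Container insights is writing to the ContainerLogV2 table, which depends on how the add-on is configured; older setups use the ContainerLog table with different column names. The file name and the WORKSPACE_ID variable are placeholders.

```shell
#!/bin/sh
# Sketch: save a Log Analytics (KQL) query for recent error lines from the
# web container. Assumes Container insights writes to the ContainerLogV2
# table; older setups use ContainerLog with different column names.
cat > web-errors.kql <<'EOF'
ContainerLogV2
| where TimeGenerated > ago(1h)
| where PodName startswith "multi-container-app" and ContainerName == "web"
| where tostring(LogMessage) contains "error"
| project TimeGenerated, PodName, LogMessage
| order by TimeGenerated desc
| take 50
EOF

echo "saved query to web-errors.kql"

# The query can be pasted into the Logs view of the workspace, or run from
# the CLI (WORKSPACE_ID is a placeholder for the workspace GUID):
#   az monitor log-analytics query --workspace "$WORKSPACE_ID" \
#     --analytics-query "$(cat web-errors.kql)"
```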

Conclusion

Deploying Docker containers to Azure is more than just running docker run in the cloud. Mastery means architecting multi-container applications with AKS, automating builds and deployments, intelligently scaling workloads, and leveraging Azure’s rich ecosystem for monitoring and security.

By going beyond basic deployments, you unlock the true power of Azure cloud to run scalable, resilient, and cost-effective containerized applications — ready for the rigors of modern production environments.

Start experimenting with these steps today and turn your Azure container deployments from simple demos into enterprise-grade cloud-native solutions.
