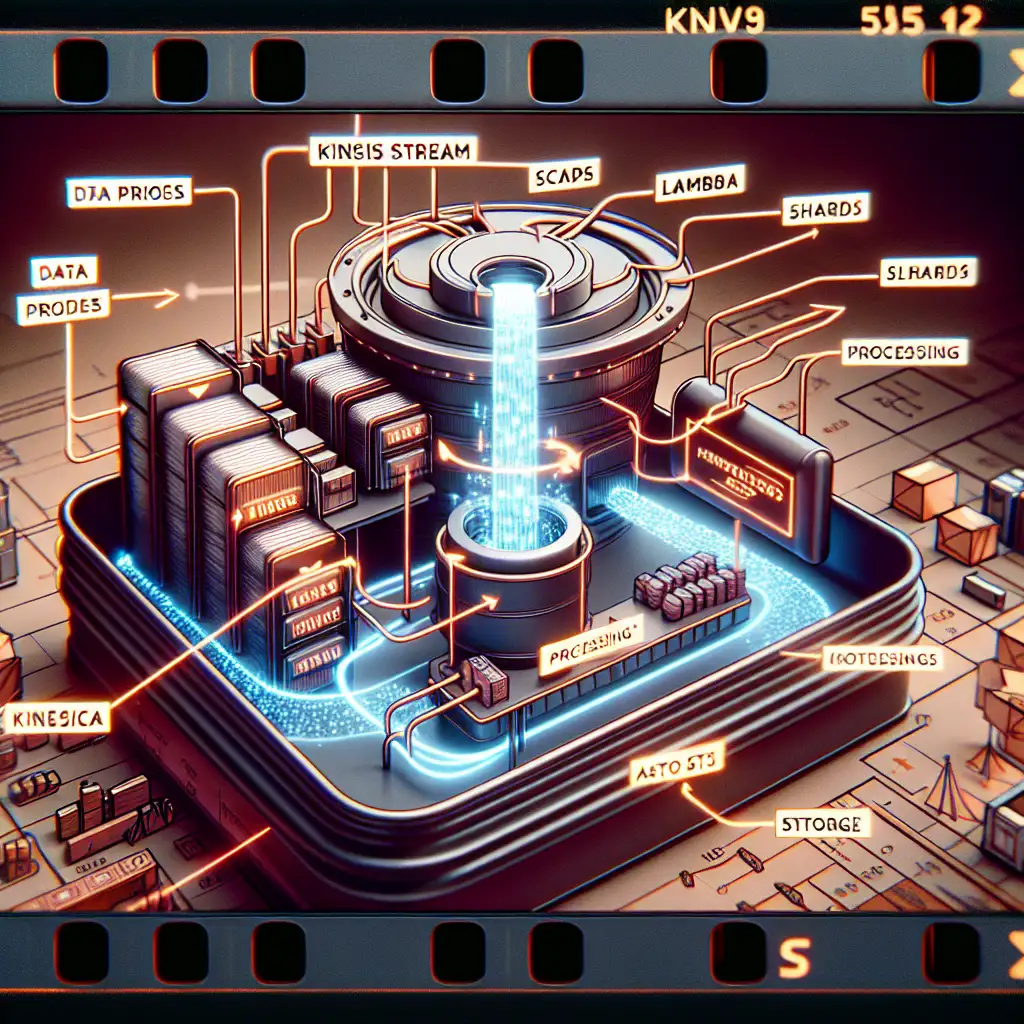

Architecting a Cost-Effective, Scalable AWS Kinesis-to-S3 Data Pipeline for Real-Time Analytics

Typical streaming architectures either hemorrhage budget through over-provisioning or compromise reliability by gluing together brittle services. Consider a common scenario: high-throughput application logs, IoT telemetry, or web clickstreams that need near-real-time ingestion and efficient storage for later analysis. A robust, cost-aware pipeline from AWS Kinesis Data Streams to Amazon S3 addresses this with proven native components.

Why Use AWS Kinesis and S3 Together?

Kinesis Data Streams (shards for scalable, parallel ingestion) fits naturally as an entry point for real-time data — API logs, financial ticks, and sensor feeds, among others. S3 provides cost-efficient, durable storage with virtually unlimited capacity, tight integration with AWS analytics tools (Athena, EMR, Redshift Spectrum), and straightforward partitioning.

Storing data raw is rarely enough: transformation, error handling, and partitioning prepare the data for downstream consumption. Here’s how to orchestrate a reliable pipeline using these AWS-native building blocks.

1. Kinesis Data Stream: Ingestion Backbone

Start by provisioning a Kinesis Data Stream sized to your initial workload. Each shard delivers up to 1MB/sec write and 2MB/sec read throughput, plus up to 1,000 PUT records/sec. Optimize for your use case — e.g., heavy logs often warrant 4–8 shards at launch; low-volume telemetry might need only one.
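
The sizing rule above can be turned into a quick back-of-the-envelope calculation. The sketch below is only an illustration: the function name `estimate_shards` and the 25% `headroom` default are assumptions, not AWS settings, and the per-shard limits are the ones quoted in the paragraph.

```python
import math

# Per-shard write limits quoted in the text above.
SHARD_WRITE_MB_PER_SEC = 1.0
SHARD_WRITE_RECORDS_PER_SEC = 1000


def estimate_shards(peak_mb_per_sec, peak_records_per_sec, headroom=0.25):
    """Return the shard count needed for the peak write load plus headroom.

    `headroom` is a hypothetical safety margin (25% by default), not an AWS setting.
    """
    by_bytes = peak_mb_per_sec * (1 + headroom) / SHARD_WRITE_MB_PER_SEC
    by_records = peak_records_per_sec * (1 + headroom) / SHARD_WRITE_RECORDS_PER_SEC
    # Whichever limit is hit first (bytes or record count) determines the shard count.
    return max(1, math.ceil(max(by_bytes, by_records)))


if __name__ == "__main__":
    # Heavy logs: 3 MB/sec and 2,500 records/sec at peak.
    print(estimate_shards(peak_mb_per_sec=3.0, peak_records_per_sec=2500))
    # Low-volume telemetry: 0.2 MB/sec and 150 records/sec at peak.
    print(estimate_shards(peak_mb_per_sec=0.2, peak_records_per_sec=150))
```

Many small records can exhaust the records/sec limit long before the byte limit, which is why the sketch takes the larger of the two estimates.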

Implementation:

    aws kinesis create-stream \
      --stream-name app-telemetry-v1 \
      --shard-count 4

Monitor GetRecords.IteratorAgeMilliseconds in CloudWatch — if the metric grows, partitioning or processing is lagging. (Side Note: Sudden ProvisionedThroughputExceededException errors typically indicate mis-sized—or "hot"—shards.)

Partition keys: Choose a field that distributes load — region, deviceId, or customerId. A "power-user" partition key will bottleneck that shard.
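
To see why a dominant key creates a hot shard, it can help to simulate the routing. Kinesis maps each partition key to a 128-bit hash key with MD5 and routes the record to the shard whose hash range contains it. The sketch below assumes the shards split the hash space evenly (as a freshly created stream does); the helper names and sample keys are illustrative only.

```python
import hashlib
from collections import Counter

HASH_SPACE = 2 ** 128  # MD5 yields a 128-bit integer hash key


def shard_for_key(partition_key, shard_count):
    """Map a partition key to a shard index, assuming evenly split hash ranges."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_key = int.from_bytes(digest, "big")
    return hash_key * shard_count // HASH_SPACE


def shard_load(keys, shard_count):
    """Count how many records land on each shard for a sequence of partition keys."""
    counts = Counter(shard_for_key(k, shard_count) for k in keys)
    return [counts.get(i, 0) for i in range(shard_count)]


if __name__ == "__main__":
    balanced = [f"device-{i}" for i in range(10_000)]
    # One "power-user" key produces 90% of the traffic.
    skewed = ["power-user"] * 9_000 + [f"device-{i}" for i in range(1_000)]
    print("balanced:", shard_load(balanced, 4))
    print("skewed:  ", shard_load(skewed, 4))
```

In the skewed case one shard receives at least 9,000 of the 10,000 records regardless of how many shards exist, so adding shards alone does not relieve the bottleneck.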

2. Kinesis Data Firehose: Automated Delivery

For durable, managed sinking to S3, Kinesis Data Firehose (PUT records, buffering, retries, format conversion) provides a higher-level abstraction than direct consumers.

Firehose can read directly from a Kinesis Data Stream as its source (check region support), which decouples producers from delivery. Buffering is tunable by size (e.g., 1–128MB) and time (e.g., 60–900 seconds), and Firehose flushes when either threshold is reached first; smaller buffers decrease latency at the cost of more S3 PUT operations (and therefore higher request cost).

Key configuration fields:

- Source: Kinesis Data Stream (use direct ingestion only when simplicity outweighs the need for replay or additional consumers).

- Buffer Size: 1MB (low-latency) or 5MB+ (cost-efficient).

- Destination: S3 URI (versioned buckets recommended for rollback).

- Compression: Enable GZIP to reduce storage size, especially if downstream tools support decompression.

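- Format conversion (optional): JSON to Parquet/ORC. Parquet cuts both storage and query cost if Athena usage is expected. Conversion requires a table schema in the AWS Glue Data Catalog and raises the minimum buffer size to 64MB.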

Example (AWS Console or Terraform preferred, for clarity—see the attached table for config fields.)
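
For readers who prefer scripting over the console, the configuration fields listed above map onto the request for boto3's Firehose `create_delivery_stream` call. The following is a minimal sketch, not a complete deployment: the ARNs in the usage section are placeholders, the helper names are hypothetical, and IAM permissions, Lambda processing, and prefixes are left out.

```python
import json


def build_delivery_stream_request(name, stream_arn, bucket_arn, role_arn,
                                  buffer_mb=5, buffer_seconds=300):
    """Build keyword arguments for boto3's firehose create_delivery_stream call."""
    # Ranges taken from the buffering limits described in the text above.
    if not 1 <= buffer_mb <= 128:
        raise ValueError("buffer_mb must be between 1 and 128")
    if not 60 <= buffer_seconds <= 900:
        raise ValueError("buffer_seconds must be between 60 and 900")
    return {
        "DeliveryStreamName": name,
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": stream_arn,
            "RoleARN": role_arn,
        },
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,
            "BucketARN": bucket_arn,
            "BufferingHints": {
                "SizeInMBs": buffer_mb,
                "IntervalInSeconds": buffer_seconds,
            },
            "CompressionFormat": "GZIP",
        },
    }


def create_delivery_stream(request):
    """Submit the request. Needs boto3 and AWS credentials, so it is not called here."""
    import boto3  # imported lazily so the builder works without boto3 installed
    return boto3.client("firehose").create_delivery_stream(**request)


if __name__ == "__main__":
    # Placeholder ARNs for illustration only.
    request = build_delivery_stream_request(
        name="app-telemetry-v1-to-s3",
        stream_arn="arn:aws:kinesis:us-east-1:123456789012:stream/app-telemetry-v1",
        bucket_arn="arn:aws:s3:::telemetry-archive",
        role_arn="arn:aws:iam::123456789012:role/example-firehose-role",
    )
    print(json.dumps(request, indent=2))
```

Keeping the request builder separate from the API call makes the configuration easy to review and unit-test before anything is created in an account.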

Known issue: Firehose delivers only the first 5xx error message for S3 failures. Custom metrics via Lambda may be required for granular failure diagnosis.

3. Inline Transformations Using Lambda

Batched, serverless transformations are supported natively within Firehose. For high-throughput, lightweight processing — field filtering, type casting, schema adaptation — use Lambda. Example: enrich clickstream records with geo data, or filter by event type.

Sample Lambda (Python 3.9 runtime):

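    import base64
    import json
    from datetime import datetime, timezone


    def lambda_handler(event, context):
        """Firehose transform: keep 'purchase' events, drop everything else."""
        output = []
        for rec in event['records']:
            try:
                d = json.loads(base64.b64decode(rec['data']).decode('utf-8'))
            except ValueError:
                # Unparseable payload: optionally log the error, then drop the record.
                output.append({'recordId': rec['recordId'], 'result': 'Dropped', 'data': rec['data']})
                continue

            if isinstance(d, dict) and d.get('type') == 'purchase':
                # Stamp the record with the processing time (UTC, ISO 8601).
                d['etl_timestamp'] = datetime.now(timezone.utc).isoformat()
                # A trailing newline keeps the S3 objects line-delimited for downstream readers.
                enc = base64.b64encode((json.dumps(d) + '\n').encode('utf-8')).decode('utf-8')
                output.append({'recordId': rec['recordId'], 'result': 'Ok', 'data': enc})
            else:
                output.append({'recordId': rec['recordId'], 'result': 'Dropped', 'data': rec['data']})

        # Every incoming recordId must appear exactly once in the response.
        return {'records': output}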

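Trade-off: Each Lambda invocation must keep its request and response payloads under 6MB; anything slower than a few hundred ms/record will bottleneck Firehose and, if the lag outlasts the stream's retention period, risk data loss (monitor KinesisMillisBehindLatest and DeliveryToS3.DataFreshness).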
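
Before deploying a transformation, its output can be checked locally against the contract Firehose expects: every incoming `recordId` returned once, a valid `result` value, and a response under the payload limit. The validator below is a hypothetical helper sketched for that purpose; the 6 MB constant approximates the limit cited above.

```python
import base64
import json

MAX_PAYLOAD_BYTES = 6 * 1024 * 1024  # approximation of the 6 MB limit cited above
VALID_RESULTS = {"Ok", "Dropped", "ProcessingFailed"}


def validate_transform_response(event, response):
    """Return a list of problems found in a Firehose transform response (empty if none)."""
    problems = []
    records = response.get("records", [])
    expected = sorted(r["recordId"] for r in event["records"])
    returned = sorted(str(r.get("recordId")) for r in records)
    if expected != returned:
        problems.append("recordId mismatch between event and response")
    for r in records:
        if r.get("result") not in VALID_RESULTS:
            problems.append(f"invalid result for {r.get('recordId')}: {r.get('result')}")
    size = len(json.dumps(response).encode("utf-8"))
    if size > MAX_PAYLOAD_BYTES:
        problems.append(f"response is {size} bytes, over the payload limit")
    return problems


if __name__ == "__main__":
    payload = base64.b64encode(b'{"type": "purchase"}').decode("utf-8")
    event = {"records": [{"recordId": "1", "data": payload},
                         {"recordId": "2", "data": payload}]}
    good = {"records": [{"recordId": "1", "result": "Ok", "data": payload},
                        {"recordId": "2", "result": "Dropped", "data": payload}]}
    bad = {"records": [{"recordId": "1", "result": "Skipped", "data": payload}]}
    print(validate_transform_response(event, good))
    print(validate_transform_response(event, bad))
```

Running a check like this against sample events in CI catches missing or mislabeled records before they surface as delivery failures.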

4. Firehose-To-S3 Delivery: Layout and Buffering

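Set the Firehose destination to S3; specify a bucket with clear, timestamp-based partitioning. By default Firehose writes objects under a UTC YYYY/MM/dd/HH/ prefix; a custom prefix built from timestamp expressions (e.g., year=!{timestamp:yyyy}/) produces Hive-style partitions that Athena can use directly.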

Recommended S3 prefix:

s3://telemetry-archive/app-telemetry-v1/year=2024/month=06/day=15/hour=10/
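
A layout like this is typically produced with a Firehose custom prefix built from `!{timestamp:...}` expressions, and consumers often need to compute the same prefix to locate a given hour. The sketch below shows both; the base path and the error prefix are assumptions chosen to match the example, and `partition_prefix` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

# Firehose custom-prefix expression that yields the Hive-style layout shown above.
FIREHOSE_PREFIX = (
    "app-telemetry-v1/"
    "year=!{timestamp:yyyy}/month=!{timestamp:MM}/"
    "day=!{timestamp:dd}/hour=!{timestamp:HH}/"
)
# An error prefix is normally set alongside an expression-based prefix (assumed path).
FIREHOSE_ERROR_PREFIX = "errors/app-telemetry-v1/!{firehose:error-output-type}/"


def partition_prefix(ts, base="app-telemetry-v1"):
    """Render the S3 key prefix for the hour containing `ts`.

    `ts` should be timezone-aware; it is converted to UTC, which Firehose uses for timestamps.
    """
    ts = ts.astimezone(timezone.utc)
    return f"{base}/year={ts:%Y}/month={ts:%m}/day={ts:%d}/hour={ts:%H}/"


if __name__ == "__main__":
    utc_time = datetime(2024, 6, 15, 10, 30, tzinfo=timezone.utc)
    print(partition_prefix(utc_time))
    # A local time two hours ahead of UTC lands in the previous day's partition.
    local_time = datetime(2024, 6, 15, 1, 0, tzinfo=timezone(timedelta(hours=2)))
    print(partition_prefix(local_time))
```

Converting to UTC before formatting matters: a reader that formats local time will look in the wrong hour (or day) partition.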

Buffer tuning example:

| Use Case | Buffer Size (MB) | Buffer Interval (sec) | Latency | S3 Cost |
|---|---|---|---|---|
| Low-latency BI | 1 | 60 | ~1m | Higher |
| Cost-efficient | 10 | 300 | ~5m | Lower |
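
The trade-off in the table follows from simple arithmetic: a flush happens when the size or the time threshold is hit first, and each flush creates at least one S3 object. The sketch below estimates flush frequency under the simplifying assumptions of steady throughput and a single object per flush; real object counts can be higher.

```python
def estimate_flush(throughput_mb_per_sec, buffer_mb, buffer_seconds):
    """Estimate seconds between flushes and S3 objects per day for one delivery stream.

    Assumes steady throughput and that a flush happens when either threshold is hit first.
    """
    seconds_to_fill = buffer_mb / throughput_mb_per_sec
    flush_every = min(buffer_seconds, seconds_to_fill)
    objects_per_day = 86_400 / flush_every
    return flush_every, objects_per_day


if __name__ == "__main__":
    # Compare the two table rows at a modest 0.01 MB/sec (about 10 KB/sec).
    for label, size_mb, interval in [("Low-latency BI", 1, 60), ("Cost-efficient", 10, 300)]:
        every, per_day = estimate_flush(0.01, size_mb, interval)
        print(f"{label}: flush every {every:.0f}s, about {per_day:.0f} objects/day")
```

At low throughput the interval dominates, so the 60-second setting writes five times as many objects as the 300-second one; at high throughput the size threshold takes over and the interval barely matters.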

Lifecycle policies: Expire or transition objects to Glacier after 30+ days if not queried regularly.

Compliance tip: Enable server-side encryption (SSE-KMS), require SSL in bucket policy, and log access using CloudTrail.
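
Both tips can be expressed as plain configuration documents. The sketch below shows a lifecycle configuration in the shape accepted by boto3's `put_bucket_lifecycle_configuration` and a bucket policy that denies non-TLS requests. The rule ID, the 365-day expiry, and the prefix are assumptions for illustration; the bucket name comes from the example prefix above.

```python
import json

BUCKET = "telemetry-archive"  # bucket name taken from the example prefix above

# Shape accepted by s3.put_bucket_lifecycle_configuration(LifecycleConfiguration=...).
lifecycle_configuration = {
    "Rules": [{
        "ID": "archive-telemetry",                # hypothetical rule name
        "Filter": {"Prefix": "app-telemetry-v1/"},
        "Status": "Enabled",
        "Transitions": [{"Days": 30, "StorageClass": "GLACIER"}],
        "Expiration": {"Days": 365},              # assumed retention period
    }]
}

# Bucket policy that denies any request not made over TLS.
require_tls_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Sid": "DenyInsecureTransport",
        "Effect": "Deny",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": [f"arn:aws:s3:::{BUCKET}", f"arn:aws:s3:::{BUCKET}/*"],
        "Condition": {"Bool": {"aws:SecureTransport": "false"}},
    }],
}

if __name__ == "__main__":
    print(json.dumps(lifecycle_configuration, indent=2))
    # put_bucket_policy expects the policy as a JSON string.
    print(json.dumps(require_tls_policy, indent=2))
```

Scoping the lifecycle rule to the data prefix keeps it from touching other objects, such as error output, stored in the same bucket.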

5. Practical Cost Controls

- Shard count: Don’t launch with 32 shards unless it’s a migration or spike event. Four shards cover roughly 4 MB/sec (or 4,000 records/sec) of aggregate ingest. Monitor and adjust weekly.

- Firehose buffers: Large buffers cut S3 PUT costs, but may introduce several minutes' delay. For data lakes, latency is rarely urgent; for dashboarding, tune low.

- Data formats: Parquet yields dramatic savings in Athena/Glue, but Firehose format conversion needs a table schema in the AWS Glue Data Catalog and a buffer of at least 64MB.

- Error monitoring: Create CloudWatch alarms for WriteProvisionedThroughputExceeded (Data Streams), ExecuteProcessing.Success (Firehose Lambda transformation), and DeliveryToS3.DataFreshness.

- Transformation discipline: Heavy ETL jobs should run in batch (Glue/EMR) post-landing. Inline Lambda is for simple schema tweaks.
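
As one example of the error monitoring above, an alarm on `DeliveryToS3.DataFreshness` can be described as the keyword arguments for boto3's CloudWatch `put_metric_alarm`. This is a sketch under assumptions: the alarm naming scheme, the 15-minute threshold, and the evaluation window are illustrative choices, not recommendations from AWS.

```python
def freshness_alarm(delivery_stream, max_age_seconds=900):
    """Build keyword arguments for boto3's cloudwatch put_metric_alarm call."""
    return {
        "AlarmName": f"{delivery_stream}-s3-data-freshness",  # hypothetical naming scheme
        "Namespace": "AWS/Firehose",
        "MetricName": "DeliveryToS3.DataFreshness",
        "Dimensions": [{"Name": "DeliveryStreamName", "Value": delivery_stream}],
        "Statistic": "Maximum",
        "Period": 300,                 # evaluate in 5-minute windows
        "EvaluationPeriods": 3,        # alarm after 15 minutes of stale data
        "Threshold": float(max_age_seconds),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "breaching",  # no data at all is also treated as a problem
    }


if __name__ == "__main__":
    alarm = freshness_alarm("app-telemetry-v1-to-s3")
    for key, value in alarm.items():
        print(f"{key}: {value}")
    # To create it: boto3.client("cloudwatch").put_metric_alarm(**alarm)
```

The threshold should sit comfortably above the configured buffer interval; otherwise normal buffering will trigger the alarm.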

Example: Troubleshooting a Production Incident

At scale (>500 records/sec, ~5MB/sec), a common operational pitfall is silent Lambda timeouts:

    {
      "errorMessage": "Task timed out after 60.06 seconds"
    }

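This indicates an undersized memory setting (Lambda allocates CPU in proportion to memory), a timeout that is too short for the batch size, or an inefficient transformation. Refactor logic to minimize per-record processing, and rely on the Data Stream's retention window to replay records once the fix is deployed.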
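
One way to make timeouts visible instead of silent is to watch the remaining execution time and return unprocessed records as `ProcessingFailed`, so the invocation finishes cleanly and the affected records land in the error output. The sketch below assumes a placeholder `transform` function and a hypothetical 5-second safety margin; `FakeContext` exists only to exercise the handler locally.

```python
import base64

SAFETY_MARGIN_MS = 5_000  # hypothetical reserve for building and returning the response


def transform(payload: bytes) -> bytes:
    """Placeholder for the real per-record transformation: normalize the trailing newline."""
    return payload.strip() + b"\n"


def lambda_handler(event, context):
    output = []
    for rec in event["records"]:
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Nearly out of time: return the record untouched and flag it as failed.
            output.append({"recordId": rec["recordId"],
                           "result": "ProcessingFailed",
                           "data": rec["data"]})
            continue
        data = transform(base64.b64decode(rec["data"]))
        output.append({"recordId": rec["recordId"],
                       "result": "Ok",
                       "data": base64.b64encode(data).decode("utf-8")})
    return {"records": output}


class FakeContext:
    """Local stand-in for the Lambda context; returns scripted remaining times."""

    def __init__(self, remaining_ms):
        self._remaining = list(remaining_ms)

    def get_remaining_time_in_millis(self):
        return self._remaining.pop(0)


if __name__ == "__main__":
    encoded = base64.b64encode(b"abc").decode("utf-8")
    event = {"records": [{"recordId": "1", "data": encoded},
                         {"recordId": "2", "data": encoded}]}
    result = lambda_handler(event, FakeContext([60_000, 1_000]))
    print([r["result"] for r in result["records"]])
```

This does not fix a slow transformation, but it turns a whole-batch timeout into per-record failures that are easier to count and replay.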

Alternatives and Non-Obvious Optimizations

- Direct Firehose ingestion (skipping Data Streams) is viable for non-critical sources, but for auditability, replay, and advanced filtering, Kinesis Data Streams + Firehose is more flexible.

- Defining the partitioned S3 prefix layout upfront (through the Firehose custom prefix; an S3 Lifecycle rule only handles expiry and storage-class transitions) simplifies downstream Athena queries and keeps costs predictable.

- For very high throughput (>MBps tier), consider splitting traffic among multiple Data Streams, then unifying in S3 Hive partitions.

Summary

Combining Kinesis Data Streams, Kinesis Firehose, Lambda transformations, and S3 storage delivers a low-ops, high-reliability analytics backbone. This architecture scales with input load (by shard and buffer tuning), provides fault tolerance (with retry mechanisms), and remains cost-effective through careful sizing and compression.

There is no single “best configuration”—monitor, iterate, and document each tweak. For automated deployment, start with a prebuilt CloudFormation or Terraform template and adapt for workload spikes.

Note: For example templates or advanced multi-stage streaming (with Kinesis Analytics, Kafka bridges, etc.), contact your AWS solution architect or review the public Terraform Registry.

ASCII Visual: Data Flow

[Producer Apps] ──> [Kinesis Data Streams] ──> [Firehose + Lambda] ──> [S3 Bucket]

Data is buffered, optionally transformed, and landed into partitioned, encrypted S3 buckets for downstream analytics.

Questions or need concrete IaC examples? Continue with parameterized templates for rapid redeploys — the design isn't static, nor should your monitoring be.