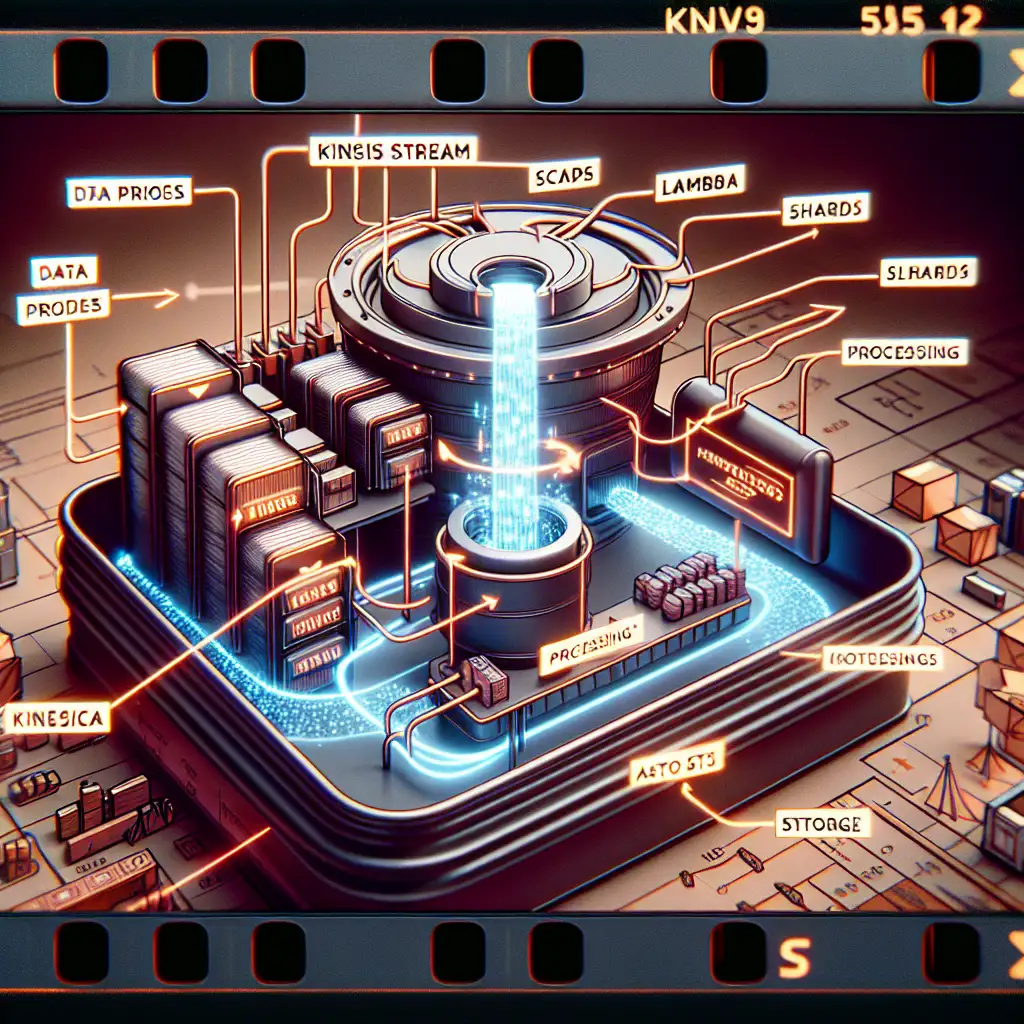

How to Architect a Cost-Effective, Scalable Pipeline from AWS Kinesis to S3 for Real-Time Analytics

Most engineers either overspend on streaming solutions or build brittle pipelines. This guide cuts through the noise to show a pragmatic, scalable approach to ingesting, transforming, and storing streaming data using AWS-native tools. Efficiently moving streaming data from AWS Kinesis to Amazon S3 is foundational for modern analytics workflows, enabling actionable insights without breaking the bank or sacrificing scalability.

Why AWS Kinesis to S3?

AWS Kinesis is a powerful service designed for real-time ingestion of streaming data — think clickstreams, telemetry, logs, sensor data, and more. But raw streams aren’t enough: you need reliable storage for downstream analytics and machine learning. Amazon S3 is the obvious choice here due to its durability, cost-effectiveness, and integration with analytic tools like Athena and Redshift Spectrum.

The challenge: architecting a streaming pipeline that is:

- Cost-effective: Avoid wasteful provisioning or expensive redundant services.

- Scalable: Able to handle variable throughput without bottlenecks.

- Maintainable: Simple components that don’t require constant babysitting.

- Real-time enough: Minimal delays between ingestion and storage.

This post takes you step-by-step through building such a pipeline leveraging AWS native services: Kinesis Data Streams, Kinesis Data Firehose, Lambda (for transformation), and S3.

Step 1: Set Up Your Kinesis Data Stream

Kinesis Data Streams is the backbone of ingestion. It stores records in shards, which allow concurrent writes and fan-out consumption.

Key Points:

- Create a Kinesis Data Stream with an appropriate shard count. Start with 1-2 shards and scale up as write throughput grows: each shard accepts up to 1 MB/sec or 1,000 records/sec of writes, whichever limit is hit first.

- Partition keys determine how records are distributed across shards — pick something like user ID or region to balance input load.
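To make the shard arithmetic concrete, here is a minimal sizing sketch. It assumes provisioned-mode limits of 1 MB/sec and 1,000 records/sec per shard; the function name `estimate_shard_count` and the sample workload are illustrative, not part of any AWS SDK.

```python
import math

# Per-shard write limits for a provisioned-mode stream.
SHARD_WRITE_MB_PER_SEC = 1.0
SHARD_WRITE_RECORDS_PER_SEC = 1000


def estimate_shard_count(records_per_sec: float, avg_record_kb: float) -> int:
    """Return the smallest shard count that satisfies both write limits."""
    mb_per_sec = records_per_sec * avg_record_kb / 1024
    by_bytes = math.ceil(mb_per_sec / SHARD_WRITE_MB_PER_SEC)
    by_records = math.ceil(records_per_sec / SHARD_WRITE_RECORDS_PER_SEC)
    return max(1, by_bytes, by_records)


# Hypothetical workload: 1,500 events/sec at about 2 KB each.
print(estimate_shard_count(1500, 2.0))  # 3
```

The estimate assumes partition keys spread load evenly. A hot key can throttle one shard even when the total looks comfortable, so treat the result as a floor rather than a guarantee.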

Example AWS CLI command:

aws kinesis create-stream --stream-name my-stream --shard-count 2
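Once the stream exists, producers can write to it in batches. The sketch below builds `PutRecords` entries of at most 500 records each and partitions by a `user_id` field; that field name, the helper names, and the `my-stream` default are assumptions for illustration. It ignores per-request size limits, and the `send` function needs `boto3` and AWS credentials, so it is shown but not called.

```python
import json
from typing import Dict, Iterable, Iterator, List

MAX_RECORDS_PER_CALL = 500  # PutRecords accepts at most 500 records per request


def build_put_records_batches(
    events: Iterable[Dict], key_field: str = "user_id"
) -> Iterator[List[Dict]]:
    """Yield lists of PutRecords entries, each at most 500 long."""
    batch: List[Dict] = []
    for event in events:
        batch.append({
            "Data": json.dumps(event).encode("utf-8"),
            # The partition key decides which shard receives the record.
            "PartitionKey": str(event[key_field]),
        })
        if len(batch) == MAX_RECORDS_PER_CALL:
            yield batch
            batch = []
    if batch:
        yield batch


def send(events: Iterable[Dict], stream_name: str = "my-stream") -> int:
    """Send events with boto3 and return how many entries failed."""
    import boto3  # imported lazily so the batching helper works without it

    client = boto3.client("kinesis")
    failed = 0
    for batch in build_put_records_batches(events):
        response = client.put_records(StreamName=stream_name, Records=batch)
        # PutRecords is not all-or-nothing: failed entries need a retry.
        failed += response["FailedRecordCount"]
    return failed


batches = list(build_put_records_batches({"user_id": i} for i in range(1201)))
print([len(b) for b in batches])  # [500, 500, 201]
```

A production producer would retry the failed entries with backoff instead of only counting them.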

Step 2: Choose Delivery Mechanism: Kinesis Data Firehose

For simple delivery directly to S3 with built-in buffering and retries, Kinesis Data Firehose (renamed Amazon Data Firehose in 2024) shines. It ingests natively from your Kinesis Data Stream or from other sources and deposits data into S3 with minimal fuss.

Why Firehose?

- Automated buffering by size (e.g., 5MB) or time (e.g., 300 seconds)

- Automatic retries & error handling

- Optional record format conversion (JSON → Parquet/ORC) for efficient storage

- Compression support (GZIP/Snappy)

Firehose can also accept records directly from your producers, but placing it downstream of Kinesis Data Streams gives you more control: other consumers can read the same stream, and records can be replayed within the stream's retention period.
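The buffering behavior is easier to reason about with a toy model: a buffer flushes when either the size threshold or the time threshold is reached, whichever comes first. The simulation below is a simplified sketch of that rule, not Firehose's actual implementation, and `simulate_flushes` is a made-up helper name.

```python
from typing import Iterable, List, Tuple


def simulate_flushes(
    arrivals: Iterable[Tuple[int, int]],
    size_limit_bytes: int,
    interval_sec: int,
) -> List[Tuple[int, int]]:
    """Return (flush_time_sec, flushed_bytes) pairs for a size-or-time buffer.

    arrivals: (timestamp_sec, record_bytes) pairs in time order.
    """
    flushes = []
    buffered = 0
    window_start = None
    for ts, size in arrivals:
        # Time-based flush: the interval expired before this record arrived.
        if window_start is not None and ts - window_start >= interval_sec:
            flushes.append((window_start + interval_sec, buffered))
            buffered, window_start = 0, None
        if window_start is None:
            window_start = ts
        buffered += size
        # Size-based flush: the buffer filled before the interval expired.
        if buffered >= size_limit_bytes:
            flushes.append((ts, buffered))
            buffered, window_start = 0, None
    if buffered:
        # Whatever is left goes out when its interval ends.
        flushes.append((window_start + interval_sec, buffered))
    return flushes


# Low-volume stream: one 10 KB record per second for two minutes.
slow = [(t, 10_000) for t in range(120)]
print(simulate_flushes(slow, size_limit_bytes=5_000_000, interval_sec=60))
# [(60, 600000), (120, 600000)]
```

At low volume the interval dominates, so each S3 object is small and latency equals the interval. At high volume the size limit triggers first and objects land sooner.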

Step 3: Transform Your Streaming Data (Optional but Recommended)

Often raw data requires lightweight transformation (filtering fields, parsing JSON, enriching records). You can use AWS Lambda as a transformation layer integrated with Firehose:

- Configure Firehose with a Lambda function for transformation.

- The Lambda function receives records in batches (<6 MB total per invocation) and must return every record, identified by its recordId, with a result of Ok, Dropped, or ProcessingFailed.

- Firehose puts transformed records into S3.

Example Lambda snippet in Python:

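    import base64
    import json
    from datetime import datetime, timezone


    def lambda_handler(event, context):
        """Firehose transformation: keep purchase events, drop everything else."""
        output = []
        for record in event['records']:
            try:
                # Firehose delivers each record's payload base64-encoded.
                payload = base64.b64decode(record['data']).decode('utf-8')
                data = json.loads(payload)
            except ValueError:
                # Undecodable or malformed input: mark it as failed so Firehose
                # can route the original record to its error output.
                output.append({
                    'recordId': record['recordId'],
                    'result': 'ProcessingFailed',
                    'data': record['data'],
                })
                continue

            # Example transformation: filter only purchase events
            if data.get('event_type') == 'purchase':
                # Example enrichment: stamp the record with the processing time (UTC).
                data['processed_at'] = datetime.now(timezone.utc).isoformat()
                # A trailing newline keeps the S3 object newline-delimited JSON.
                line = json.dumps(data) + '\n'
                output_record = {
                    'recordId': record['recordId'],
                    'result': 'Ok',
                    'data': base64.b64encode(line.encode('utf-8')).decode('utf-8'),
                }
            else:
                # Drop non-purchase events
                output_record = {
                    'recordId': record['recordId'],
                    'result': 'Dropped',
                    'data': '',
                }
            output.append(output_record)

        return {'records': output}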

Deploy this Lambda function and attach it to your Firehose delivery stream via the console or CLI.
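Before deploying, it helps to exercise a transformation handler locally. The sketch below builds a synthetic event and checks that a response returns every recordId with a recognized result. The record IDs are made up, real events carry extra metadata fields that are omitted here, and `passthrough_handler` is only a stand-in for your own function.

```python
import base64
import json
from typing import Dict, List

VALID_RESULTS = {"Ok", "Dropped", "ProcessingFailed"}


def make_firehose_event(payloads: List[Dict]) -> Dict:
    """Build a minimal synthetic transformation event (record IDs are made up)."""
    return {
        "records": [
            {
                "recordId": f"record-{i}",
                "data": base64.b64encode(
                    json.dumps(payload).encode("utf-8")
                ).decode("utf-8"),
            }
            for i, payload in enumerate(payloads)
        ]
    }


def check_response(event: Dict, response: Dict) -> List[str]:
    """Return a list of problems; an empty list means the response looks valid."""
    problems = []
    sent = sorted(r["recordId"] for r in event["records"])
    returned = sorted(r["recordId"] for r in response["records"])
    if sent != returned:
        problems.append("record IDs in the response do not match the request")
    for r in response["records"]:
        if r["result"] not in VALID_RESULTS:
            problems.append(f"{r['recordId']}: unknown result {r['result']!r}")
    return problems


def passthrough_handler(event, context):
    """Stand-in handler that returns every record unchanged."""
    return {
        "records": [
            {"recordId": r["recordId"], "result": "Ok", "data": r["data"]}
            for r in event["records"]
        ]
    }


event = make_firehose_event([{"event_type": "purchase"}, {"event_type": "view"}])
print(check_response(event, passthrough_handler(event, None)))  # []
```

Swapping `passthrough_handler` for your real `lambda_handler` turns this into a quick smoke test that can run in CI without touching AWS.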

Step 4: Configure Firehose Delivery Stream Targeting S3

Set up your Firehose delivery stream:

- Choose source as your existing Kinesis Data Stream.

- Attach the Lambda transform function.

- Define your destination bucket in Amazon S3.

- Optimize buffer size/time based on latency requirements vs cost tradeoffs. Smaller buffers reduce latency but increase PUT request counts and thus cost.

- Enable compression (e.g., GZIP) to reduce storage footprint.

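- Optionally enable data format conversion (JSON → Parquet) if you want efficient columnar storage for analytics later. Conversion needs a table schema in the AWS Glue Data Catalog and raises the minimum buffer size to 64 MB, so it trades latency for query efficiency.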

Example buffer settings:

- Buffer hints: For a near-real-time use case, set the buffer size to ~1 MB and the buffer interval to ~60 seconds.
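The same settings can be expressed in code. This sketch assembles the keyword arguments for boto3's `create_delivery_stream` call with a Kinesis stream source, a Lambda processor, GZIP compression, and the buffer hints above. The helper name and every ARN are placeholders, and reusing one IAM role for both source and destination is a simplification.

```python
from typing import Dict


def build_delivery_stream_params(
    name: str,
    stream_arn: str,
    bucket_arn: str,
    role_arn: str,
    lambda_arn: str,
    buffer_mb: int = 1,
    buffer_seconds: int = 60,
) -> Dict:
    """Assemble keyword arguments for firehose.create_delivery_stream."""
    return {
        "DeliveryStreamName": name,
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": stream_arn,
            "RoleARN": role_arn,
        },
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,
            "BucketARN": bucket_arn,
            "BufferingHints": {
                "SizeInMBs": buffer_mb,
                "IntervalInSeconds": buffer_seconds,
            },
            "CompressionFormat": "GZIP",
            "ProcessingConfiguration": {
                "Enabled": True,
                "Processors": [{
                    "Type": "Lambda",
                    "Parameters": [
                        {"ParameterName": "LambdaArn", "ParameterValue": lambda_arn},
                    ],
                }],
            },
        },
    }


# Every name and ARN below is a placeholder.
params = build_delivery_stream_params(
    name="my-delivery-stream",
    stream_arn="arn:aws:kinesis:us-east-1:123456789012:stream/my-stream",
    bucket_arn="arn:aws:s3:::your-bucket",
    role_arn="arn:aws:iam::123456789012:role/firehose-delivery-role",
    lambda_arn="arn:aws:lambda:us-east-1:123456789012:function:purchase-filter",
)
# With credentials configured, the call would be:
#   import boto3
#   boto3.client("firehose").create_delivery_stream(**params)
print(params["ExtendedS3DestinationConfiguration"]["BufferingHints"])
```

Keeping the parameters in a plain function makes them easy to review and unit test before anything is created in your account.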

Step 5: Organize Your S3 Buckets for Analytics

Use a timestamp-based key prefix layout (Hive-style partitioning) to keep things query-friendly in Athena:

s3://your-bucket/stream-name/year=2024/month=06/day=15/hour=14/

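Firehose can write this layout for you through a custom S3 prefix built from timestamp expressions, for example year=!{timestamp:yyyy}/month=!{timestamp:MM}/day=!{timestamp:dd}/hour=!{timestamp:HH}/. Dynamic partitioning, if configured, additionally lets you partition on fields inside each record.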
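Downstream jobs often need to compute the same prefix, for example to list or reprocess one hour of data. Here is a small helper for that, assuming partitions are keyed on UTC time; `partition_prefix` is an illustrative name.

```python
from datetime import datetime, timezone


def partition_prefix(stream_name: str, ts: datetime) -> str:
    """Return the Hive-style key prefix for the hour containing ts (in UTC)."""
    ts = ts.astimezone(timezone.utc)
    return (
        f"{stream_name}/year={ts:%Y}/month={ts:%m}/"
        f"day={ts:%d}/hour={ts:%H}/"
    )


when = datetime(2024, 6, 15, 14, 30, tzinfo=timezone.utc)
print(partition_prefix("stream-name", when))
# stream-name/year=2024/month=06/day=15/hour=14/
```

Zero-padded month, day, and hour values keep the keys lexicographically sortable, which also keeps partition listings in time order.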

Additionally:

- Set lifecycle policies on your bucket — transition older logs to Glacier or delete after retention period.

- Enable server-side encryption for compliance/security.
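Both bucket settings can be scripted. The sketch below builds the arguments for boto3's `put_bucket_lifecycle_configuration` and `put_bucket_encryption` calls. The 90-day and 365-day defaults, the rule ID, and the bucket and prefix names are assumptions to adapt to your own retention policy.

```python
from typing import Dict


def build_lifecycle_params(
    bucket: str,
    prefix: str,
    glacier_after_days: int = 90,
    expire_after_days: int = 365,
) -> Dict:
    """Arguments for s3.put_bucket_lifecycle_configuration."""
    if expire_after_days <= glacier_after_days:
        raise ValueError("expiration must come after the Glacier transition")
    return {
        "Bucket": bucket,
        "LifecycleConfiguration": {
            "Rules": [{
                "ID": "archive-then-expire-stream-data",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": expire_after_days},
            }]
        },
    }


def build_encryption_params(bucket: str) -> Dict:
    """Arguments for s3.put_bucket_encryption using S3-managed keys."""
    return {
        "Bucket": bucket,
        "ServerSideEncryptionConfiguration": {
            "Rules": [
                {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}},
            ]
        },
    }


lifecycle = build_lifecycle_params("your-bucket", "stream-name/")
encryption = build_encryption_params("your-bucket")
# With credentials configured, the calls would be:
#   import boto3
#   s3 = boto3.client("s3")
#   s3.put_bucket_lifecycle_configuration(**lifecycle)
#   s3.put_bucket_encryption(**encryption)
print(lifecycle["LifecycleConfiguration"]["Rules"][0]["Transitions"])
```

If compliance requires customer-managed keys, the `SSEAlgorithm` would be `aws:kms` with a key ID instead of `AES256`.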

Cost Control Tips

- Right-size shards: Don’t start with dozens of shards unless necessary; each shard costs money.

- Buffer sizing: Adjust Firehose buffer intervals wisely — larger buffers save PUT requests but increase latency.

- Use compression & Parquet: Reduce both storage cost and query time when running Athena queries.

- Leverage CloudWatch metrics: Monitor IncomingRecords, DeliveryToS3.Bytes, and WriteProvisionedThroughputExceeded alarms to detect bottlenecks early.

- Avoid unnecessary Lambda invocations: Keep transformations lightweight; complex heavy-lifting can be done offline instead of in-stream.
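As one way to act on the monitoring tip, this sketch builds the arguments for a CloudWatch alarm that fires when the stream rejects writes. The alarm name, the five-minute evaluation window, and the optional SNS topic are assumptions; the metric and namespace are the ones Kinesis Data Streams publishes.

```python
from typing import Dict, Optional


def build_throttle_alarm_params(
    stream_name: str, sns_topic_arn: Optional[str] = None
) -> Dict:
    """Arguments for cloudwatch.put_metric_alarm on write throttling."""
    params = {
        "AlarmName": f"{stream_name}-write-throttled",
        "Namespace": "AWS/Kinesis",
        "MetricName": "WriteProvisionedThroughputExceeded",
        "Dimensions": [{"Name": "StreamName", "Value": stream_name}],
        "Statistic": "Sum",
        "Period": 60,             # evaluate one-minute sums
        "EvaluationPeriods": 5,   # for five minutes in a row
        "Threshold": 0.0,         # any throttled write counts
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
    }
    if sns_topic_arn:
        params["AlarmActions"] = [sns_topic_arn]
    return params


alarm = build_throttle_alarm_params("my-stream")
# With credentials configured, the call would be:
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(**alarm)
print(alarm["AlarmName"])  # my-stream-write-throttled
```

Sustained throttling usually means it is time to add shards or revisit the partition key, so this alarm doubles as a scaling signal.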

Final Thoughts

By combining AWS Kinesis Data Streams + Kinesis Data Firehose + Lambda + S3 thoughtfully, you build a streaming pipeline that's:

- Reliable: Managed retries keep data flowing smoothly under faults.

- Scalable: Shard counts can be raised or lowered as traffic changes, and Firehose scales its delivery throughput automatically.

- Cost-effective: Native AWS integrations reduce management overhead & wasteful over-provisioning.

- Flexible: Transformation hooks enable real-time ETL before landing files into cheap storage.

This pattern forms an excellent foundation for all kinds of real-time analytics workloads — whether powering dashboards via Athena/QuickSight or feeding ML pipelines downstream.

If you want a starter CloudFormation template or example scripts next, let me know! Happy streaming! 🚀