Leveraging AWS Lambda for Serverless Application Scaling

Stop provisioning EC2 instances just to handle background jobs, scheduled tasks, or spiky event-driven workloads. With AWS Lambda, operational overhead is nearly eliminated: write function code, define triggers, and let AWS deal with the rest—automatic scaling, high availability, and pay-per-use billing.

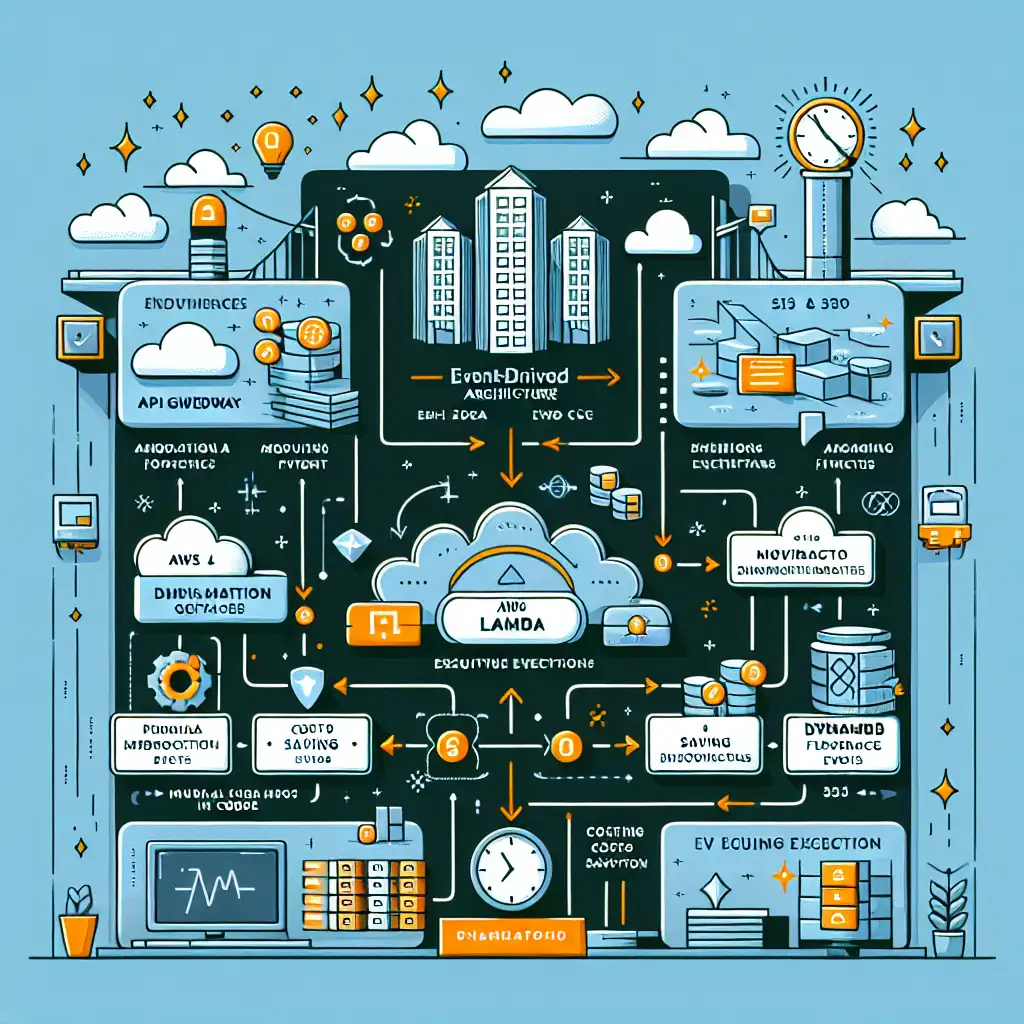

AWS Lambda: Architecture and Real-World Benefits

AWS Lambda is an event-driven compute service: your code runs in short-lived, AWS-managed execution environments and is invoked by events from other AWS services, such as HTTP requests via API Gateway, S3 object creation, DynamoDB streams, or Amazon EventBridge (formerly CloudWatch Events) rules.

Key operational impacts:

- No server patching or capacity planning.

- Automatic horizontal scaling to absorb burst loads, within your account's concurrency quota (1,000 concurrent executions per Region by default).

- Per-millisecond billing granularity (since December 2020, for all runtimes).

- Integrated with AWS IAM for fine-grained permissions.

The cost model follows actual usage (a per-request charge plus compute time measured in GB-seconds), not reserved capacity.
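As a rough illustration of that cost model, the sketch below estimates a monthly bill as request charges plus compute charges, with compute measured in GB-seconds (configured memory in GB × billed duration in seconds). The prices are caller-supplied placeholders, not current AWS rates, and the sketch ignores the free tier, architecture differences, and ephemeral-storage charges.

```javascript
// Rough Lambda cost estimate: request charges + compute (GB-second) charges.
// Prices are placeholders supplied by the caller; check current AWS pricing
// for your Region and architecture. The free tier is ignored.
function estimateMonthlyCost({
  invocations,             // requests per month
  avgBilledMs,             // average billed duration per invocation (ms)
  memoryMb,                // configured memory (MB)
  pricePerMillionRequests, // placeholder rate
  pricePerGbSecond,        // placeholder rate
}) {
  const requestCost = (invocations / 1_000_000) * pricePerMillionRequests;
  const gbSeconds = invocations * (avgBilledMs / 1000) * (memoryMb / 1024);
  const computeCost = gbSeconds * pricePerGbSecond;
  return { gbSeconds, requestCost, computeCost, total: requestCost + computeCost };
}

// Hypothetical workload: 5M requests/month, 120 ms billed, 256 MB.
const estimate = estimateMonthlyCost({
  invocations: 5_000_000,
  avgBilledMs: 120,
  memoryMb: 256,
  pricePerMillionRequests: 0.2,   // placeholder, not a quoted AWS price
  pricePerGbSecond: 0.0000166667, // placeholder, not a quoted AWS price
});
console.log(estimate);
```

Keeping prices as inputs makes it easy to plug in the current rates for your Region rather than trusting hard-coded numbers.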

Example: Stateless API Greeting Endpoint

The following example creates a minimal Node.js Lambda handler to process a POST payload such as:

```json
{ "name": "Alice" }
```

Code (index.js):

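```javascript
// index.js: greets the caller by the name found in the request body.
exports.handler = async (event) => {
  let name;
  try {
    // API Gateway proxy events carry the body as a JSON string;
    // hand-written test events may pass an object instead.
    const body = typeof event.body === 'string' ? JSON.parse(event.body) : event.body;
    name = body?.name || 'World';
  } catch (err) {
    // Body was a string but not valid JSON.
    return { statusCode: 400, body: JSON.stringify('Bad Request') };
  }

  return {
    statusCode: 200,
    body: JSON.stringify(`Hello, ${name}! Welcome to AWS Lambda.`),
  };
};
```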

Note: The handler accepts both body shapes because they depend on the trigger source: API Gateway's Lambda proxy integration delivers `body` as a JSON string, while a hand-written console test event may supply an object.
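To exercise both body shapes without deploying, you can call the handler from a plain Node.js script. The sketch below inlines a copy of the handler so it runs standalone (in a real project you would `require('./index')` instead); the sample events are hand-written approximations, not complete API Gateway events.

```javascript
// Local smoke test for the greeting handler (copy inlined so this file runs standalone).
const handler = async (event) => {
  let name;
  try {
    const body = typeof event.body === 'string' ? JSON.parse(event.body) : event.body;
    name = body?.name || 'World';
  } catch (err) {
    return { statusCode: 400, body: JSON.stringify('Bad Request') };
  }
  return { statusCode: 200, body: JSON.stringify(`Hello, ${name}! Welcome to AWS Lambda.`) };
};

// Hand-written events covering the shapes the handler must accept.
const events = {
  stringBody: { body: '{"name":"Alice"}' }, // proxy-integration style
  objectBody: { body: { name: 'Alice' } },  // console test event style
  missingBody: {},                          // falls back to "World"
  malformed: { body: '{not json' },         // exercises the 400 path
};

async function main() {
  for (const [label, event] of Object.entries(events)) {
    const res = await handler(event);
    console.log(label, res.statusCode, res.body);
  }
}

main();
```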

Deploying a Lambda Function

Prerequisites

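- AWS CLI (`aws-cli/2.13.x` or later)

- Node.js 18.x LTS

- AWS IAM user with `AWSLambda_FullAccess`, plus IAM permissions to create roles (`iam:CreateRole`, `iam:AttachRolePolicy`) if you create the execution role yourself

- Optional: `zip` and `jq` utilities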

Deployment steps:

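- Package code (with dependencies, if any):

```shell
zip function.zip index.js
# With dependencies, include them recursively:
# zip -r function.zip index.js node_modules
```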

- Create the IAM execution role (if not pre-provisioned):

```shell
aws iam create-role --role-name lambda-greeter-role \
  --assume-role-policy-document file://trust-policy.json
aws iam attach-role-policy --role-name lambda-greeter-role \
  --policy-arn arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
```

Gotcha: IAM changes can take several seconds to propagate. If you create the function immediately after the role, expect occasional errors such as `InvalidParameterValueException` ("The role defined for the function cannot be assumed by Lambda"); wait briefly and retry.

- Create the Lambda function:

```shell
aws lambda create-function \
  --function-name greetingFunction \
  --runtime nodejs18.x \
  --handler index.handler \
  --role arn:aws:iam::<ACCOUNT_ID>:role/lambda-greeter-role \
  --zip-file fileb://function.zip
```
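The `create-role` command above reads the role's trust policy from `trust-policy.json`, which is not shown. The standard Lambda trust policy allows the `lambda.amazonaws.com` service principal to call `sts:AssumeRole`. A minimal sketch that writes it with Node's built-in `fs` module (only the file name comes from the CLI command; the policy body is the usual boilerplate):

```javascript
// Writes trust-policy.json: allows the Lambda service to assume the execution role.
const fs = require('fs');

const trustPolicy = {
  Version: '2012-10-17',
  Statement: [
    {
      Effect: 'Allow',
      Principal: { Service: 'lambda.amazonaws.com' },
      Action: 'sts:AssumeRole',
    },
  ],
};

fs.writeFileSync('trust-policy.json', JSON.stringify(trustPolicy, null, 2));
console.log('Wrote trust-policy.json');
```

Run it once from the directory where you invoke `aws iam create-role`, so that `file://trust-policy.json` resolves.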

Testing Lambda Locally and Remotely

Console test event:

Sample payload:

```json
{
  "body": "{\"name\":\"Alice\"}"
}
```

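CLI invocation (AWS CLI v2 needs `--cli-binary-format raw-in-base64-out` to accept a raw JSON payload):

```shell
aws lambda invoke \
  --function-name greetingFunction \
  --cli-binary-format raw-in-base64-out \
  --payload '{"body": "{\"name\": \"Bob\"}"}' \
  output.json

cat output.json
```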

Response sample:

```json
{"statusCode":200,"body":"\"Hello, Bob! Welcome to AWS Lambda.\""}
```

Note: If the input is malformed, the handler returns the 400 response defined in the code. Function logs (console output and unhandled errors) go to CloudWatch Logs by default.
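Hand-escaping the nested JSON in `--payload` is easy to get wrong, and the response body is itself JSON-encoded (the handler calls `JSON.stringify` on a string). A small sketch that builds the payload with two `JSON.stringify` calls and unwraps a response like the one saved in `output.json`; the helper names `buildPayload` and `readGreeting` are illustrative:

```javascript
// Build the invoke payload: the outer event is JSON, and its "body" field is a JSON string.
function buildPayload(bodyObject) {
  return JSON.stringify({ body: JSON.stringify(bodyObject) });
}

// Unwrap a saved response: parse the outer object, then the JSON-encoded body.
function readGreeting(responseText) {
  const response = JSON.parse(responseText);
  return { statusCode: response.statusCode, message: JSON.parse(response.body) };
}

const payload = buildPayload({ name: 'Bob' });
console.log(payload); // {"body":"{\"name\":\"Bob\"}"}

const sample = '{"statusCode":200,"body":"\\"Hello, Bob! Welcome to AWS Lambda.\\""}';
console.log(readGreeting(sample));
```

To read the real file, pass `fs.readFileSync('output.json', 'utf8')` to `readGreeting`.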

Exposing the Function over HTTP (API Gateway)

Connect Lambda to REST/HTTP endpoints without running nginx or Express.

Steps:

- In API Gateway, create a REST API.

- Resource path: `/greet`

- Method: `POST` → Integration type: Lambda function (`greetingFunction`)

- Enable "Lambda Proxy Integration" for direct event forwarding.

- Deploy the API to a stage.
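With Lambda proxy integration, API Gateway passes the request body as a string and sets `isBase64Encoded` to `true` when it has base64-encoded that body (for example, for content types configured as binary media types). The greeting handler above ignores that flag. A hedged sketch of a body-parsing helper that honors it; the name `parseProxyBody` is hypothetical:

```javascript
// Parse a proxy-integration request body, decoding base64 first when flagged.
// Returns null for an empty body; throws SyntaxError on malformed JSON.
function parseProxyBody(event) {
  if (event.body == null || event.body === '') return null;
  if (typeof event.body !== 'string') return event.body; // e.g. hand-written test events
  const text = event.isBase64Encoded
    ? Buffer.from(event.body, 'base64').toString('utf8')
    : event.body;
  return JSON.parse(text);
}

// Example: the same JSON body, plain and base64-encoded.
const json = JSON.stringify({ name: 'Alice' });
console.log(parseProxyBody({ body: json, isBase64Encoded: false }));
console.log(parseProxyBody({ body: Buffer.from(json).toString('base64'), isBase64Encoded: true }));
```

Inside the handler, a call like this would replace the inline `typeof event.body === 'string'` check, keeping the existing `try`/`catch` for the 400 path.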

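Tip: Cold starts for Node.js functions (roughly 100–500 ms) occur whenever Lambda initializes a new execution environment, such as on the first request or when scaling out. Provisioned concurrency keeps environments initialized ahead of time; it increases cost but removes most of that unpredictable startup latency.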

Cost, Tuning, and Non-Obvious Trade-offs

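- Memory & CPU: Lambda allocates CPU power in proportion to configured memory. For CPU-bound functions, raising memory from the default 128 MB to 512 MB can shorten execution time considerably. Because compute is billed as memory × duration, however, that 4× memory increase only breaks even on cost if duration drops roughly fourfold; a smaller speedup buys lower latency at a higher price.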

- Timeout: Default is 3 seconds; max is 15 minutes. Don’t let functions linger.

- Environment variables: Use them for non-sensitive configuration. For secrets, prefer AWS Secrets Manager (which supports automatic rotation) or SSM Parameter Store `SecureString` parameters.

- Dependencies: Excessive package size bloats cold starts. Prune your `node_modules` and avoid bundling unnecessary libraries.
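Because compute is billed in GB-seconds, the break-even point for a memory change is simple arithmetic: multiplying memory by a factor k keeps cost flat only if duration falls by the same factor k. A small sketch comparing two configurations; the durations in the example are made-up inputs, not measurements:

```javascript
// Compute charge per invocation in GB-seconds: (memory in GB) x (billed seconds).
function gbSeconds(memoryMb, billedMs) {
  return (memoryMb / 1024) * (billedMs / 1000);
}

// Compare two memory settings for the same workload.
function compareConfigs(a, b) {
  const costA = gbSeconds(a.memoryMb, a.billedMs);
  const costB = gbSeconds(b.memoryMb, b.billedMs);
  return {
    ratio: costB / costA, // > 1 means config b costs more per invocation
    breakEvenMs: a.billedMs * (a.memoryMb / b.memoryMb), // b's duration for equal cost
  };
}

// Hypothetical: 128 MB at 800 ms vs. 512 MB at 400 ms (duration only halved).
console.log(compareConfigs({ memoryMb: 128, billedMs: 800 }, { memoryMb: 512, billedMs: 400 }));
```

In this made-up case the 512 MB setting costs twice as much per invocation and would need to finish in 200 ms to break even, so measure real durations at each setting before choosing.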

| Parameter | Default | Recommendation |
|---|---|---|
| Memory | 128 MB | 256–512 MB for most APIs |
| Timeout | 3 s | Under 10 s for web APIs |
| Ephemeral storage (`/tmp`) | 512 MB | Increase (up to 10,240 MB) if the function writes large temporary files |

Real-world log sample (CloudWatch):

```text
START RequestId: xxx Version: $LATEST
2024-06-11T14:23:42.753Z INFO Lambda invocation details: { requestId: 'xxx', function: 'greetingFunction' }
END RequestId: xxx
REPORT RequestId: xxx Duration: 3.61 ms Billed Duration: 4 ms Memory Size: 256 MB Max Memory Used: 46 MB
```

Notice that the billed duration (4 ms) is the measured duration (3.61 ms) rounded up to the next whole millisecond.
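The `REPORT` line carries everything needed to check the billing arithmetic: billed duration should equal the measured duration rounded up, and compute usage is memory size × billed duration. A sketch that parses a line in the format shown above; the regular expression covers only the fields used here and is an assumption about the line's layout, not an official schema:

```javascript
// Extract timing and memory fields from a Lambda REPORT log line.
const REPORT_RE =
  /Duration: ([\d.]+) ms\s+Billed Duration: (\d+) ms\s+Memory Size: (\d+) MB\s+Max Memory Used: (\d+) MB/;

function parseReport(line) {
  const m = REPORT_RE.exec(line);
  if (!m) return null; // not a REPORT line (or an unexpected layout)
  const [durationMs, billedMs, memoryMb, maxUsedMb] = m.slice(1).map(Number);
  return {
    durationMs,
    billedMs,
    memoryMb,
    maxUsedMb,
    roundedUpMatches: Math.ceil(durationMs) === billedMs, // billing rounds up to whole ms
    gbSeconds: (memoryMb / 1024) * (billedMs / 1000),
  };
}

const sample =
  'REPORT RequestId: xxx Duration: 3.61 ms Billed Duration: 4 ms Memory Size: 256 MB Max Memory Used: 46 MB';
console.log(parseReport(sample));
```

Comparing `maxUsedMb` with `memoryMb` across many invocations is also a quick way to spot over-provisioned memory.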

Operational Notes

- Monitor invocations and error rates with CloudWatch metrics.

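- For alerting, create a CloudWatch alarm on the function's `Errors` metric with an SNS topic as the alarm action; for asynchronous invocations, you can also route failures to an SNS topic through an on-failure destination.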

- Set up dead-letter queues (DLQ) for failed asynchronous invocations.

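Known limit: Deployment packages are capped at 50 MB zipped for direct upload and 250 MB unzipped, including layers. Use Lambda Layers to share libraries across functions, and consider a container image (up to 10 GB) when dependencies outgrow these limits.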

Summary

AWS Lambda simplifies event-driven compute: no EC2 management, built-in fault tolerance, and costs driven by actual usage. Paired with API Gateway, it is a practical fit for stateless HTTP endpoints, periodic tasks, and automating AWS workflows.

For robust production deployments, optimize memory/timeout, enforce dependency hygiene, and monitor logs for anomalies. Alternative serverless execution environments (e.g., Google Cloud Functions, Azure Functions) have similar capabilities, but integration with broader AWS services tips the scale for most AWS-centric architectures.

Don’t expect Lambda to be perfect for all use cases—heavyweight stateful or low-latency workloads may require containerized or VM-based approaches—but within its domain, it’s hard to match for flexibility and operational efficiency.