Athena Query Results: Automating Reliable Exports to Amazon S3

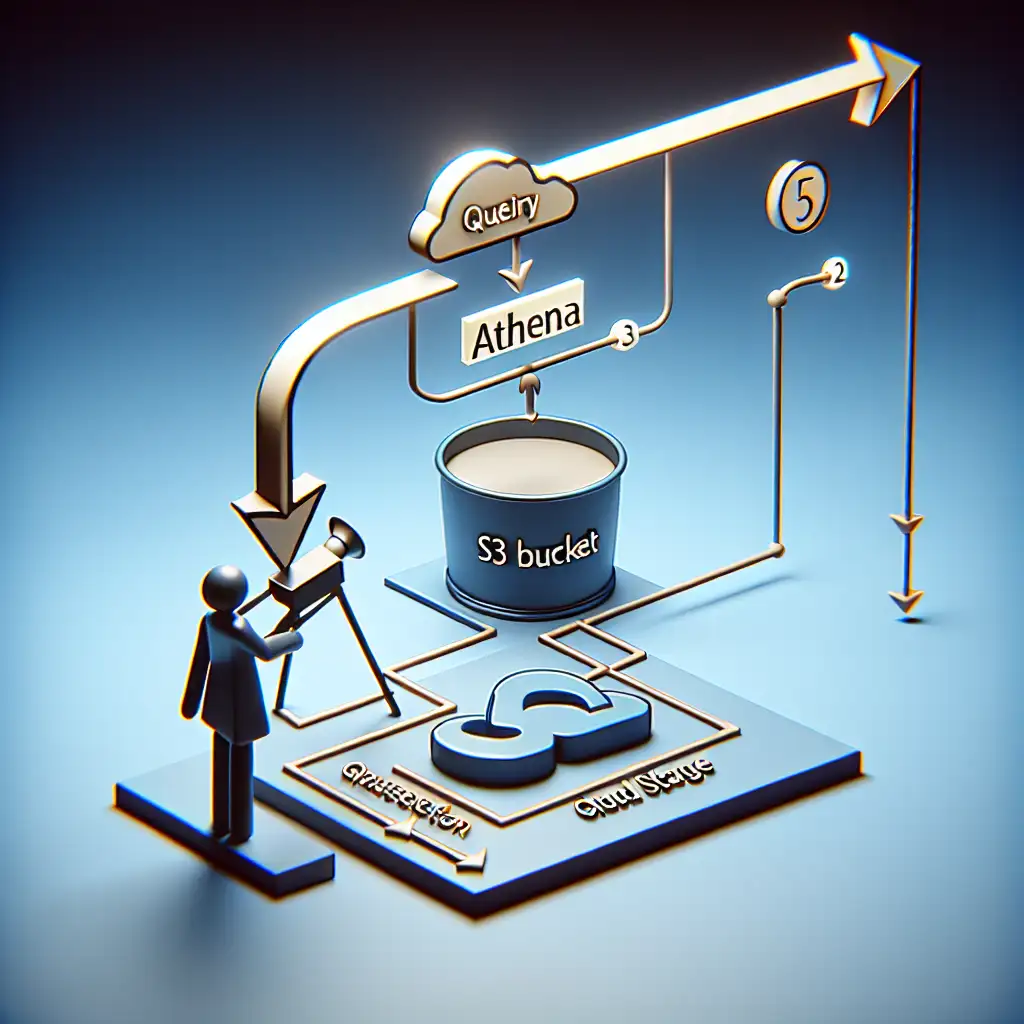

Manual download of AWS Athena query results? Not sustainable at scale. Automating Athena-to-S3 exports cuts friction, ensures auditable data provenance, and directly supports robust analytics pipelines.

Typical scenario: Analysts pull daily sales aggregates from Athena. Each result must be archived in S3 for compliance and further processing by Glue jobs. Manual handling wastes engineering time and introduces risk of data loss.

Core Reasons to Automate Athena Exports

- Immediate S3 Integration: Query results delivered straight to S3; zero manual intervention.

- Cost & Scale: Athena's default query results location is short-lived and ill-suited for production retention. S3's tiered storage and lifecycle policies are designed for this workload.

- Data Toolchain Readiness: S3 is the common denominator for downstream tools—Redshift Spectrum, AWS Glue ETL, EMR Spark clusters.

- Operational Efficiency: Fewer manual steps, fewer mistakes.

Note: Athena query result exports are always written to S3; the only question is location and automation. Using a dedicated bucket or prefix avoids accidental overwrites and enables fine-grained access control.

Implementation: Reliable Athena-to-S3 Automation

1. S3 Bucket Configuration

Provision a dedicated S3 bucket or prefix with restricted access for Athena:

s3://my-athena-query-results/exports/

IAM policy must grant athena:StartQueryExecution and relevant S3 write permissions to whatever principal (user, role, Lambda) will execute queries.

Tip: Use versioning on the bucket for compliance.

2. Automate Query Execution with SDK or CLI

Programmatic execution—using Python boto3, AWS CLI, or even a CI/CD pipeline—enables reproducibility and scheduling.

Example: Python, boto3 ≥1.26, Python 3.9

import boto3

import time

ATHENA_OUTPUT = "s3://my-athena-query-results/exports/"

athena = boto3.client('athena', region_name="us-west-2")

def run_query(sql, db, s3_output):

resp = athena.start_query_execution(

QueryString=sql,

QueryExecutionContext={'Database': db},

ResultConfiguration={'OutputLocation': s3_output}

)

return resp['QueryExecutionId']

def await_query(qid):

while True:

status = athena.get_query_execution(QueryExecutionId=qid)['QueryExecution']['Status']['State']

if status == 'SUCCEEDED':

return True

if status in ('FAILED', 'CANCELLED'):

return False

time.sleep(2)

SQL = "SELECT year, count(*) as cnt FROM sales GROUP BY year"

qid = run_query(SQL, "my_db", ATHENA_OUTPUT)

if await_query(qid):

print(f"Output: {ATHENA_OUTPUT}{qid}.csv")

else:

print(f"Query {qid} failed")

- Output filename = QueryExecutionId. Athena writes output as CSV or Parquet (if specified), e.g.:

s3://my-athena-query-results/exports/56dcabcd-11d5-4d28-bc21-e9d7fb08631a.csv - Error handling is minimal above; in practice, trap and log boto3 exceptions.

Gotcha: If you omit the ResultConfiguration, Athena defaults to the workgroup output location. Don’t assume; set this field.

3. Event-Driven Processing: S3 Triggers & Lambda

For true pipeline automation:

- S3 Event Notification: Configure S3 to emit an event (e.g.,

s3:ObjectCreated:Put) for your results prefix. - AWS Lambda: Lambda function processes the results—optionally loads them into Redshift, notifies stakeholders, or cleans up.

Config Example:

- S3 bucket with notification to Lambda ARN.

- Lambda function with IAM permissions to fetch from S3 and run required postprocessing.

Lambda Skeleton (Python 3.12 runtime):

import boto3

def handler(event, context):

for record in event['Records']:

bucket = record['s3']['bucket']['name']

key = record['s3']['object']['key']

# Example: trigger downstream job here

print(f"New Athena result: s3://{bucket}/{key}")

Operational Tips

- Use Compressed/Columnar Output: Set Athena output to

ParquetorORC. Example:

Reduces downstream cost by 5–10x per GB.ResultConfiguration={'OutputLocation': s3_output, 'OutputFormat': 'PARQUET'} - Rotate/Clean Orphan Results: Use S3 lifecycle rules to expire old objects; Athena doesn’t GC failed queries.

- Access Control: Lock output prefix to known principals. Broad S3 write access can lead to corruption or data exfiltration.

- Logging: Enable S3 server access logs for auditing.

Known Issues & Trade-offs

- Large Result Sets: Queries exceeding S3 multipart upload limits (5GB/object) will fail; for partitions, use CTAS to generate multiple files.

- Cost Traps: Retaining all historical results in S3 Standard is expensive—set lifecycle to Glacier or Intelligent-Tiering where appropriate.

- Workgroup Collisions: Default workgroup output settings can override

ResultConfigurationif misconfigured.

Recap

Direct, programmatic export of Athena query results to purpose-built S3 locations is standard practice for production-grade AWS data platforms. It’s repeatable, auditable, script-friendly, and minimizes human error. Event-based triggers with Lambda unlock full end-to-end automation—though monitoring and resource clean-up require diligence.

Alternative: For ad-hoc needs, consider Athena’s built-in “Download results” from the console, but this isn’t recommended for production.

Practical tip: Occasionally, manual inspection of raw S3 results reveals schema drift or serialization bugs not caught by downstream consumers. Always verify the first few exports in a new pipeline.

If you require modular DAG-based orchestration (e.g. with AWS Step Functions), adapt the above logic—Lambda can hand off success/failure to a Step Functions state machine for complex error handling and branching.