How to Seamlessly Automate Data Sync from GCP Cloud SQL to BigQuery Without Dumping CSVs

Forget the old export-import hustle—master a streamlined, automated pipeline using native GCP tools to keep your BigQuery datasets fresh and reliable without a single manual step.

Data is at the heart of every data-driven organization. However, ensuring that data from your transactional database (Cloud SQL) flows into your analytics engine (BigQuery) efficiently, timely, and reliably can sometimes be a headache. Exporting data as CSVs manually, uploading them to Cloud Storage, and then importing into BigQuery is slow, error-prone, and far from scalable.

In this post, I'll guide you through how to automate the synchronization of data between Google Cloud SQL and BigQuery seamlessly, without the cumbersome CSV export-import routine. This approach eliminates manual handling, speeds up your analytics workflow, and ensures decision-makers always have fresh insights at their fingertips.

Why Automate Data Sync from Cloud SQL to BigQuery?

- Speed: No more waiting for manual exports.

- Reliability: Automated jobs reduce human error.

- Near Real-Time Insights: Your BigQuery datasets stay updated without lag.

- Cost Efficiency: Reduce storage costs by avoiding intermediate CSVs.

- Simpler Maintenance: One source of truth with automated pipelines.

How Does This Work?

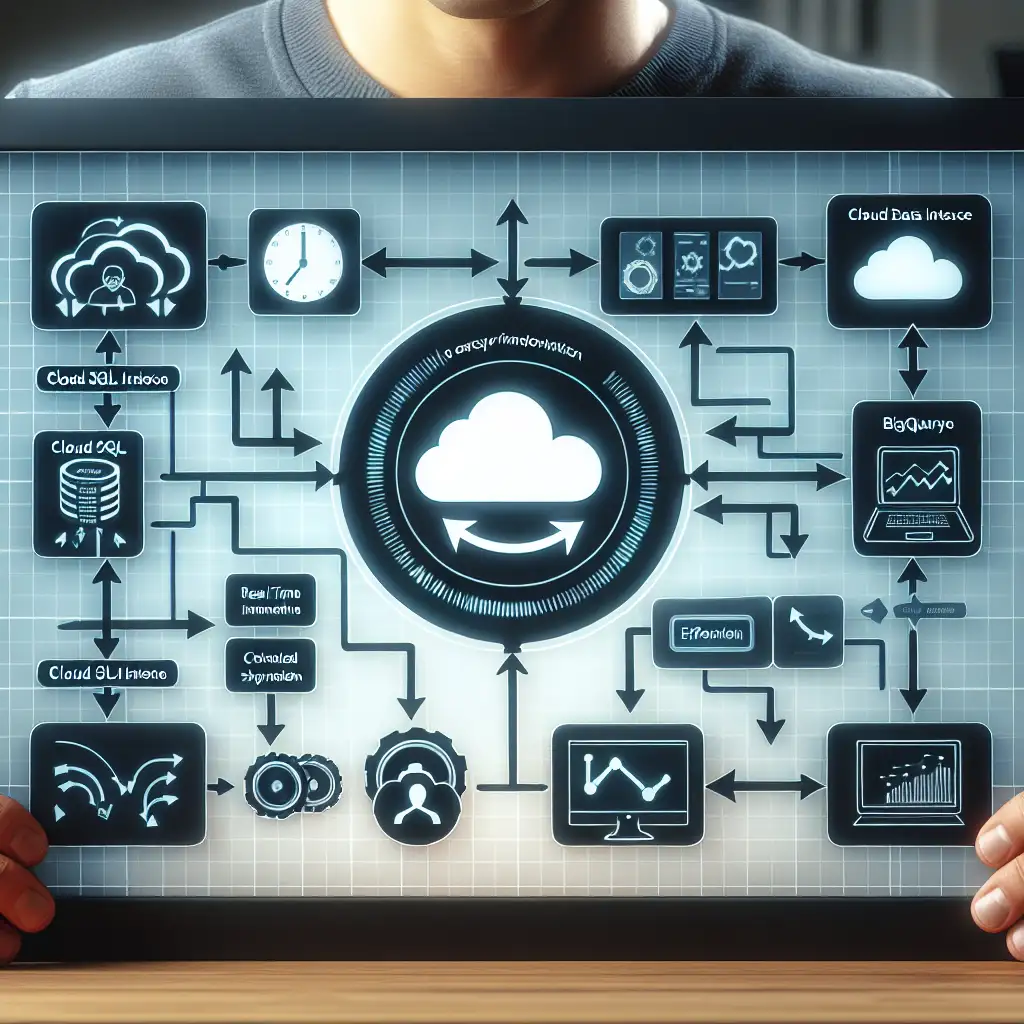

We’ll leverage native GCP products to build a pipeline that pushes data updates directly from Cloud SQL to BigQuery:

Common Approaches (and why we avoid CSV)

| Approach | Pros | Cons |

|---|---|---|

| Manual CSV Export/Import | Easy for small datasets | Tedious, error-prone |

| Scheduled Cloud SQL Export + GCS -> BigQuery Load | Automatable | Latency; extra storage cost |

| Direct Streaming via Dataflow or Datastream | Near real-time; scalable | Requires some setup & expertise |

Our goal: avoid dumping intermediate CSV files altogether by using Google Cloud Datastream + Dataflow or Cloud SQL federated queries in BigQuery, depending on your needs.

Method 1: Use Datastream + Dataflow for Change Data Capture (CDC)

This is ideal for continuously syncing live transactional changes from Cloud SQL (MySQL or PostgreSQL) into BigQuery.

Step-by-Step Guide

1. Enable APIs

Make sure these APIs are enabled in your GCP project:

- Datastream API

- Dataflow API

- BigQuery API

2. Configure Datastream Source & Destination

- Source: Your Cloud SQL instance (MySQL or PostgreSQL)

- Destination: A BigQuery dataset or a Cloud Storage bucket temporarily (Datastream currently supports GCS as destination)

Datastream captures change events (inserts/updates/deletes) as they happen in the source DB.

3. Set Up Dataflow Pipeline

Google provides a Dataflow template called Datastream to BigQuery which reads CDC logs from GCS and applies them to BigQuery tables incrementally.

# Example CLI command snippet to launch the Dataflow job:

gcloud dataflow jobs run my-datastream-pipeline \

--gcs-location gs://dataflow-templates/latest/Datastream_to_BigQuery \

--region=us-central1 \

--parameters=\

inputFilePattern=gs://datastream-bucket/path-to-cdc-files/*,\

outputTable=myproject:mydataset.mytable,\

bigqueryLoadingTemporaryDirectory=gs://my-temp-bucket/temp/

This pipeline applies ongoing DB changes directly into your target BigQuery table without needing CSV intermediates.

4. Query Fresh Data in BigQuery

Your target table mydataset.mytable will update near real-time with all new transactions committed in Cloud SQL.

Method 2: Federated Queries with Cloud SQL External Tables in BigQuery

If you need near real-time access but can tolerate slightly slower query performance for small datasets, federated queries connect BigQuery directly to Cloud SQL:

How It Works:

You create an external connection in BigQuery that references tables inside your Cloud SQL instance — allowing you to query live data using standard SQL without moving it.

CREATE CONNECTION my_cloudsql_conn

OPTIONS (

connection_type='cloud_sql',

cloud_sql_instance='my-project:us-central1:my-sql-instance',

database='mydatabase'

);

CREATE EXTERNAL TABLE `mydataset.external_table`

CONNECTION `my_cloudsql_conn`

OPTIONS (

table_name='customers'

);

-- Now you can query:

SELECT * FROM `mydataset.external_table` WHERE created_at > '2023-01-01';

Pros:

- Instant access to most recent data

- No duplication required

Cons:

- Query performance depends on the source DB

- Not suitable for very large joins or complex analytical workloads

When to Choose Which?

| Scenario | Recommended Approach |

|---|---|

| Need near-real-time sync on active DB | Datastream + Dataflow CDC pipeline |

| Ad-hoc queries on recent transactional data | Federated queries |

| Periodic batch sync tolerable | Scheduled exports/load jobs |

Bonus Tip: Keep Your Schema in Sync

Schema changes between Cloud SQL and BigQuery can cause headaches in syncing pipelines. Use automation tools like Liquibase or Flyway to version-control your database schema changes and make sure corresponding changes reflect in your BigQuery tables.

Or build automated schema update steps as part of your CI/CD pipeline for ETL workflows.

Summary

By leveraging Google Cloud’s native services like Datastream and Dataflow (or alternatively federated external tables), you can automate syncing data from Cloud SQL directly into BigQuery:

- Avoid manual CSV exports

- Eliminate file management overhead

- Enable faster analytics workflows with near real-time insights

- Make operational analytics reliable and scalable

Happy querying! Have questions or want me to cover setup details on any part? Drop a comment below or reach out via my socials!

Useful Links:

- Cloud Datastream Documentation

- Dataflow Templates - Datastream CDC

- BigQuery External Connections

- Cloud SQL overview

Streamline your data journey — don’t sweat the sync!