Optimizing Data Pipelines: Efficiently Loading AWS Glue Transformed Data into Amazon Redshift

Why Most AWS Glue to Redshift Data Transfers Fall Short — and How to Fix Them for Real-Time Analytics Success

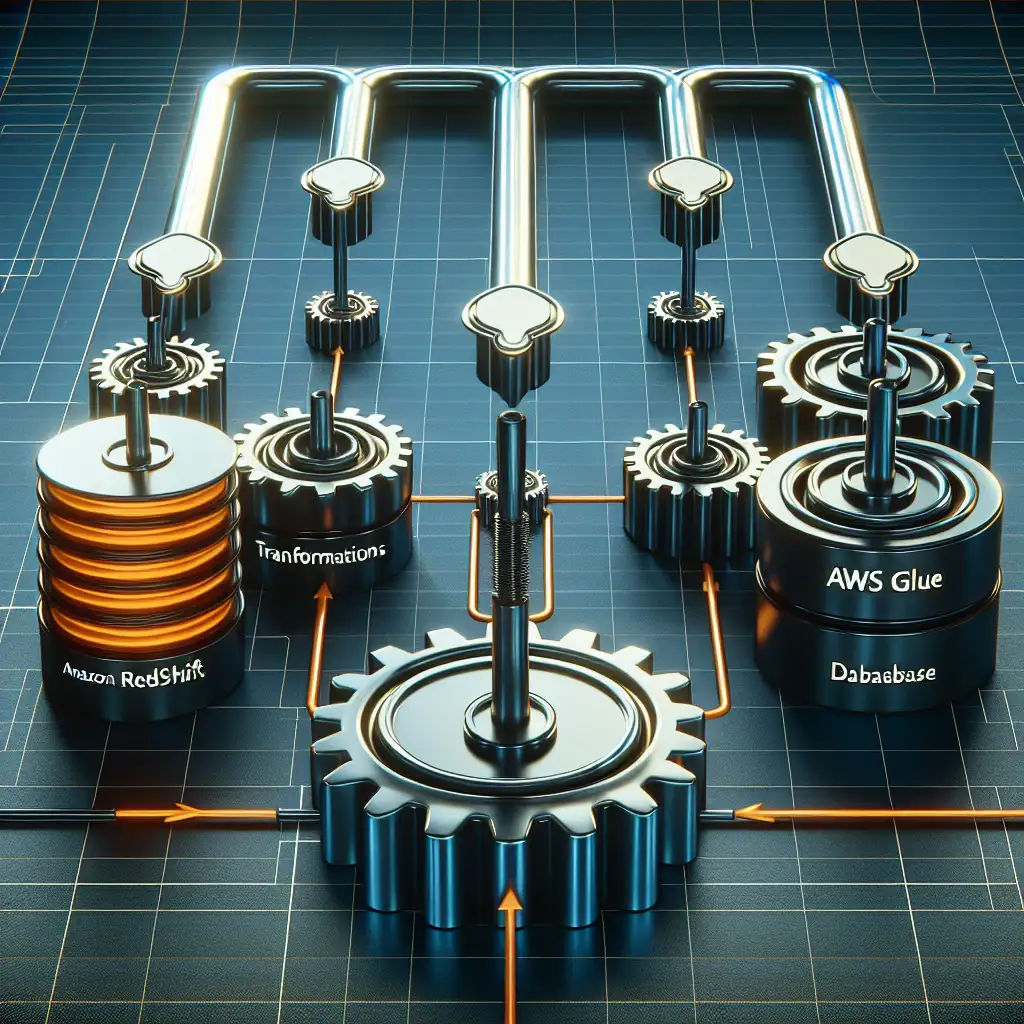

When building modern data warehouses, the integration between your ETL platform and your analytics database can make or break your data strategy. AWS Glue, a fully managed ETL service, is incredibly powerful for transforming raw data at scale. Amazon Redshift, on the other hand, powers fast, scalable analytics with SQL-based queries.

Yet despite their individual strengths, many organizations struggle with the “Glue to Redshift” data pipeline. The result? Bottlenecks in loading transformed data into Redshift that inflate costs and delay time-to-insight.

In this post, I’ll share practical ways to troubleshoot common pitfalls and optimize your Glue → Redshift workflows so your transformed datasets flow fast, fresh, and cost-effectively — ideal for real-time analytics scenarios.

Why AWS Glue to Redshift Pipelines Often Lag Behind

Before diving into solutions, let’s quickly unpack typical reasons why this integration misses the mark:

- Inefficient data loading strategies: Using suboptimal loading methods like single-threaded inserts rather than leveraging bulk loads.

- Ignoring data distribution styles: Resulting in skewed workloads in Redshift that slow down query performance.

- Underutilized Glue job orchestration options: Lack of tuning job concurrency and partition awareness increases latency.

- Skipping compression and format optimizations: Raw formats slow down reads/writes unnecessarily.

Best Practices for Smooth Glue → Redshift Data Loads

1. Use Amazon S3 as an Intermediate Staging Area

AWS Glue excels at transforming data and dumping it as optimized files (like Parquet) to S3. Instead of writing directly to Redshift tables via JDBC inserts—which is painfully slow—load your processed datasets first into S3.

Example:

# Inside an AWS Glue ETL script using PySpark

datasource = glueContext.create_dynamic_frame.from_catalog(database="sales_db", table_name="raw_orders")

transformed_dyf = ApplyMapping.apply(frame=datasource,

mappings=[("order_id", "string", "order_id", "string"),

("amount", "double", "amount", "double")])

# Write transformed data as Parquet files partitioned by date

glueContext.write_dynamic_frame.from_options(frame=transformed_dyf,

connection_type="s3",

connection_options={"path": "s3://my-bucket/transformed/orders/",

"partitionKeys": ["order_date"]},

format="parquet")

Staging transformed datasets in S3 sets the stage for high-throughput bulk loads into Redshift using the COPY command.

2. Bulk Load Data into Redshift Using the COPY Command

Redshift’s native COPY command can ingest large batches directly from S3 in parallel—dramatically faster than single inserts.

SQL Example:

COPY sales.orders

FROM 's3://my-bucket/transformed/orders/order_date=2024-06-01/'

IAM_ROLE 'arn:aws:iam::123456789012:role/RedshiftCopyRole'

FORMAT AS PARQUET;

Key tips:

- Make sure AWS Glue writes highly compressed Parquet or ORC files.

- Use IAM roles with least privilege for efficient authorization.

- Partition your data by keys (e.g., date) so you can incrementally load only new partitions daily/ hourly.

Using COPY turns what would be hours of inserts into minutes of massively parallel loads.

3. Optimize Table Design and Distribution Styles in Redshift

Once loaded, query speed depends heavily on table design:

- Choose DISTKEYs based on join keys used most frequently in your analytics queries.

- Use SORTKEYs on timestamp or filter columns to accelerate queries.

- Leverage COMPOUND SORTKEYS if multiple filtering fields are common.

Aligning table schema with expected query patterns reduces data movement within Redshift clusters, reducing runtime drastically.

4. Schedule Incremental Loads Using Glue Triggers and Event-Based Orchestration

Glue workflows shouldn’t rerun entire ETL jobs every time. Instead:

- Use partition-awareness during transformations (filtering source datasets by date).

- Trigger Glue jobs via CloudWatch Events or Lambda based on new file arrivals in S3.

- Combine partitioned file loads with incremental COPY commands targeting only new partitions.

Example CloudWatch Event rule filters new CSV arrivals in an ingest bucket → trigger a Lambda that starts a Glue job transforming just those files → post-process triggers a COPY only for this partition.

This architecture reduces latency significantly—closer to real-time ingestion speeds.

5. Monitor Performance & Tune Job Resources Dynamically

AWS Glue allows you to configure worker types (Standard, G.1X/2X) and number of DPUs (Data Processing Units). Similarly, monitor Redshift cluster health & WLM queue waits:

- Scale up Glue DPUs temporarily for heavy loads.

- Use Amazon CloudWatch & AWS CloudTrail logs to identify bottlenecks.

- Tune WLM slots & concurrency limits in Redshift during peak loads.

Proactive monitoring enables smarter resource usage which cuts both cost and query times.

Wrapping Up: The Optimized Flow at a Glance

- Extract & transform with AWS Glue, write compressed Parquet files partitioned by date/keys into S3

- Use Redshift COPY commands for fast bulk loading from those partition folders

- Design tables optimized for query patterns, selecting appropriate DISTKEY & SORTKEY

- Incrementally load only fresh partitions, triggered via event-driven workflows

- Continuously monitor execution times and scale resources dynamically

Final Thoughts

Most performance issues stem from trying to naively connect ETL directly to Redshift without leveraging staging in S3 + bulk copy strategies plus smart job orchestration.

By using the proven combination of AWS Glue + optimized storage + native Redshift ingestion features, you can build low-latency pipelines feeding near real-time dashboards — critical when every second counts in decision-making.

Ready to unlock high-performance analytics with AWS? Start optimizing your integration today following these best practices!

If you found this useful or have questions about your own architecture, feel free to leave a comment below or reach out!

Happy Data Engineering! 🚀