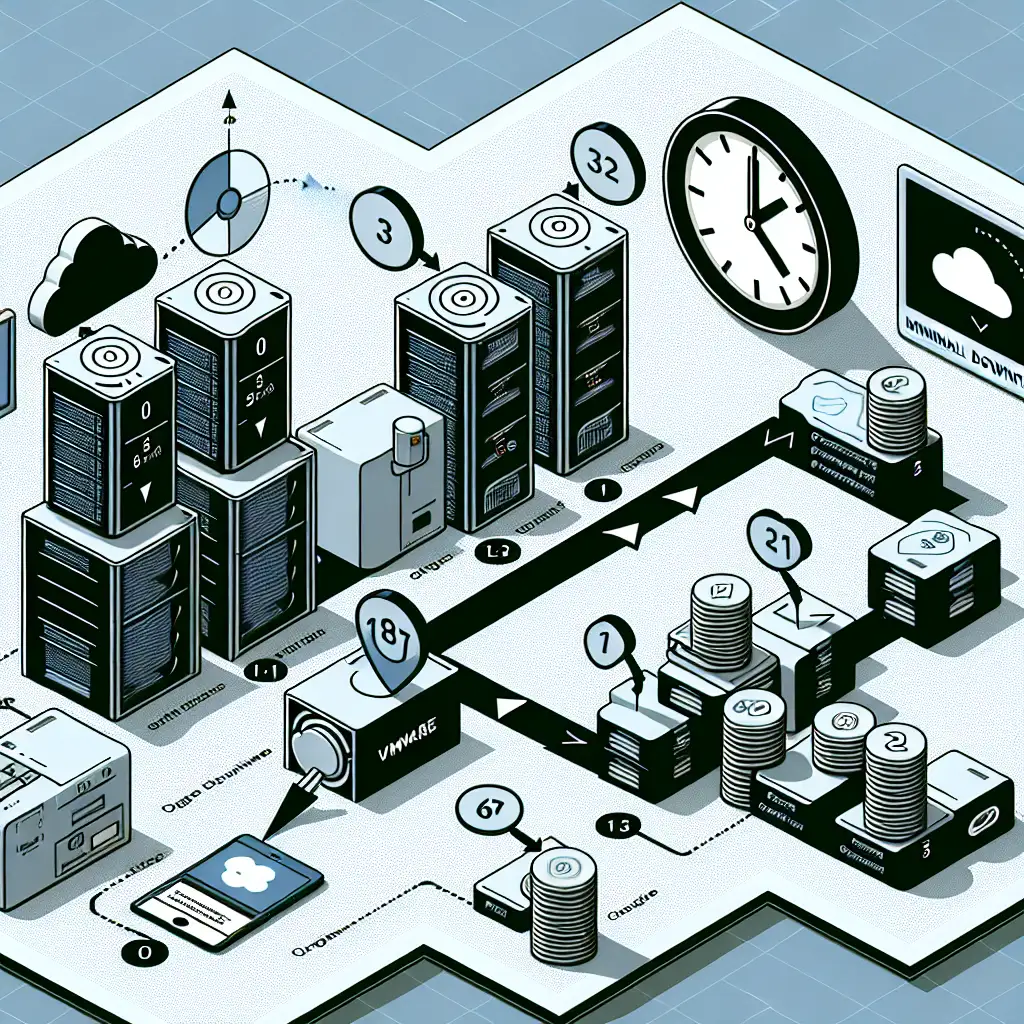

Migrating VMware Workloads to Google Cloud: Proven Patterns for Minimal Downtime

On-premises VMware stacks offer stability, but come up short when rapid elasticity, integrated AI, or global scale become business demands. Migrating to Google Cloud—especially via Google Cloud VMware Engine (GCVE) or Migrate for Compute Engine—enables operational agility and OPEX savings, with the caveat that mismanaged downtime can compromise revenue or reputation.

Broad advice rarely addresses the uncomfortable truth: one misstep in sequencing or testing, and you’re troubleshooting production incidents on a weekend. The following process reflects battle-tested steps honed on real migrations, not vendor slides.

Assessment: No Two Workloads Are Alike

Blind migration is a recipe for pain. Start with a detailed workload inventory—VM specs (CPU/memory/disk), OS versions (e.g., Windows Server 2016, RHEL 8.x), app interdependencies, and network topology diagrams. Use automated tools where possible (Google Migrate for Compute Engine v6.1+, RVTools).

Tabulate critical info. Example:

| VM Name | OS | vCPUs | RAM (GB) | Storage | Dependencies | SLA |
|---|---|---|---|---|---|---|
| ERP-DB01 | RHEL 8.5 | 16 | 128 | 2 TB | SAP App, NFS share | 99.99% |
| Web-Store1 | Win2019 | 8 | 32 | 500 GB | SQLCluster, Redis | 99.9% |

Gotcha: Don’t ignore “hidden” dependencies—print servers, internal HTTP proxies, or cron jobs on adjacent VMs.
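
One way to make the dependency column actionable is to record it as data and derive a migration order from it. The sketch below is illustrative only: the VM names come from the example table, `HTTP-Proxy` is an invented stand-in for a hidden dependency, and "migrate dependencies first" is an assumed policy rather than a rule.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical inventory: each VM maps to the services it depends on.
# "HTTP-Proxy" stands in for a hidden dependency found during assessment.
dependencies = {
    "ERP-DB01": {"SAP-App", "NFS-Share"},
    "Web-Store1": {"SQLCluster", "Redis", "HTTP-Proxy"},
}


def migration_waves(deps):
    """Group VMs into waves so each VM lands after everything it depends on."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = migration_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

A circular dependency raises an error instead of producing an order, which is a useful signal that those VMs probably need to move together in a single wave.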

Choosing the Migration Path

There are three main strategies, each with unique trade-offs:

- Lift-and-Shift (Rehost): Fastest route. VMs often migrate unchanged via Migrate for Compute Engine. Compile a list of unsupported configurations (e.g., nested virtualization, rare virtual hardware).

- Replatform: Minimal code changes (e.g., swapping out local disk for Filestore or Cloud SQL post-migration). Slightly longer cutover window.

- Refactor: Rewrite for GKE/Kubernetes or serverless. Out of scope for minimizing downtime—requires staged change management.

In most enterprise cases, lift-and-shift plus immediate VM-level health checks minimize outage risk. Don’t chase “cloud-native” if your app isn’t ready.

Networking: Secure Bandwidth and Route Planning

Network misconfiguration is a common way for a migration to fail. For large data volumes, Cloud VPN (up to roughly 3 Gbps per tunnel) becomes a bottleneck. For anything above 10 TB, or for high-IOPS databases, use Dedicated Interconnect (10 Gbps or more per link). Plan direct peering to reduce egress latency, and test for MTU mismatches early.

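Example VPC Network Peering setup (peering joins two VPC networks, so onprem-vpc here is assumed to be a VPC that fronts the on-prem link, not the on-prem network itself):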

gcloud compute networks peerings create onprem-gcp \
    --network=default \
    --peer-network=onprem-vpc \
    --auto-create-routes

“Minor” routing errors often kill push-button cutovers.
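
Overlapping address ranges are one routing error that is cheap to catch before cutover. The following is a minimal sketch using Python's standard `ipaddress` module; the CIDR ranges are made-up examples, and a real check would read them from your own topology diagrams or subnet exports.

```python
import ipaddress
from itertools import product


def find_overlaps(onprem_cidrs, cloud_cidrs):
    """Return (on-prem, cloud) CIDR pairs whose address ranges overlap."""
    overlaps = []
    for onprem, cloud in product(onprem_cidrs, cloud_cidrs):
        if ipaddress.ip_network(onprem).overlaps(ipaddress.ip_network(cloud)):
            overlaps.append((onprem, cloud))
    return overlaps


# Hypothetical ranges, for illustration only.
onprem_ranges = ["10.0.0.0/16", "192.168.10.0/24"]
cloud_ranges = ["10.0.1.0/24", "10.128.0.0/20"]

conflicts = find_overlaps(onprem_ranges, cloud_ranges)
for onprem, cloud in conflicts:
    print(f"Overlap: on-prem {onprem} collides with cloud {cloud}")
```

Any pair this reports needs to be renumbered or isolated before the two sides are connected, since overlapping ranges make routing between them ambiguous.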

Continuous Replication and Delta Sync

Continuous disk replication via Migrate for Compute Engine keeps the cloud copy close to the production data state right up to cutover. After the initial seeding, use incremental syncs rather than full clones.

- Live migration mode: Supports most OSes, but see [release notes]—Linux 5.10 kernel images may require agent updates.

- Schedule delta syncs during predictable lulls. For 250 GB VMs over a 1 Gbps link, expect ~35-40 minutes per full sync; increments <5 min.
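
The sync estimate can be sanity-checked with simple arithmetic. This is a rough sketch, not a model of how the replication tooling behaves: the 90% link efficiency and the 2% changed-data ratio are assumptions chosen for illustration.

```python
def transfer_minutes(size_gb, link_gbps, efficiency=0.9):
    """Minutes to move size_gb (decimal gigabytes) over a link_gbps link.

    efficiency is an assumed fraction of line rate actually achieved.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    gigabits = size_gb * 8  # bytes to bits
    seconds = gigabits / (link_gbps * efficiency)
    return seconds / 60


full = transfer_minutes(250, 1.0)          # full sync of a 250 GB VM
ideal = transfer_minutes(250, 1.0, 1.0)    # theoretical floor at line rate
delta = transfer_minutes(250 * 0.02, 1.0)  # assumed 2% of data changed

print(f"full sync:  {full:.1f} min")
print(f"line rate:  {ideal:.1f} min")
print(f"delta sync: {delta:.1f} min")
```

At line rate, 250 GB is 2,000 gigabits, or about 33 minutes on a 1 Gbps link, so a 35-40 minute figure corresponds to a link running at roughly 85-95% efficiency.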

Known issue: Active Directory and database servers write to disk constantly, so many blocks are “dirty” at each sync, which causes excessive sync churn.

Staging & Validation: The Hidden Project Sinkhole

Spin up VMs in an isolated GCP project subnet. Validate:

- Network ACLs match original security groups.

- Application components communicate—database ports, SSO endpoints, printer mappings.

- Simulate user traffic with tools like JMeter or custom scripts. Monitor via Cloud Logging (formerly Stackdriver Logging) for anomalies.

Typical overlooked error:

ERROR: Unable to reach license server at 10.0.1.22: TCP Timeout

Firewall tightening in staging causes surprises. Run full regression tests, not just “does it boot.”
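
A timeout like the license-server error above can be caught before regression testing with a simple TCP reachability sweep run from the staged VM. This is a minimal sketch: only the license server address comes from the error message; the ports and the second endpoint are hypothetical placeholders.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers timeouts, refusals, and unreachable hosts
        return False


def check_dependencies(endpoints, timeout=3.0):
    """Map each (name, host, port) endpoint to its reachability."""
    return {name: port_open(host, port, timeout) for name, host, port in endpoints}


# Hypothetical endpoint list; ports and the database address are assumptions.
ENDPOINTS = [
    ("license-server", "10.0.1.22", 27000),
    ("database", "10.0.1.30", 1521),
]
```

Calling `check_dependencies(ENDPOINTS)` from inside the staging subnet returns a name-to-boolean map. An open port only proves the firewall path works, so it complements application-level regression tests rather than replacing them.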

Controlled Cutover: Speed with Precision

Schedule cutovers off-hours; a Friday 11:59 pm window is common, depending on your regional usage patterns. Sequence:

- Notify & freeze: Announce downtime to stakeholders 24h in advance. Place database/app servers in maintenance mode.

- Final delta sync: Use migrate tools to transfer the last few MB/GB of changed data.

- DNS switch: TTL=60s recommended hours before cutover.

- Power down on-prem VMs. Validate shutoff—sometimes, tools report “powered off” prematurely.

- Power up Google Cloud VMs and monitor health/service endpoints.

Downtime with this process: usually 5-20 minutes per application, depending on backend services and traffic.
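
The sequence above lends itself to a scripted runbook that halts at the first failed step instead of pressing on. The sketch below shows only the control flow; every action is a placeholder lambda standing in for a real script or API call, and the `Step` and `run_cutover` names are invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Step:
    name: str
    action: Callable[[], bool]  # returns True on success


def run_cutover(steps: List[Step]) -> Tuple[bool, List[str]]:
    """Run steps in order and stop at the first failure.

    Returns (succeeded, names of completed steps) so the operator knows
    how far the cutover got before deciding whether to roll back.
    """
    completed = []
    for step in steps:
        print(f"-> {step.name}")
        if not step.action():
            print(f"FAILED at '{step.name}'; halting")
            return False, completed
        completed.append(step.name)
    return True, completed


# Placeholder actions that always succeed; replace with real checks.
steps = [
    Step("Notify and freeze", lambda: True),
    Step("Final delta sync", lambda: True),
    Step("DNS switch", lambda: True),
    Step("Power down on-prem VMs and verify", lambda: True),
    Step("Power up Google Cloud VMs and check health", lambda: True),
]

ok, done = run_cutover(steps)
print("cutover complete" if ok else f"stopped after: {done}")
```

Returning the list of completed steps keeps the rollback decision explicit: the operator sees exactly which changes are already live when something fails.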

Case Example: Multi-Site Retail ERP Migration

A national retailer migrated their SAP-based ERP with ~2TB Oracle backend to Google Cloud using the above methods:

- Assessment: Peak traffic during end-of-month reporting; picked the absolute trough based on six months of logs.

- Replication: 96 hours of initial disk sync; two delta windows. One mysterious backup process increased changed data rate—debugged via verbose logging.

- Testing: Isolated UAT environment deployed; found a WinRM timeout due to stale Kerberos tickets post-migration.

- Cutover: Actual outage was 11 minutes. End-user tickets: zero.

Pro Tips and Non-Obvious Pitfalls

- Script everything. Manual steps breed mistakes. We used Ansible for GCP-side first-boot hardening.

- Rollback is real. Take pre-cutover snapshots using gcloud compute disks snapshot. Disk IDs change post-migration; scripts must account for it.

- Monitor quotas. GCP project-level quotas (CPU, IP addresses) can silently block cutover if overlooked.

- Log everything. Console logs from Migrate for Compute Engine frequently surface silent failures:

[WARN] Persistent disk resize failed - insufficient IAM permissions

- Alternative: For highly stateful or latency-sensitive workloads (e.g., transactional DBs), native replication or hybrid architectures sometimes trump “lift-and-shift.” Evaluate fit case by case.
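
The quota tip is easy to turn into a pre-cutover check. The sketch below compares what the incoming VMs need against remaining headroom. All numbers are hypothetical: the required figures simply total the two VMs from the example table, and the metric names are illustrative labels for values you would pull from the project's quota page or API.

```python
def quota_shortfalls(limits, usage, required):
    """Return {metric: missing amount} for quotas the cutover would exceed."""
    shortfalls = {}
    for metric, needed in required.items():
        available = limits.get(metric, 0) - usage.get(metric, 0)
        if needed > available:
            shortfalls[metric] = needed - available
    return shortfalls


# Hypothetical project limits and current usage.
limits = {"CPUS": 72, "IN_USE_ADDRESSES": 8, "DISKS_TOTAL_GB": 4096}
usage = {"CPUS": 40, "IN_USE_ADDRESSES": 6, "DISKS_TOTAL_GB": 1000}

# Needs of the incoming VMs: 16 + 8 vCPUs and 2 TB + 500 GB of disk,
# plus an assumed 4 IP addresses.
required = {"CPUS": 24, "IN_USE_ADDRESSES": 4, "DISKS_TOTAL_GB": 2500}

missing = quota_shortfalls(limits, usage, required)
for metric, amount in missing.items():
    print(f"Request {amount} more of {metric} before cutover")
```

Running a check like this days ahead matters more than the code itself, since quota increases may take time to be approved.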

Migrating VMware workloads to Google Cloud is not trivial, but it need not be perilous if approached with discipline and a willingness to rehearse. The upfront time spent analyzing, replicating, and staging pays off on migration day, when everything is measured in minutes, not hours.

Start with a non-critical workload, iterate, and don’t skimp on post-migration monitoring. Plenty of imperfect scripts get the job done—perfection is not required; predictability is.

Share lessons learned from your own migration runbooks, or highlight any not-so-obvious blockers you encountered.