There’s an old joke in tech: “If you can’t fix it, feature it.” Funny thing is, in software architecture, that joke sometimes turns into policy.

Lots of teams start with a bold mission: break up the monolith, go full microservices, chase agility, scale, and faster deploys. But many end up right where they began—only now they're older, a little more tired, and buried in YAML files.

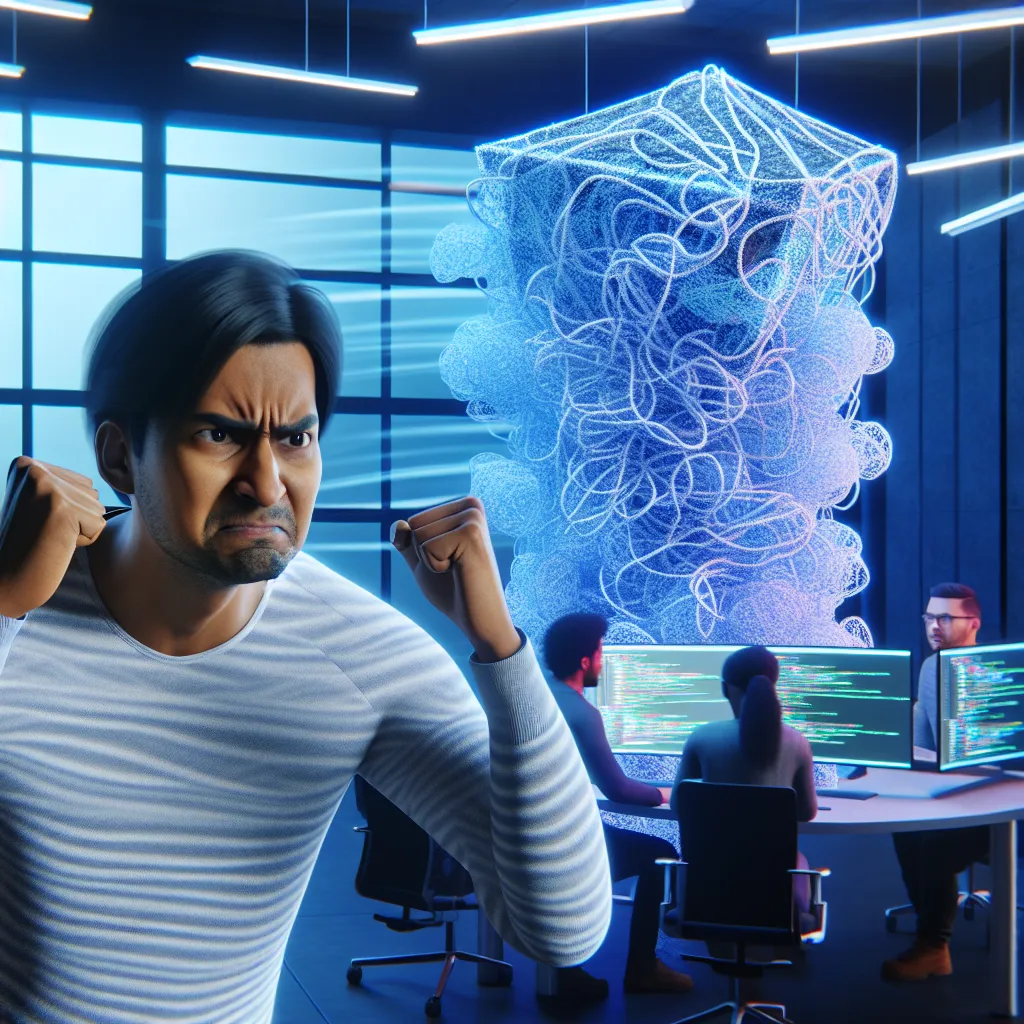

This is the story of those journeys. Not the polished success stories you hear at conferences. The messy middle 80% no one talks about. What went wrong. Why it happened. And how to tell if microservices are just digging your team deeper into tech debt.

The Dream: Breaking Free

It always starts the same way. A shared frustration.

- Releases are slow.

- Code is tangled.

- A traffic spike takes everything down.

Then someone stands up and says it: “Let’s move to microservices.”

The theory sounds great:

- Teams work independently.

- Deploys are quicker.

- Code is modular.

- You only scale what you need.

But reality? It’s rarely that clean.

Case Study #1: When E-Commerce Met Chaos

Let’s talk about “Shopify-ish.” Mid-sized, decent traffic, steady monolith. Then a TikTok post goes viral. Boom—servers melt.

The solution? Microservices, of course.

“They’ll make us resilient.”

Eighteen months later, here’s the score:

- Planned services: 10

- Actual services: 50+

- Deployments? Slower

- Productivity? All over the place

- Incidents? Way up

The trusty old pm2 restart was replaced by a tangled mess of Kubernetes configs. One change now meant coordinating across multiple teams, repos, and CI/CD pipelines.

```shell
# Monolith deploy
git pull origin main
npm install
npm run build
pm2 restart app.js
```

```yaml
# Microservice deploy (one manifest like this per service)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: product-service
spec:
  replicas: 3
  selector:
    matchLabels:
      app: product
  template:
    metadata:
      labels:
        app: product
    spec:
      containers:
        - name: product-service
          image: yourdockerhub/product:latest
          ports:
            - containerPort: 8080
```

Everything was technically "decoupled." But practically? A mess. No cohesion. Endless friction.
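A rough way to see why 50+ services felt so different from the planned 10 is to count the pairs of services that could, in principle, need to talk to or coordinate with each other. The sketch below assumes the worst case, where any service may depend on any other. Real systems rarely reach that ceiling, and `potential_links` is an illustrative helper, not something from the team's tooling.

```python
def potential_links(service_count: int) -> int:
    """Upper bound on distinct service-to-service pairs: n * (n - 1) / 2."""
    return service_count * (service_count - 1) // 2


# Compare the planned service count with what the team actually ended up with.
for count in (10, 50):
    print(f"{count} services -> up to {potential_links(count)} pairs")
```

At 10 services the ceiling is 45 pairs. At 50 it is 1,225. Five times the services, roughly twenty-seven times the places where a change can ripple.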

Case Study #2: Welcome to Stack Soup

Next up: “Code-Freeze Corp.” Their monolith was messy, but it worked. Tired of 3 A.M. deploys, they aimed for a sleek microservice world—PHP here, some Java there, and just a sprinkle of Python.

Within a year? Stack chaos.

Each team picked its own tools. No shared guidelines. Documentation never kept up. API contracts? Loose at best. Calls between services often broke without warning.
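To make "loose API contracts" concrete, here is a minimal sketch of the kind of check that was missing: a consumer writes down the fields and types it relies on, then tests a sample response against them. The contract, field names, and `contract_violations` helper are all hypothetical. A real team would more likely reach for a schema or contract-testing tool, but the idea is the same.

```python
# Hypothetical contract: the fields a consumer expects from a product response.
PRODUCT_CONTRACT = {"id": int, "name": str, "price_cents": int}


def contract_violations(payload: dict, contract: dict) -> list[str]:
    """Return human-readable problems; an empty list means the payload conforms."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems


# A provider quietly renamed price_cents to price and changed its type.
sample = {"id": 1, "name": "Mug", "price": "12.99"}
print(contract_violations(sample, PRODUCT_CONTRACT))
```

Run in the consumer's CI against the provider's latest sample response, a check like this turns a surprise production failure into a failed build.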

Deploy scripts turned into rituals of pain:

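```shell
# Microservice deploy (good luck)
for service in microservices/*/; do
  # Subshell: a failed deploy cannot strand the loop in the wrong directory
  (cd "$service" && npm run deploy) || echo "deploy failed: $service" >&2
done
```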

They did ship faster. Just... broken stuff. Their shiny agility? Mostly sprinting in circles.

The Real Issue: Architecture Reflects the Org

Here’s the part folks skip: microservices aren't just a tech choice. They’re an organizational one.

If your teams don’t:

- Own their code end-to-end

- Share a monitoring stack

- Automate reliable deploys

- Govern their APIs…

Then splitting your system won’t help. It just spreads the chaos wider.
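The four conditions above can be written down as an explicit checklist before any split is approved. This is only a sketch: the `TeamReadiness` fields mirror the list, the yes/no answers are something each team would have to assess honestly for itself, and none of the names come from an existing tool.

```python
from dataclasses import dataclass


@dataclass
class TeamReadiness:
    """One yes/no answer per prerequisite from the list above (illustrative)."""
    owns_code_end_to_end: bool
    shares_monitoring_stack: bool
    automates_reliable_deploys: bool
    governs_apis: bool


def missing_prerequisites(team: TeamReadiness) -> list[str]:
    """Names of the prerequisites the team has not met yet."""
    return [name for name, met in vars(team).items() if not met]


checkout_team = TeamReadiness(
    owns_code_end_to_end=True,
    shares_monitoring_stack=False,
    automates_reliable_deploys=True,
    governs_apis=False,
)
print(missing_prerequisites(checkout_team))
```

If the list comes back non-empty, that gap is probably a better next project than a new service.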

Tools like Docker, Kubernetes, Terraform—they don’t fix communication gaps. They amplify them.

So... Go Back to the Monolith?

Sometimes? Yeah.

Not because monoliths are trendy. But because they work. They're easier to test. Easier to deploy. Easier to reason about.

Some teams quietly stitch their services back into one codebase. Not a failure—just a survival strategy.

The truth is: architecture should match your team's maturity.

Decomposition makes sense at scale. But break things up too early, and you’ll spend more time wiring pieces together than building real features.

Final Thought: Be Honest About Your Pain

Microservices aren’t a shortcut to speed. They’re a tradeoff. You gain flexibility, sure—but you pay in complexity.

If your monolith is solid, easy to test, and simple to deploy?

That’s not legacy. That’s a win.

So next time someone says “We need microservices,” ask them this:

“Are we scaling success—or just running from a mess?”