Bridging the Gap: How DevOps Principles Need to Evolve for True MLOps Success

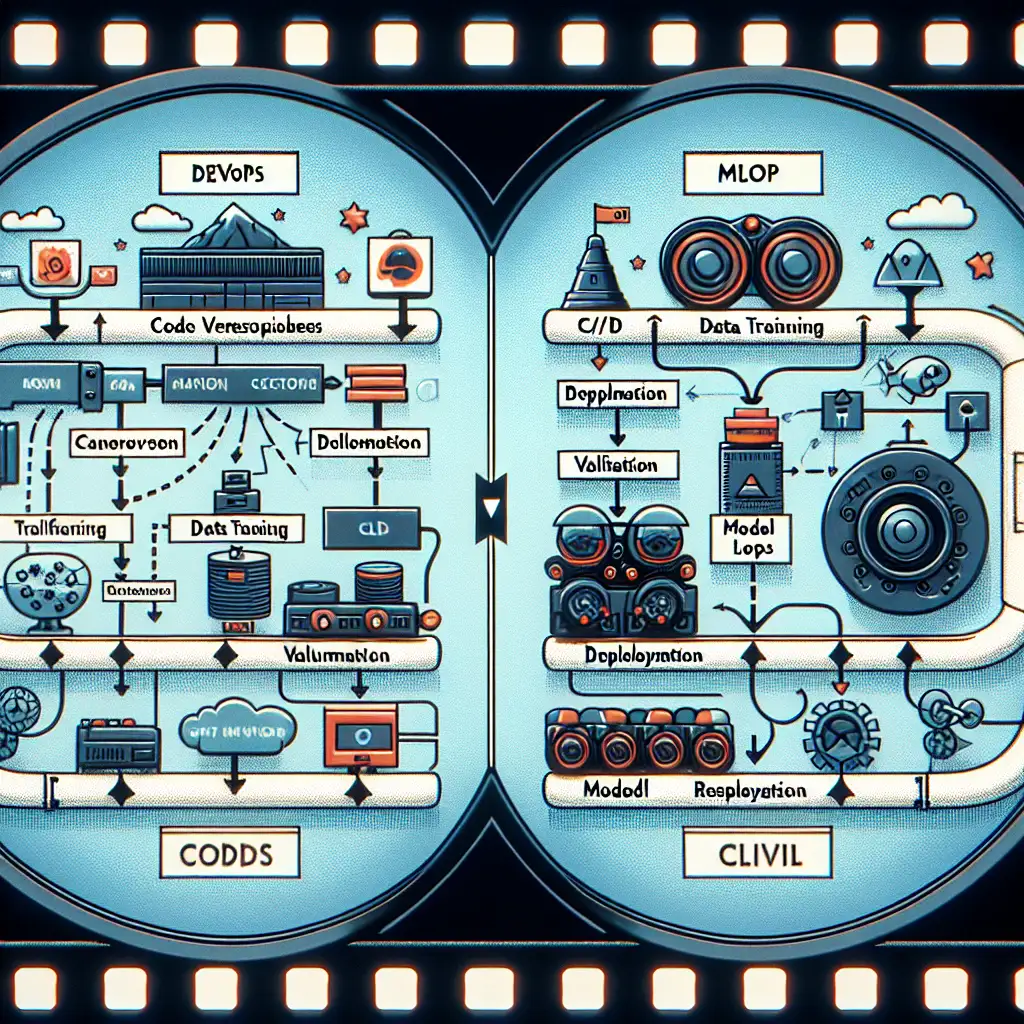

Why trying to shoehorn machine learning workflows into DevOps frameworks will slow your AI initiatives—and what a real MLOps approach looks like instead.

When organizations first began embedding AI into their product lines, many naturally turned to their tried-and-true DevOps frameworks to manage machine learning (ML) projects. After all, DevOps has been the gold standard for software development and deployment—reliable, iterative, and designed for continuous delivery in complex environments.

Yet, as many teams have painfully realized, simply applying traditional DevOps principles to ML pipelines often creates more bottlenecks than it eliminates. The crux of the problem? Machine learning engineering brings unique challenges that don’t neatly fit into classic DevOps molds.

So how do we bridge this gap? How can DevOps evolve to meet the demands of ML and drive true MLOps success? Let’s dive into practical insights and examples that you can start implementing today.

Why Traditional DevOps Falls Short for ML Workflows

DevOps excels with software code: build, test, deploy, monitor. It assumes code is deterministic: given the same inputs, the same build behaves the same way on every machine it is deployed to.

ML workflows break that assumption:

- Data Complexity: The input in ML isn’t just code; it’s data that changes constantly. Models are only as good as their training data.

- Non-deterministic Outputs: Even with identical code and data, models may perform differently because training is stochastic (random weight initialization, data shuffling, dropout, and some nondeterministic GPU operations).

- Model Versioning & Lineage: Tracking the exact training dataset, hyperparameters, model weights, and environment details is far more complex than version-controlling source code.

- Continuous Training & Feedback Loops: Models require ongoing retraining as data evolves, a recurring process rather than a one-off code release.

- Validation Challenges: Testing a model isn’t simply “does it compile?” but “does it generalize well on real-world data?”

Because of these factors, shoehorning ML pipelines into CI/CD tools built for traditional software leads to unreliable deployments, hard-to-reproduce results, and time-consuming debugging.
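To make the non-determinism point concrete, here is a toy sketch in plain Python. The function `train_linear` and its parameters are hypothetical, not from any library. It fits a one-variable linear model with stochastic gradient descent, and both the initial weights and the per-epoch shuffle order come from a seeded random generator. Reusing a seed reproduces the run exactly; changing it yields a different model from the same code and data.

```python
import random
from typing import List, Tuple


def train_linear(
    xs: List[float], ys: List[float], seed: int, epochs: int = 20, lr: float = 0.05
) -> Tuple[float, float]:
    """Fit y ~ w * x + b with plain SGD; all randomness flows from `seed`."""
    rng = random.Random(seed)                      # private generator, global state untouched
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random initialization
    data = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(data)                          # random sample order each epoch
        for x, y in data:
            err = (w * x + b) - y                  # prediction error for one sample
            w -= lr * err * x                      # gradient step on squared error
            b -= lr * err
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1

run_a = train_linear(xs, ys, seed=1)
run_b = train_linear(xs, ys, seed=1)
run_c = train_linear(xs, ys, seed=2)
print(run_a == run_b)  # same seed: identical weights
print(run_a == run_c)  # different seed: different weights (almost certainly)
```

In a real framework you would seed every generator involved (for example `random.seed`, `numpy.random.seed`, and `torch.manual_seed`) and log the seed with the run. Even then, some GPU kernels are nondeterministic, which is why reproducibility has to be designed in rather than assumed.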

Evolving DevOps Principles Into True MLOps Practices

1. Introduce Data-Centric Version Control

Traditional Git handles code well but struggles with massive datasets or binary artifacts like model files. Embrace tools like:

- DVC (Data Version Control): Commits small metadata files to Git that point to your datasets and model files, while the files themselves live in remote storage such as a cloud object store or a local directory.

- lakeFS or Pachyderm: Provide Git-like semantics for large-scale data lakes.

Example:

With DVC you can version control both your training data and model weights alongside your codebase:

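```shell
# Assumes the repo was set up with `git init` and `dvc init`, and that a default
# DVC remote is configured (e.g. `dvc remote add -d storage <url>`).
dvc add data/training_set.csv   # writes data/training_set.csv.dvc, updates data/.gitignore
dvc add models/model_v1.pkl     # writes models/model_v1.pkl.dvc, updates models/.gitignore

# Commit the small .dvc pointer files (not the data itself) to Git
git add data/training_set.csv.dvc data/.gitignore models/model_v1.pkl.dvc models/.gitignore
git commit -m "Add initial dataset and baseline model"

dvc push                        # upload the tracked data and model to the remote
```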

Anyone on the team can then check out the same commit and run `dvc pull` to fetch the exact dataset and model files it references, so experiments can be replicated with matching data and models.
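Conceptually, DVC builds on content addressing: each file is identified by a hash of its bytes, the large file goes into a cache keyed by that hash, and Git only tracks a tiny pointer recording the hash. The sketch below illustrates that idea with the standard library. It is a simplified illustration, not DVC's implementation or its `.dvc` file format; the `.ptr.json` pointer naming and the `track`/`restore` functions are hypothetical.

```python
import hashlib
import json
import shutil
import tempfile
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets never need to fit in memory."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def track(path: Path, cache_dir: Path) -> Path:
    """Copy a file into a content-addressed cache and write a small pointer file."""
    digest = file_md5(path)
    cached = cache_dir / digest[:2] / digest[2:]        # e.g. cache/3f/a9c...
    cached.parent.mkdir(parents=True, exist_ok=True)
    if not cached.exists():                             # identical content is stored once
        shutil.copy2(path, cached)
    pointer = path.with_name(path.name + ".ptr.json")   # this tiny file is what Git would track
    pointer.write_text(json.dumps({"path": path.name, "md5": digest}))
    return pointer


def restore(pointer: Path, cache_dir: Path) -> Path:
    """Recreate the exact file version a pointer refers to."""
    meta = json.loads(pointer.read_text())
    target = pointer.with_name(meta["path"])
    shutil.copy2(cache_dir / meta["md5"][:2] / meta["md5"][2:], target)
    return target


def demo() -> bool:
    """Track a file, overwrite it, then restore the tracked version."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        data = root / "training_set.csv"
        data.write_text("x,y\n1,0\n")
        pointer = track(data, root / "cache")
        data.write_text("x,y\n1,0\n2,1\n")              # the dataset changes later
        restore(pointer, root / "cache")
        return data.read_text() == "x,y\n1,0\n"         # original version is back
```

Because the pointer changes whenever the content changes, committing it alongside code ties each Git commit to one exact data version.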

2. Automate Model Training & Validation Pipelines

ML introduces multiple stages beyond just building artifacts:

- Data ingestion/validation

- Feature extraction/transformation

- Model training (often on GPUs)

- Evaluation against test sets

- Bias/fairness analysis

- Deployment readiness checks

Consider ML-aware orchestrators such as Kubeflow Pipelines, or Airflow extended with ML-specific operators, paired with a tracking and model registry tool such as MLflow, rather than generic Jenkins or CircleCI jobs.
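As an illustration of what the first stage, data validation, might check before any training starts, here is a minimal hand-rolled sketch. The `SCHEMA` format and the `validate_batch` helper are hypothetical; dedicated data-validation libraries offer far richer checks (distribution statistics, anomaly reports), but the principle of failing fast on bad input is the same.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema: column name -> (expected type, min, max)
SCHEMA: Dict[str, Tuple[type, float, float]] = {
    "age": (int, 0, 120),
    "income": (float, 0.0, 1e7),
}


def validate_batch(rows: List[Dict[str, Any]], schema=SCHEMA) -> List[str]:
    """Return human-readable problems; an empty list means the batch passes."""
    problems = []
    for i, row in enumerate(rows):
        missing = set(schema) - set(row)
        if missing:
            problems.append(f"row {i}: missing columns {sorted(missing)}")
        for col, (typ, lo, hi) in schema.items():
            if col not in row:
                continue
            value = row[col]
            # bool is a subclass of int in Python, so reject it explicitly
            if not isinstance(value, typ) or isinstance(value, bool):
                problems.append(f"row {i}: {col}={value!r} is not {typ.__name__}")
            elif not lo <= value <= hi:
                problems.append(f"row {i}: {col}={value!r} outside [{lo}, {hi}]")
    return problems


batch = [{"age": 34, "income": 52000.0}, {"age": -3, "income": 41000.0}, {"age": 51}]
for problem in validate_batch(batch):
    print(problem)
```

A pipeline would stop at this stage (and alert) whenever the returned list is non-empty, instead of silently training on corrupted data.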

Example flow:

```mermaid
graph TD;
    Dataset[Raw Dataset] --> Validation[Data Validation]
    Validation --> FeatureEng[Feature Engineering]
    FeatureEng --> Training[Model Training]
    Training --> Evaluation[Model Evaluation]
    Evaluation --> Decision{Performance Threshold Met?}
    Decision -- Yes --> Deploy[Deploy Model]
    Decision -- No --> Retrain[Retrain with Adjustments]
```

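This pipeline gates deployment on model quality: a candidate that misses the performance threshold goes back for retraining instead of being shipped. That evaluation gate, and the feedback loop behind it, has no counterpart in a pure DevOps pipeline.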
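The Decision node is the piece a generic CI job rarely has. Here is a minimal, framework-agnostic sketch of that gate; `train_until_threshold`, `toy_train`, and `toy_eval` are hypothetical names, and the toy "model" is just a cutoff rule so the control flow stays visible. In Kubeflow Pipelines or Airflow the same logic would typically be expressed as a conditional or branching step between evaluation and deployment.

```python
from typing import Any, Callable, Iterable, List, Optional, Tuple


def train_until_threshold(
    train_fn: Callable[[dict], Any],
    eval_fn: Callable[[Any], float],
    candidate_params: Iterable[dict],
    threshold: float,
) -> Tuple[Optional[Any], List[Tuple[dict, float]]]:
    """Train with each candidate setting in turn and return the first model whose
    evaluation score meets the threshold. Returns (None, history) if none pass,
    so the caller can refuse to deploy."""
    history: List[Tuple[dict, float]] = []
    for params in candidate_params:
        model = train_fn(params)        # Model Training
        score = eval_fn(model)          # Model Evaluation
        history.append((params, score))
        if score >= threshold:          # Performance Threshold Met? Yes:
            return model, history       # hand off to Deploy
        # No: retrain with the next adjusted setting
    return None, history


# Toy stand-ins (hypothetical): the "model" is a threshold classifier on one feature.
TEST_X = [0.1, 0.3, 0.6, 0.9]
TEST_Y = [0, 0, 1, 1]


def toy_train(params: dict) -> Callable[[float], int]:
    cutoff = params["cutoff"]
    return lambda x: int(x >= cutoff)


def toy_eval(model: Callable[[float], int]) -> float:
    correct = sum(model(x) == y for x, y in zip(TEST_X, TEST_Y))
    return correct / len(TEST_Y)


model, history = train_until_threshold(
    toy_train, toy_eval, [{"cutoff": 0.95}, {"cutoff": 0.7}, {"cutoff": 0.5}], threshold=0.9
)
print([score for _, score in history])  # [0.5, 0.75, 1.0]
print(model is not None)                # True: the third setting passes the gate
```

Keeping the full `history` is deliberate: failed attempts are evidence worth logging to your experiment tracker, not just noise to discard.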

3. Embrace Environment and Dependency Reproducibility

Dev environments must be identical across experiments to ensure reproducibility:

- Use containerization (Docker/Singularity) alongside package managers such as Conda or Poetry.

- Capture hardware-related dependencies explicitly (e.g., CUDA toolkit, cuDNN, and GPU driver versions).

- Take snapshots of library versions including ML frameworks (TensorFlow/PyTorch) in use.

Tip: Use tools like ReproZip or experiment tracking suites that log environment metadata automatically alongside results—making rollbacks and audits straightforward.
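As a rough illustration of the metadata such tools record, the function below (a hypothetical helper, not part of any tracking suite's API) snapshots the interpreter version, OS platform, and installed package versions using only the standard library. It also records the CUDA version PyTorch was built against if PyTorch happens to be installed; that part is optional and assumes PyTorch is your framework.

```python
import json
import platform
from importlib import metadata
from typing import Dict, Iterable, Optional


def capture_environment(packages: Optional[Iterable[str]] = None) -> Dict:
    """Snapshot interpreter, OS, and package versions for a training run.

    If `packages` is given, only those names are reported (None = not installed).
    """
    installed = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:                                  # skip broken or partial installs
            installed[name.lower()] = dist.version
    if packages is not None:
        installed = {p: installed.get(p.lower()) for p in packages}

    env = {
        "python_version": platform.python_version(),
        "python_implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "packages": dict(sorted(installed.items())),
    }

    # Optional: record CUDA details only if PyTorch is installed.
    try:
        import torch
        env["torch_cuda_version"] = torch.version.cuda   # None for CPU-only builds
        env["cuda_available"] = torch.cuda.is_available()
    except ImportError:
        pass
    return env


if __name__ == "__main__":
    # Store the snapshot next to the run's metrics so it can be audited later.
    print(json.dumps(capture_environment(["numpy", "torch", "scikit-learn"]), indent=2))
```

Saving this JSON with every run means a rollback or audit can compare environments field by field instead of guessing what changed.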

4. Monitor Models in Production Beyond Infrastructure

DevOps focuses heavily on uptime monitoring—CPU load, memory use, latency. MLOps requires additional observability layers tuned to model behavior:

- Performance drift detection (accuracy drop over time compared to benchmarks).

- Input data validation (check incoming data against the expected schema and detect shifts in feature distributions).

- Explainability alerts when feature importance changes unexpectedly.

Tools like Seldon Core, WhyLabs, or custom Prometheus exporters track these metrics post-deployment so you catch silent failures early.
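One common way to put a number on input drift is the population stability index (PSI), which compares how a feature's values fall into bins in a reference window (for example, the training data) versus recent production traffic. The sketch below is a plain-Python version with equal-width bins. The bin count, the epsilon for empty bins, and the often-quoted rule of thumb that a PSI above roughly 0.25 signals a significant shift are conventions to tune, not standards. PSI is one technique among several (Kolmogorov-Smirnov tests and KL divergence are alternatives) and not necessarily what the tools above implement.

```python
import bisect
import math
from typing import List, Sequence


def _bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Fraction of values per bin; out-of-range values are clamped to the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(
    reference: Sequence[float], current: Sequence[float], n_bins: int = 10, eps: float = 1e-6
) -> float:
    """PSI = sum over bins of (cur - ref) * ln(cur / ref)."""
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference values are constant; cannot build bins")
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(n_bins + 1)]  # bins span the reference range
    ref = _bin_fractions(reference, edges)
    cur = _bin_fractions(current, edges)
    psi = 0.0
    for r, c in zip(ref, cur):
        r, c = max(r, eps), max(c, eps)                   # avoid log(0) for empty bins
        psi += (c - r) * math.log(c / r)
    return psi


reference = [i / 100 for i in range(100)]       # e.g. feature values seen in training
similar = [(i + 0.5) / 100 for i in range(100)]
shifted = [0.9 + i / 1000 for i in range(100)]  # production values pile up at the top

print(population_stability_index(reference, similar))  # small: distributions match
print(population_stability_index(reference, shifted))  # large: flag for investigation
```

In production you would compute this per feature on a schedule and export the values (for example through a Prometheus exporter) so alerting rules can fire on sustained drift.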

Putting It All Together: A Practical Mini Case Study

Imagine you’re developing an image classification service integrated into a consumer app. Early on, you deploy models with a simple Jenkins pipeline that copies code and weights straight to production servers. It works until you hit these issues:

- One training run uses slightly different preprocessing—metrics fluctuate dramatically but no one notices until customers complain.

- Data scientists manually update training datasets with no record; rollback is guesswork.

- Models degrade silently as new user images diverge from original dataset distribution.

Then you evolve the process:

- Adopt DVC for dataset and model versioning, linked to Git commits.

- Replace the Jenkins jobs with Kubeflow Pipelines that automate full retrain/evaluate loops, triggered weekly or whenever a new batch of data arrives.

- Containerize environments, pinning Python packages and CUDA configuration so every run uses the same software stack, from dev machines to GPU nodes in cloud clusters.

- Add post-deployment monitoring that checks input distribution drift and tracks inference accuracy using user feedback signals.

The result? Predictable deployments with traceable lineage cut troubleshooting time (a 70% reduction in this scenario) and enable faster iteration cycles, so your AI initiatives accelerate instead of stalling.

Final Thoughts

The shift from traditional DevOps to true MLOps requires embracing the full lifecycle challenges unique to machine learning — not just deploying static binaries but managing dynamic models linked tightly with evolving data.

If you keep trying to force-fit ML pipelines into old-school DevOps boxes, expect frustration every step of the way.

Instead:

- Treat data as a first-class citizen alongside code.

- Build dedicated ML workflows automating retrain/validation loops.

- Enforce rigorous environment reproducibility standards.

- Monitor deployed models’ health beyond infrastructure metrics.

By evolving your principles this way, you’ll bridge the gap and unlock scalable AI deployments that drive real business impact in today’s competitive landscape.

What’s one pain point around deploying machine learning workflows you’ve struggled with? Drop a comment—I’d love to help brainstorm solutions tailored for your setup!