How to Architect Resilient Cloud-to-Cloud Integration Pipelines Without Vendor Lock-in

Forget the hype around one-size-fits-all cloud connectors. The real secret to sustainable cloud-to-cloud integration is designing resilient, decoupled pipelines that anticipate failure modes and keep your data flowing no matter the provider. As enterprises increasingly rely on multiple cloud providers, building integration pipelines that ensure uptime, data integrity, and flexibility is critical to maintaining operational continuity and avoiding costly vendor dependencies.

If you’re tasked with connecting applications across AWS, Azure, GCP, or other cloud platforms, read on for a practical guide on how to architect resilient cloud-to-cloud pipelines—without falling into vendor lock-in traps.

Why Avoid Vendor Lock-in in Cloud-to-Cloud Integration?

Vendor lock-in happens when your integration pipeline depends heavily on proprietary tools or services specific to one cloud provider. While this might speed up initial development, it greatly limits your flexibility to switch providers or adopt best-of-breed services elsewhere. In a multi-cloud world where outages happen, geopolitical restrictions evolve, and pricing models shift rapidly, lock-in can become a major business risk.

Principles of Resilient Cloud-to-Cloud Integration Pipelines

- Decouple via Open Standards and APIs: Use open protocols such as HTTPS/REST, gRPC, or AMQP for messaging instead of proprietary connectors wherever possible. For example, prefer RESTful APIs over AWS Lambda triggers or Azure Event Grid subscriptions, which are tightly coupled to their platforms.

- Design for Failure and Expect Provider Outages: Your pipeline should gracefully handle partial outages of any one cloud service by retrying operations, circuit-breaking calls, or shifting workloads dynamically. This prevents cascading failures that bring down entire workflows.

- Use Platform-Agnostic Middleware: Introduce middleware layers (e.g., Kubernetes clusters running on multiple clouds) or containerized message brokers like Kafka or RabbitMQ that abstract the underlying platform differences and provide uniform integration capabilities.

- Implement Idempotency and Data Integrity Checks: Ensure your pipeline supports idempotent operations so data processing can be retried without duplication. Include hashes or checksums to verify data integrity across cloud boundaries.

- Automate Infrastructure as Code (IaC) with Multi-Cloud Tools: Use Terraform configurations or Pulumi programs that target multiple clouds rather than relying solely on AWS CloudFormation or Azure Resource Manager (ARM) templates. Resource definitions remain provider-specific, but a single tool and workflow make it much faster to reprovision equivalent resources on another cloud.
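To make the "design for failure" principle more concrete, here is a minimal sketch of retries with exponential backoff combined with a circuit breaker. The names `CircuitBreaker`, `CircuitOpenError`, and `retry_with_backoff`, along with the thresholds and delays, are illustrative assumptions rather than part of any particular library; in production you would likely reach for a maintained resilience library instead.

```python
import random
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are being rejected."""


class CircuitBreaker:
    """Hypothetical breaker: opens after N consecutive failures."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; skipping call")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


def retry_with_backoff(func, attempts=4, base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Retry func with exponential backoff and full jitter."""
    for attempt in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # do not hammer a provider the breaker has given up on
        except Exception:
            if attempt == attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

A call to a remote provider would then be wrapped as `retry_with_backoff(lambda: breaker.call(send, event))`, where `send` is whatever function performs the request. Once the breaker opens, the retry loop stops immediately, which is the point at which a pipeline can switch to a fallback path.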

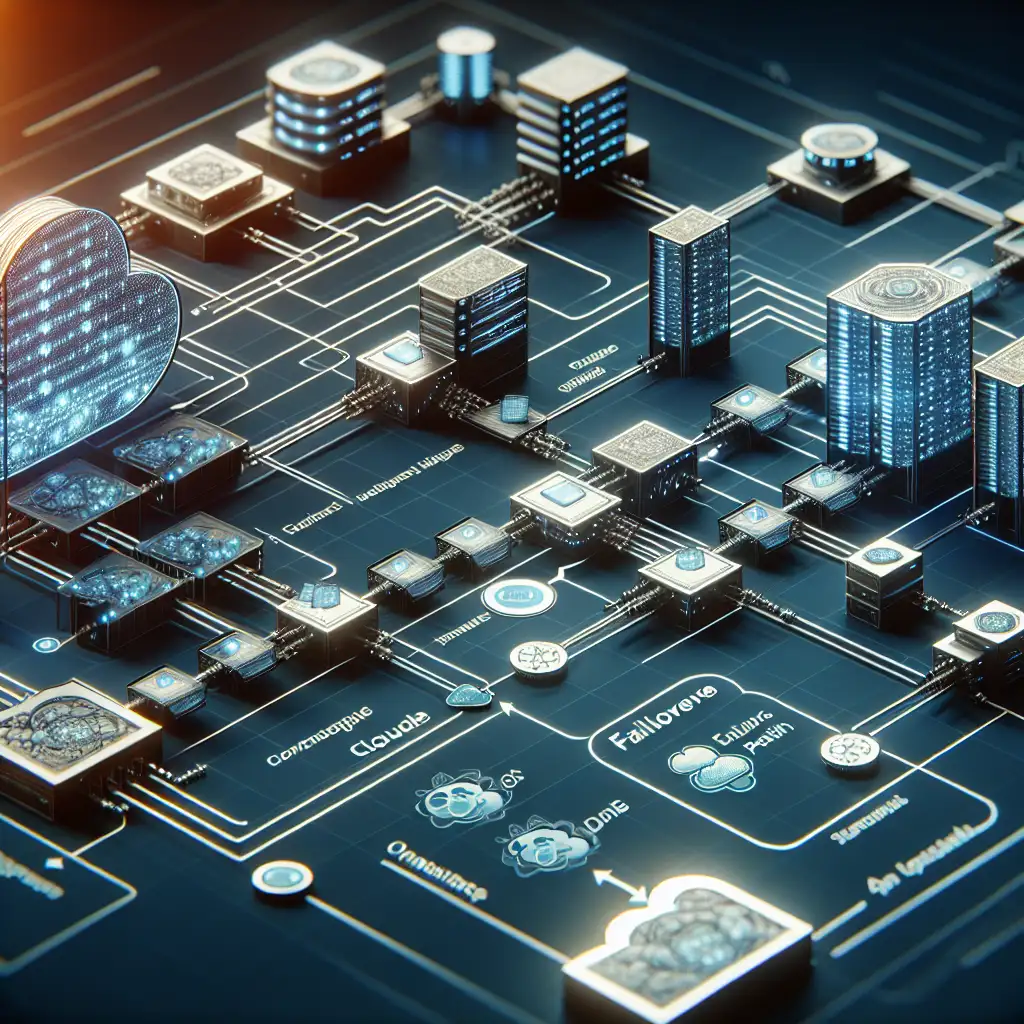
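The idempotency and integrity principle listed above can be sketched in a few lines. This example assumes each event carries a unique `event_id` and a SHA-256 `checksum` of its payload; those field names, the `IdempotentProcessor` class, and the in-memory `seen` set are hypothetical stand-ins (a real pipeline would keep processed IDs in a durable store).

```python
import hashlib
import json


def checksum(payload: dict) -> str:
    """SHA-256 over a canonical JSON encoding, so key order does not matter."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class IdempotentProcessor:
    """Applies each event at most once and rejects corrupted payloads."""

    def __init__(self, handler):
        self.handler = handler
        self.seen = set()  # assumption: replaced by a durable store in production

    def process(self, event: dict) -> bool:
        """Return True if the event was applied, False if it was a duplicate."""
        if checksum(event["payload"]) != event["checksum"]:
            raise ValueError(f"checksum mismatch for event {event['event_id']}")
        if event["event_id"] in self.seen:
            return False  # a retry or redelivery; safe to ignore
        self.handler(event["payload"])
        self.seen.add(event["event_id"])
        return True
```

Because the event ID is recorded only after the handler succeeds, a crash mid-processing leads to a retry rather than a silently dropped record, so the handler itself should also tolerate being run twice for the same payload.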

Sample Architecture Blueprint: Multi-Cloud Data Synchronization Pipeline

Suppose your enterprise uses Salesforce hosted in AWS but also employs a GCP-based analytics platform that requires near real-time customer updates. Here's how you could build a resilient integration:

- Data Extraction: Export change data from Salesforce via its open REST API.

- Message Bus: Publish changes into an Apache Kafka cluster deployed on Kubernetes spread across AWS and GCP availability zones.

- Transformation & Validation: Containerized microservices running in the Kubernetes cluster consume the Kafka topics, then cleanse and validate the records.

- Delivery: Validated events are forwarded via REST calls to the analytics platform's ingestion API on GCP.

- Fallback Logic: If the analytics endpoint is unreachable (detected through circuit breakers), processed events are stored temporarily in persistent storage buckets accessible from both clouds and replayed once the endpoint recovers.

- Reconciliation: Batch jobs run regularly and compare source Salesforce data with destination analytics records, using hashes to detect mismatches.

By adopting Kafka and Kubernetes, open-source tools that run on any major cloud, you avoid tight coupling to native services such as AWS EventBridge or GCP Pub/Sub, which can complicate failover or migration later.
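The reconciliation step in the blueprint could look roughly like the sketch below. It assumes both sides can be exported as `{record_id: record}` dictionaries; the function names and the report keys are hypothetical, and a real job would stream or page through records rather than load them all into memory.

```python
import hashlib
import json


def record_hash(record: dict) -> str:
    """Hash a canonical JSON form so field order does not cause false mismatches."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source: dict, destination: dict) -> dict:
    """Compare two {record_id: record} snapshots and report the differences."""
    src = {rid: record_hash(rec) for rid, rec in source.items()}
    dst = {rid: record_hash(rec) for rid, rec in destination.items()}
    shared = src.keys() & dst.keys()
    return {
        "missing_in_destination": sorted(src.keys() - dst.keys()),
        "unexpected_in_destination": sorted(dst.keys() - src.keys()),
        "mismatched": sorted(rid for rid in shared if src[rid] != dst[rid]),
    }
```

Comparing hashes instead of full records keeps the comparison cheap and means only record IDs and digests need to cross the cloud boundary, not the customer data itself.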

Tips for Building Your Own Resilient Pipeline

- Avoid Cloud-Specific SDKs Unless Abstracted: Use abstraction layers like the Apache Camel framework if you must leverage some native SDK features but want an easier path to portability later.

- Test Cross-Cloud Failover Regularly: Simulate outages by disabling connectivity or stopping components in one cloud and observe whether traffic automatically reroutes without data loss.

- Monitor End-to-End with Unified Observability Tools: Employ monitoring solutions compatible across clouds (Datadog, Prometheus + Grafana) so you get consistent metrics on latency, error rates, and throughput regardless of where components run.
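A failover drill can start as an in-process simulation before you run it against real infrastructure. The sketch below is one way to do that under stated assumptions: `FakeEndpoint` stands in for a remote ingestion API, the `spool` list stands in for a shared storage bucket, and all class and function names are hypothetical. It exercises a spool-and-replay fallback rather than real traffic rerouting.

```python
class FakeEndpoint:
    """Stand-in for a remote ingestion endpoint; flip `up` to simulate an outage."""

    def __init__(self):
        self.up = True
        self.received = []

    def send(self, event):
        if not self.up:
            raise ConnectionError("endpoint unreachable")
        self.received.append(event)


class Deliverer:
    """Sends events, spooling them locally whenever the endpoint is down."""

    def __init__(self, endpoint):
        self.endpoint = endpoint
        self.spool = []  # assumption: a durable bucket in a real pipeline

    def deliver(self, event):
        try:
            self.endpoint.send(event)
        except ConnectionError:
            self.spool.append(event)

    def replay(self):
        """Resend spooled events in order; stop at the first failure."""
        while self.spool:
            try:
                self.endpoint.send(self.spool[0])
            except ConnectionError:
                return
            self.spool.pop(0)


def run_failover_drill(events, outage_start, outage_end):
    """Take the endpoint down for events [outage_start, outage_end) and report the result."""
    endpoint = FakeEndpoint()
    deliverer = Deliverer(endpoint)
    for i, event in enumerate(events):
        endpoint.up = not (outage_start <= i < outage_end)
        if endpoint.up:
            deliverer.replay()  # drain the backlog first to preserve ordering
        deliverer.deliver(event)
    endpoint.up = True
    deliverer.replay()
    return endpoint.received, deliverer.spool
```

The drill passes when every event arrives exactly once and in order, and the spool is empty at the end. The same two checks carry over to a real drill, where the outage is induced by blocking network access or stopping components in one cloud.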

Conclusion

Building resilient cloud-to-cloud integration pipelines isn’t about chasing vendor-specific shortcuts; it’s a disciplined design practice grounded in decoupling systems, embracing open standards, handling failure gracefully, and maintaining strict data integrity controls. By leaning into multi-cloud middleware solutions and automating infrastructure provisioning across providers using unified IaC tools, organizations can future-proof their integrations against vendor lock-in risks—and confidently support mission-critical workflows wherever their clouds may reside.

Want to dive deeper? Next time I’ll walk through implementing event-driven microservices architectures spanning clouds—stay tuned! Meanwhile, drop questions or share your own integration challenges in the comments below.

Empower your integrations today: build pipelines as flexible as your business demands.