How to Streamline Real-Time Analytics by Exporting BigQuery Results to Pub/Sub

Forget batch exports — discover how pushing BigQuery query results directly into Pub/Sub transforms your data flow into a live stream of actionable intelligence, redefining responsiveness in data-driven applications.

In today’s fast-paced data landscape, waiting for periodic batch jobs to complete before analyzing insights can cost your business crucial time. What if you could get those analytics results as they happen, creating a seamless bridge between your analytics layer and real-time messaging infrastructure?

By exporting BigQuery query results directly into Google Cloud Pub/Sub, you can build dynamic data pipelines that make your analytics truly real-time — enabling you to react proactively and gain competitive advantages faster.

In this post, I’ll walk you through why and how to set up this powerful integration, complete with practical tips and code examples.

Why Export BigQuery Results to Pub/Sub?

BigQuery shines at analyzing massive datasets with speed and SQL familiarity. But its standard workflow typically involves running queries on schedule or on-demand, then exporting results to storage or downstream systems as batch files.

That approach introduces latency between when the data is updated/analyzed and when downstream services can act on those results.

Pub/Sub, on the other hand, is Google Cloud’s scalable messaging service designed for real-time event streaming. Connecting BigQuery output directly to Pub/Sub means analytics results become messages published instantly, feeding workflows such as:

- Real-time dashboards

- Trigger-based alerting

- Up-to-the-second recommendation engines

- Event-driven workflows in Dataflow or Cloud Functions

This real-time feed eliminates bottlenecks and lets you build smarter, more interactive applications.

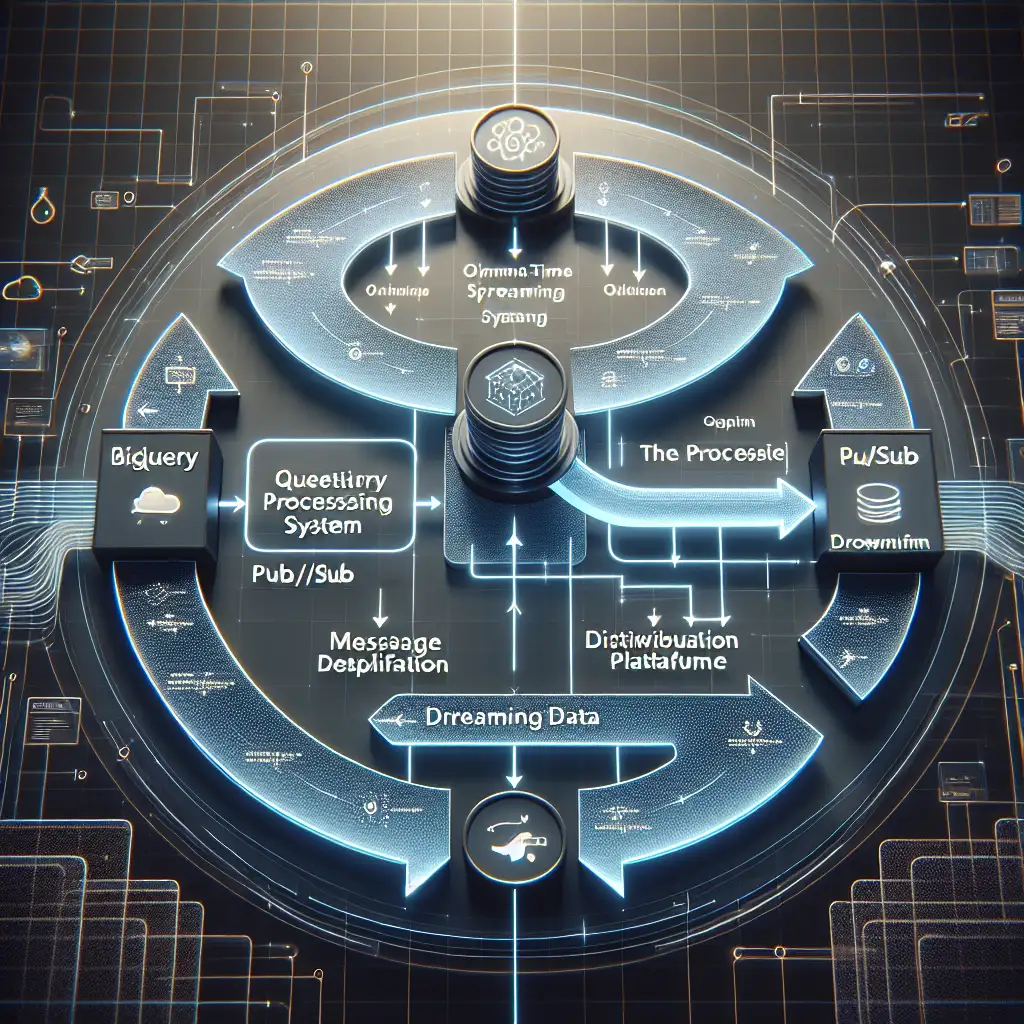

How It Works: The Integration Overview

At a high level, this setup involves:

- Running a BigQuery query that computes insights or transforms source data.

- Exporting the query result rows as messages into a Pub/Sub topic.

- Subscribing downstream systems (e.g., streaming pipelines, microservices) to that topic for immediate consumption.

BigQuery itself doesn’t natively push query results to Pub/Sub out of the box—but you can automate this process by orchestrating queries and publishing rows programmatically using client libraries.

Step-by-Step Guide: Exporting BigQuery Query Results into Pub/Sub

Prerequisites:

- A Google Cloud project with billing enabled.

- BigQuery dataset and tables with your data.

- A Pub/Sub topic created to receive messages.

- Permissions: Your service account needs both BigQuery Data Viewer and Pub/Sub Publisher roles.

- Installed Google Cloud SDK (

gcloud) and Python 3 environment (or any language SDK support).

Step 1: Create a Pub/Sub Topic

Use the console or the command line:

gcloud pubsub topics create bigquery-results-topic

Step 2: Write a Python Script to Query BigQuery and Publish Results

Here’s an example script that runs a SQL query in BigQuery and publishes each row as a JSON message in Pub/Sub:

from google.cloud import bigquery

from google.cloud import pubsub_v1

import json

# Initialize clients

bq_client = bigquery.Client()

publisher = pubsub_v1.PublisherClient()

# Full path of your Pub/Sub topic

project_id = "your-project-id"

topic_id = "bigquery-results-topic"

topic_path = publisher.topic_path(project_id, topic_id)

# Your BigQuery SQL query

query = """

SELECT user_id, event_type, event_timestamp

FROM `your-dataset.your_table`

WHERE event_timestamp >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 10 MINUTE)

"""

def run_and_stream():

# Run the query synchronously; for large datasets consider async jobs & pagination

query_job = bq_client.query(query)

results = query_job.result()

for row in results:

# Convert Row object to dict then to JSON string

message_json = json.dumps({

"user_id": row.user_id,

"event_type": row.event_type,

"event_timestamp": row.event_timestamp.isoformat()

})

# Publish message (must be bytes)

future = publisher.publish(topic_path, message_json.encode("utf-8"))

print(f"Published message ID: {future.result()}")

if __name__ == "__main__":

run_and_stream()

Explanation:

- The script runs a time-bounded query fetching recent events.

- Each result row is packaged as JSON and published immediately onto Pub/Sub.

- Downstream subscribers receive these messages almost instantaneously after running the script.

Step 3: Automate Frequent Execution (Optional)

You might want this script to run every few minutes for near-real-time updates.

Options include:

- Using Cloud Scheduler + Cloud Functions: Trigger the function which executes this code on schedule.

- Implementing it inside an always-on streaming app with incremental queries.

Example setting up Cloud Scheduler trigger:

gcloud scheduler jobs create pubsub bigquery-to-pubsub-job \

--schedule="*/5 * * * *" \

--topic=trigger-pubsub-topic \

--message-body="run"

And attaching this trigger topic to invoke the Cloud Function running your pipeline code.

Bonus: Consuming Messages from Your Pub/Sub Topic

Downstream services subscribe like so (Python example):

from google.cloud import pubsub_v1

subscriber = pubsub_v1.SubscriberClient()

subscription_path = subscriber.subscription_path("your-project-id", "your-subscription")

def callback(message):

print(f"Received message: {message.data.decode('utf-8')}")

message.ack()

streaming_pull_future = subscriber.subscribe(subscription_path, callback=callback)

print("Listening for messages...")

try:

streaming_pull_future.result()

except KeyboardInterrupt:

streaming_pull_future.cancel()

This listener receives each new analytics record instantly—perfect for updating dashboards or triggering alerts.

Best Practices & Tips

- Batch vs Streaming: For huge result sets consider batching rows or chunked publishing versus one-by-one.

- Message Size Limits: Keep each Pub/Sub message <10MB; serialize efficiently.

- Idempotency: Design consumers so re-processing messages creates no side effects.

- Error Handling: Robustly handle transient errors during publish calls—retry logic is key.

- Security: Use least privilege IAM roles; consider encryption options if needed.

In Summary

Exporting BigQuery results directly into Pub/Sub creates an event-driven analytics pipeline that powers real-time insight delivery. This approach replaces outdated batch export processes with continuous streams of actionable intelligence — helping you build data applications that respond instantly rather than reactively.

If your organization requires timely analytics-driven actions—whether alerting fraud attempts, adapting offers dynamically, or powering live operational dashboards—this Google Cloud combo unlocks significant speed and agility benefits.

Give it a try today! Bridge your BigQuery analytics output with Pub/Sub messaging—and transform how fast your business sees and uses its data.

Got questions or tips from your own setup? Drop a comment below — I’d love to hear what real-time challenges you’re solving!